Assessing the Performance of the Urban Partnership Agreements and Congestion Reduction Programs

Purpose of Evaluation

The United States Department of Transportation (U.S. DOT) is taking a bold step in addressing metropolitan area congestion by promoting and funding demonstrations of congestion pricing and other congestion reduction strategies.¹ The key policy question is how effective these strategies are. The evaluation is designed to answer this question by measuring the benefits, impacts, and value of each metropolitan area's approach to congestion reduction. In addition, evaluating multiple sites will show how well the strategies perform under different conditions.

Objectivity is an essential feature of an evaluation, and for that reason U.S. DOT has put in place a national evaluation team.² The national evaluation team is responsible for designing the evaluation, analyzing the data, and reporting the findings. It is working closely with each of the demonstration sites to collect the evaluation data.

What Will Be Learned?

At each site the evaluation will address the following four essential questions:

Objective Question 1

How much was congestion reduced in the area affected by the tolling, transit, telecommuting/travel demand management (TDM), and technology strategies? Examples of congestion reduction measures are:

- Reductions in vehicle trips made during peak/congested periods,

- Reductions in travel times during peak/congested periods,

- Reductions in congestion delay during peak/congested periods, and

- Reductions in the duration of congested periods.
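To make these measures concrete, the following minimal sketch computes them for one peak period. It is illustrative only: the 15-minute observation interval, the use of free-flow travel time as the baseline for delay, and the rule that an interval is "congested" when its travel time exceeds 1.5 times free-flow travel time are assumptions for this example, not definitions taken from the evaluation framework. The names `Interval` and `congestion_moes` are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Interval:
    """One hypothetical peak-period observation for a corridor segment."""
    travel_time_min: float  # observed average travel time, minutes
    vehicle_trips: int      # vehicles counted during the interval


def congestion_moes(intervals, free_flow_min, interval_len_min=15.0, congested_ratio=1.5):
    """Compute illustrative congestion MOEs for one peak period.

    Assumptions (not from the evaluation framework):
    - delay is travel time in excess of free-flow travel time;
    - an interval is congested when its travel time exceeds
      congested_ratio * free_flow_min.
    """
    if not intervals:
        raise ValueError("at least one interval is required")
    vehicle_trips = sum(i.vehicle_trips for i in intervals)
    mean_travel_time = sum(i.travel_time_min for i in intervals) / len(intervals)
    # Vehicle-minutes of delay: excess minutes per vehicle times vehicles in the interval.
    delay_veh_min = sum(
        max(0.0, i.travel_time_min - free_flow_min) * i.vehicle_trips for i in intervals
    )
    # Duration of the congested period: congested intervals times interval length.
    congested_min = interval_len_min * sum(
        1 for i in intervals if i.travel_time_min > congested_ratio * free_flow_min
    )
    return {
        "vehicle_trips": vehicle_trips,
        "mean_travel_time_min": mean_travel_time,
        "delay_vehicle_min": delay_veh_min,
        "congested_duration_min": congested_min,
    }


if __name__ == "__main__":
    # Hypothetical observations for one morning peak; free-flow travel time is 10 minutes.
    peak = [Interval(12, 100), Interval(18, 200), Interval(16, 150), Interval(11, 80)]
    print(congestion_moes(peak, free_flow_min=10))
```

Computing the same values for matching "before" and "after" periods yields the kinds of reductions listed above.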

Objective Question 2

What are the associated impacts of implementing the congestion reduction strategies? The impacts will vary by site depending upon the strategies used. Examples of potential measures include:

- Changes in roadway throughput and travel times during peak periods,

- Changes in transit ridership during peak periods,

- Mode shifts to transit, carpooling or vanpooling, or non-motorized modes,

- Changes in time of travel, route, or destination, or forgoing trips,

- Operational impacts,

- Safety impacts,

- Equity impacts,

- Environmental impacts,

- Impacts on goods movement, and

- Impacts on businesses.

Objective Question 3

What lessons were learned about the effects of outreach, political and community support, and the institutional arrangements put in place to manage and guide implementation?

Objective Question 4

What is the overall cost/benefit of the deployed set of strategies?
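One common way to answer a cost/benefit question is to discount annual benefits and costs to present value and compare them. The sketch below shows that standard calculation; the discount rate, the benefit and cost streams, and the function names are hypothetical, and the evaluation's actual benefit categories and discounting conventions may differ.

```python
def present_value(flows, rate):
    """Discount annual flows to present value; flows[0] is year 0 (undiscounted)."""
    return sum(flow / (1.0 + rate) ** year for year, flow in enumerate(flows))


def benefit_cost(benefits, costs, rate):
    """Return present-value benefits and costs, net benefit, and benefit/cost ratio.

    benefits and costs are hypothetical annual streams in constant dollars.
    """
    pv_benefits = present_value(benefits, rate)
    pv_costs = present_value(costs, rate)
    if pv_costs <= 0:
        raise ValueError("present value of costs must be positive")
    return {
        "pv_benefits": pv_benefits,
        "pv_costs": pv_costs,
        "net_benefit": pv_benefits - pv_costs,
        "bc_ratio": pv_benefits / pv_costs,
    }


if __name__ == "__main__":
    # Hypothetical: capital cost in year 0, then operating costs and benefits
    # in later years (millions of dollars), discounted at an assumed 7 percent.
    result = benefit_cost(benefits=[0, 12, 12, 12], costs=[25, 2, 2, 2], rate=0.07)
    print(result)
```

A ratio above 1, or equivalently a positive net benefit, means that discounted benefits exceed discounted costs.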

National Evaluation Framework

These four questions and the 4T congestion strategies provide the basis for the National Evaluation Framework (NEF). The NEF is the foundation for evaluating the demonstration sites because it defines the questions, analyses, measures of effectiveness, and associated data collection for the entire evaluation. The framework will drive the site-specific evaluation plans and test plans, and it will serve as a touchstone throughout the project to ensure that the site-specific activities support the national evaluation objectives. The NEF will be used to tailor the evaluation of each demonstration site to that site's strategies.

Within the NEF, each objective question is translated into specific hypotheses or subquestions, measures of effectiveness (MOEs) are specified, and the data needed to generate the MOEs are identified. An example from Objective Question 1, which deals with congestion, is the following:

| Hypotheses/Questions | Measures of Effectiveness | Data |
|---|---|---|

In this example, the travel time data would be collected either automatically, using sensor data already being collected by a traffic management system, or through a special study. Travel time data must be collected both before and after the strategies become operational in order to assess their impact. Beyond this one example, the evaluation will draw on a wide variety of quantitative and qualitative data and data collection techniques, including traveler surveys.
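A before/after comparison of travel times can be sketched as follows. This is a simple difference between the two periods under stated assumptions: the inputs are hypothetical lists of peak-period travel times in minutes, and the names `percent_change` and `compare_periods` are illustrative. It does not adjust for other changes between the periods, so it should not be read as the evaluation's analysis method.

```python
from statistics import mean, median


def percent_change(before, after):
    """Percent change from the before value to the after value (negative = reduction)."""
    if before == 0:
        raise ValueError("before value must be nonzero")
    return 100.0 * (after - before) / before


def compare_periods(before_min, after_min):
    """Summarize peak-period travel times (minutes) from the before and after periods.

    This is a simple before/after difference; it does not adjust for other
    changes between the two periods (for example, shifts in overall traffic demand).
    """
    if not before_min or not after_min:
        raise ValueError("both periods need at least one observation")
    summary = {}
    for name, stat in (("mean", mean), ("median", median)):
        b, a = stat(before_min), stat(after_min)
        summary[name] = {"before": b, "after": a, "pct_change": percent_change(b, a)}
    return summary


if __name__ == "__main__":
    # Hypothetical weekday peak travel times for one corridor, in minutes.
    before = [20.0, 22.5, 24.0, 26.0, 27.5]
    after = [18.0, 19.5, 20.0, 21.0, 21.5]
    for stat, row in compare_periods(before, after).items():
        print(f"{stat}: {row['before']:.1f} -> {row['after']:.1f} min ({row['pct_change']:+.1f}%)")
```

Reporting the median alongside the mean helps show whether a change is driven by a few unusually bad days.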

Steps in the Evaluation Process

The evaluation process for each of the demonstration sites consists of the following six steps:

- Evaluation Strategy: The purpose of the evaluation strategy is to reach consensus among the demonstration site partners and U.S. DOT on what should be evaluated and how. Using the National Evaluation Framework, preliminary hypotheses or questions tailored to the local conditions are identified along with data requirements, risks, and issues to be resolved.

- Evaluation Plan: This step documents the finalized hypotheses, MOEs, and needed data, and identifies the detailed test plans that need to be developed. At this stage the roles and responsibilities of the demonstration sites' partners and the national evaluation team are specified, as well as the needed resources and evaluation schedule.

- Evaluation Test Plans: Each test plan describes how the test will be conducted and identifies the evaluation personnel, equipment, supplies, procedures, schedule, and resources required to complete it.

- Data Collection: The evaluation data are collected using the methods defined in the test plans. The goal is to collect one year of pre-deployment (or "before") data and one year of post-deployment (or "after") data. Each demonstration site is responsible for collecting the data, except for information on the sites' lessons learned, which the national evaluation team will gather.

- Data Analysis: The data are analyzed using the approaches defined in the test plans. Partial analyses are performed early in both the pre- and post-deployment periods to assess data quality and correct any data collection problems. Where appropriate, a baseline analysis of the "before" data is performed, followed by a second analysis of the "after" data.

- Reporting of Findings: A report for each site presents the findings of all the analyses performed at that site. A national report synthesizing the findings across all the demonstration sites provides a comparative analysis of the performance of the congestion strategies to aid policy and resource decisions at the federal and local levels.
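An early data-quality check can be as simple as measuring how complete an automated data feed is. The following sketch flags days on which too few sensor records arrived. The 5-minute reporting interval, the 90 percent completeness threshold, the example dates, and the function name `flag_incomplete_days` are assumptions for illustration, not requirements of the evaluation test plans.

```python
from collections import Counter
from datetime import date, datetime, timedelta


def flag_incomplete_days(timestamps, days, interval_min=5, min_completeness=0.9):
    """Return {day: completeness} for each expected day below min_completeness.

    timestamps: datetimes of received sensor records, assumed to fall on
    interval boundaries (duplicate timestamps are counted once).
    days: dates for which data are expected; days with no records at all
    are reported with completeness 0.0.
    """
    expected_per_day = 24 * 60 // interval_min
    received = Counter(ts.date() for ts in set(timestamps))
    flagged = {}
    for day in days:
        completeness = received[day] / expected_per_day
        if completeness < min_completeness:
            flagged[day] = completeness
    return flagged


if __name__ == "__main__":
    # Hypothetical feed: one complete day, one partial day, and one day with an outage.
    start = datetime(2009, 3, 2)
    records = [start + timedelta(minutes=5 * k) for k in range(288)]
    records += [start + timedelta(days=1, minutes=5 * k) for k in range(100)]
    expected = [date(2009, 3, 2), date(2009, 3, 3), date(2009, 3, 4)]
    print(flag_incomplete_days(records, expected))
```

Passing the expected days explicitly matters: a day with a complete outage produces no records at all and would otherwise go unnoticed.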

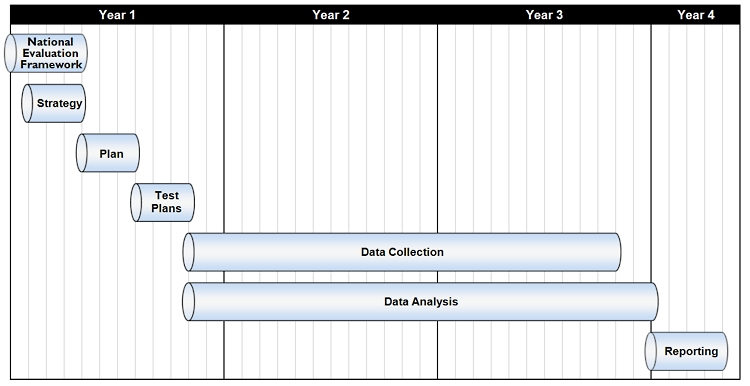

The Evaluation Schedule

The evaluation of each site will take about three and a half years. Because the demonstration sites are not starting at the same time, the individual site evaluations will be completed at different times. Overall, the evaluation of all the sites extends from April 2008 through June 2012. The accompanying graphic shows a general timeline for the evaluation process at an individual site, with vertical bars indicating the months and years for each step.

1 The congestion strategies are known collectively as the 4 Ts: tolling, transit, telecommuting/TDM, and technology. The demonstration sites proposed projects that aligned with these four strategies.

2 Battelle is the prime contractor. Subcontractors include the Center for Urban Transportation Research, Texas Transportation Institute, University of Minnesota, and Wilbur Smith Associates, along with consultants Caroline Rodier, Eric Schreffler, and Susan Shaheen.