2. The Deployment and Operating Experience

Two previous reports from this evaluation, the iFlorida Model Deployment Design Review Evaluation Report and the iFlorida Model Deployment—Deployment Experience Study, document FDOT's experience in designing and deploying the iFlorida Model Deployment. These reports covered the period from May 2003, when the Model Deployment began, through May 2006. This report covers activities that occurred from May 2006 through November 2007. In particular, it focuses on the challenges FDOT faced in the development of the traffic management software for the RTMC because this effort was the focus of iFlorida activities during this period. Details on other facets of the deployment can be found in other sections of this report.

2.1. Summary of Observations from Previous Reports

The iFlorida Model Deployment began with FDOT D5 taking advantage of its already strong relationships with other transportation stakeholders in the Orlando area. One of the advantages of these relationships was that the plans for iFlorida leveraged existing or planned capabilities from several of these stakeholders. For example, FDOT D5 planned to use toll tag readers to measure travel times on Orlando arterials, and OOCEA was already developing a system to use toll tag readers to measure travel times on the toll roads they operated. OOCEA agreed to use the travel time engine it was developing to compute arterial travel times from iFlorida toll tag readings. FDOT planned to use an existing network of microwave communication towers to support a statewide network of traffic monitoring stations.

By January 2004, FDOT had completed the high-level design of the iFlorida system. Operational plans for the deployment were not yet well-defined. The iFlorida Design Review report noted that the lack of well-defined operation plans:

...introduced the risk that either operational plans would have to be tailored to the capabilities of the deployed system or that late changes would have to be made to the functional requirements to support operational plans, once they were developed. This risk was probably slight for the data collection procurements because the data collection requirements were relatively clear and FDOT had conducted pilot testing of the various data collection approaches being used for iFlorida, further clarifying those requirements. The risk for the Conditions System, which will directly support iFlorida operations, is probably greater. The report noted that FDOT's strong "people themes" might overcome any difficulties that might arise because of uncertainties in the operational plans.

By April 2005, the deployment of most of the iFlorida field equipment was complete, though there had been some delays due to the two major hurricanes that passed through the Orlando area in 2004. Signs of delays in developing the CRS were beginning to show and, despite the fact that the software was due to be complete in May 2005, the requirements for this software were still evolving.

These problems compounded over the summer of 2005. Additional delays occurred with the CRS development. As elements of the CRS came online, FDOT began noting problems with the deployed field equipment. The problems were particularly pronounced with the arterial toll tag reader network: almost half of the readers were not reporting tag reads in June 2005. The lack of data meant that FDOT could not adequately test the CRS software. When problems were detected, it was not clear whether they were caused by poor data or by software errors.

Without diagnostic tools to assess system performance, identifying the root cause of problems that were observed often required extensive manual work; sometimes it was not possible. For example, problems with the arterial toll tag readers were identified by daily, painstaking, manual reviews of the self-diagnostic screens for each reader and archives of the individual reads that were made. Problems with the weather data feed were not detected until the CRS software began accessing the data. Early development of stand-alone tools to access these data feeds and diagnose problems with them would have allowed FDOT to identify and correct problems with these interfaces before they impacted RTMC operations.

| The design for TMC software should include stand-alone tools for diagnosing problems with the systems feeding data to it. |
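As an illustration of the kind of stand-alone diagnostic tool described above, the following minimal Python sketch flags toll tag readers that have stopped reporting. The reader IDs, the 30-minute silence threshold, and the shape of the archive data are assumptions made for the example; the report does not describe the actual reader archive format.

```python
from datetime import datetime, timedelta


def find_silent_readers(last_read_times, now, max_silence=timedelta(minutes=30)):
    """Return IDs of readers whose most recent tag read is missing or too old.

    last_read_times maps a reader ID to the datetime of its latest archived
    read, or to None if no read has ever been archived for that reader.
    """
    silent = []
    for reader_id, last_read in sorted(last_read_times.items()):
        if last_read is None or now - last_read > max_silence:
            silent.append(reader_id)
    return silent


# Hypothetical archive snapshot
now = datetime(2005, 6, 15, 12, 0)
archive = {
    "reader-A": now - timedelta(minutes=5),  # reporting normally
    "reader-B": now - timedelta(hours=6),    # silent for hours
    "reader-C": None,                        # never reported
}
print(find_silent_readers(archive, now))
```

A check of this kind, run on a schedule, could replace some of the daily manual review of reader self-diagnostic screens.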

In November 2005, FDOT hosted a public unveiling of the iFlorida system. The system was not perfect: only 70 to 80 percent of arterial toll tag readers were operational and the CRS had not been completely tested. However, the system did demonstrate the basic functionality expected of the iFlorida deployment. Arterial and highway travel times were being computed, and this information was being used to generate DMS and 511 messages and populate traveler information web pages.

In the months that followed the unveiling, FDOT began to coordinate with other regional stakeholders concerning how best to use the iFlorida infrastructure to support regional transportation management. Key to these plans was sharing transportation data through the CRS. At the same time, FDOT began to discover problems with the CRS. The CRS sometimes failed to correctly update DMS messages, and it sometimes miscomputed travel times from the raw travel time data it received. Incidents from the FHP CAD system were sometimes placed at the wrong map location.

Some problems also occurred with the less-used field equipment. The wireless network deployed along I-4 to support a bus security system was proving unreliable, and FDOT found it difficult to maintain the Statewide Monitoring System. The traffic monitoring equipment in the Orlando area, however, was becoming increasingly reliable. The arterial toll tag reader network and the I-4 loop detectors were operating reliably. FDOT had even deployed a series of detectors on I-95 and was beginning to integrate those into the iFlorida systems.

FDOT was expending significant resources diagnosing problems with the CRS, and this software was slowly improving. The Deployment Experience Study report noted that, by May 2006, "FDOT had finally overcome the most significant challenges and was beginning to rely on the CRS to manage its traffic management activities."

2.2. The Failure of the CRS

By early May 2006, it appeared that FDOT might have turned the corner on the problems it had faced with the iFlorida deployment. The toll tag readers used on the arterials and the loop detectors used on I-4, I-95, and the toll roads to estimate travel times (see Section 3) were working reliably. The active bug list for the CRS was down to eight items and FDOT was regularly using the CRS at the RTMC. A test had recently been conducted, during which the CRS had successfully updated the message on each FDOT DMS. (Despite the success of this test, RTMC operators continued the practice of deactivating signs in the CRS so they could reliably control the sign messages.) The 511 system was operating reliably and processing more than 100,000 calls per month (see Section 10). The CRS contractor was preparing for FDOT to begin testing the CFDW. As FDOT continued to use these systems more extensively, however, the agency discovered that a number of problems were still unresolved.

The interface with the DMSs was unreliable, with sign messages sometimes not updating when requested. RTMC operators continued to report cases where a DMS message set through CRS did not appear on the sign or appeared only briefly before being replaced with another message. RTMC operators also reported that the signs sometimes seemed to "disappear," dropping off the user interface screen entirely.

In May 2006, FDOT discovered the cause of the disappearing signs: the CRS did not maintain an internal table of known signs. Instead, it downloaded the list of active signs once-per-minute from the Cameleon 360 software used to interface between CRS and the message signs. When the CRS had difficulty reading the file used to transmit this information (e.g., if the CRS tried to access the file while it was being updated), it deleted all of the signs from the CRS operator interface. The Evaluation Team felt that the best way to have prevented this error from occurring would have been for the requirements to specify what action was appropriate when the Cameleon 360 failed to provide information about a sign. (An appropriate response might be to alert the operator that contact with the signs had been lost rather than simply deleting the signs from the user interface.) When the problem was first reported, it was occurring several times per day. By July 2006, the frequency of these conflicts had been reduced to several times per week.

| The TMC software requirements should address the actions that should be taken when different types of failures occur. |
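The failure handling suggested above (alert the operator instead of deleting the signs) can be sketched in Python as follows. This is a minimal illustration, not the CRS or Cameleon 360 design: the class name, the use of JSON for the sign list file, and the sign IDs are all assumptions, since the report does not describe the actual file format.

```python
import json


class SignRoster:
    """Keeps the last known list of signs and flags lost contact."""

    def __init__(self):
        self.signs = []            # last successfully loaded sign IDs
        self.contact_lost = False  # True when the latest poll was unreadable

    def refresh(self, raw_text):
        """Update from one polling cycle; raw_text is the file content."""
        try:
            parsed = json.loads(raw_text)
            if not isinstance(parsed, list):
                raise ValueError("expected a list of sign IDs")
        except ValueError:
            # Unreadable or partially written file: keep the previous list
            # and raise a flag that the operator interface can display.
            self.contact_lost = True
            return
        self.signs = parsed
        self.contact_lost = False


roster = SignRoster()
roster.refresh('["DMS-1", "DMS-2"]')  # normal poll
roster.refresh('["DMS-1", "DM')       # simulated read during a file update
print(roster.signs, roster.contact_lost)
```

With this approach, a failed read leaves the signs on the operator's screen and marks them as out of contact until the next successful poll.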

In September 2006, FDOT discovered that the CRS and Cameleon 360 software were applying opposite interpretations to priorities applied to messages: CRS interpreted higher numbers as having higher priority, while Cameleon 360 interpreted lower numbers as having higher priorities. This meant that messages sent by CRS as low priority messages often overwrote other, higher priority messages.
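A mismatch of this kind can be guarded against with an explicit conversion at the interface between the two systems. The Python sketch below is illustrative only: the 1-to-10 scale and the function name are assumptions, because the report does not state the priority ranges used by either product.

```python
MAX_PRIORITY = 10  # hypothetical shared scale of 1..10


def to_low_is_urgent(high_is_urgent_priority, max_priority=MAX_PRIORITY):
    """Convert a priority where larger means more urgent to a scale
    where smaller means more urgent."""
    if not 1 <= high_is_urgent_priority <= max_priority:
        raise ValueError("priority out of range")
    return max_priority + 1 - high_is_urgent_priority


# An urgent message (10 on the sender's scale) maps to 1 on the receiver's
# scale, and a routine message (2) maps to 9, so relative order is preserved.
print(to_low_is_urgent(10), to_low_is_urgent(2))
```

Stating the convention in the interface requirements, and testing it with one high-priority and one low-priority message, would expose an inversion before deployment.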

The travel time estimates for 511 and DMS travel time segments were sometimes miscalculated. This problem was first reported in January 2006 when OOCEA reported that message signs were misreporting travel times on OOCEA toll roads. FDOT reviewed the configuration files, which were believed to be the source of the errors, and the CRS contractor made changes to these files. However, spot checks by FDOT continued to identify additional travel time segments for which CRS was incorrectly computing travel times.

In September 2006, plans were made to perform "static tests" of the travel time calculations. The CRS contractor had stated that, because of the constantly fluctuating nature of the travel time information received by the CRS, it was difficult to test whether CRS was performing travel time calculations correctly. During a "static test," a set of constant travel times would be fed to the CRS so that the accuracy of the travel times computed by CRS could be more easily assessed. This testing was apparently the first time the CRS contractor had conducted an exhaustive test of the travel time calculations for every iFlorida travel time segment. These tests were repeated periodically over the remaining nine months of CRS usage by FDOT, with each test revealing additional problems that would be addressed before the tests were repeated.
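The idea behind a static test can be sketched in a few lines of Python. The sketch assumes that a segment's travel time is the sum of the travel times of the links it contains, which is an assumption for illustration; the report does not describe how the CRS combined its inputs. The segment names, link names, and the 60-second constant are likewise hypothetical.

```python
def run_static_test(segments, computed, constant_seconds=60):
    """Return the names of segments whose computed travel time differs
    from the value expected when every link reports the same constant.

    segments: dict mapping a segment name to its list of links.
    computed: dict mapping a segment name to the travel time (seconds)
              reported by the system under test.
    """
    failures = []
    for name, links in sorted(segments.items()):
        expected = constant_seconds * len(links)
        if computed.get(name) != expected:
            failures.append(name)
    return failures


segments = {"seg-1": ["a", "b"], "seg-2": ["b", "c", "d"]}
# Hypothetical system output: seg-2 is missing one link in its configuration
computed = {"seg-1": 120, "seg-2": 120}
print(run_static_test(segments, computed))
```

Because the inputs are constant, the expected output for every segment is known in advance, so every segment can be checked exhaustively in a single pass.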

The travel time estimates for 511 and DMS travel time segments were sometimes missing. The original iFlorida design called for the system to produce estimated travel times based on historical averages that could be used if equipment failures resulted in missing travel time data. The CRS/CFDW contractor had specified that the CFDW would produce these estimates, yet had not demonstrated this capability. In July 2006, FDOT identified this as one of their key concerns.
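The fallback behavior called for in the original design can be illustrated with a short Python sketch. The function names and the use of a simple mean over past observations are assumptions; the report does not specify how the historical averages were to be computed.

```python
from statistics import mean


def historical_average(past_observations):
    """Average past travel times (seconds) for one segment and time slot."""
    return mean(past_observations) if past_observations else None


def travel_time_with_fallback(live_seconds, historical_seconds):
    """Return (travel_time, source), preferring live data when available."""
    if live_seconds is not None:
        return live_seconds, "live"
    if historical_seconds is not None:
        return historical_seconds, "historical"
    return None, "unavailable"


history = historical_average([300, 320, 340])
print(travel_time_with_fallback(None, history))  # live data missing
print(travel_time_with_fallback(295, history))   # live data present
```

Returning the data source alongside the value lets downstream message generation distinguish a measured travel time from a typical one.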

The locations of incidents from the FHP CAD system were sometimes misinterpreted. In early 2006, FDOT reported that the CRS was incorrectly geo-coding incident information received through the FHP CAD interface. (See Section 5 for more information.) In July 2006, an example of this error occurred while the CRS contractor was demonstrating CRS and CFDW and the contractor began trying to correct the problem. The problem continued until, in November 2006, FDOT requested that the CRS contractor turn off the geo-location feature because the incorrect geo-coding was causing incidents to show up at the wrong locations on iFlorida traveler information Web sites and operator displays. With this feature turned off, RTMC operators were forced to review each FHP CAD incident and either delete it or identify the correct location for it manually. During peak travel periods, this required almost full-time attention from one RTMC operator. Although a number of attempts were made to correct this problem, FDOT never considered the CRS processing of FHP CAD data stable enough to use.

The CRS software was often unstable and crashed periodically. FDOT reported that the CRS would sometimes crash or begin working so slowly that a system restart was required. This problem recurred with less and less frequency as the CRS contractor applied fixes to the software.

The CRS filtering of weather data was sometimes unavailable. In June 2006, FDOT discovered that the CRS contractor had, in January 2006, quit processing weather data received from Meteorlogix. (See Section 11.) When weather data processing was resumed, FDOT discovered that the CRS was no longer filtering weather events before displaying them on the operator's interface. The correction for this problem had apparently been lost during other CRS upgrades.

The CFDW was unable to pass acceptance testing. In late May 2006, FDOT cancelled acceptance testing after the CFDW failed to pass a large number of tests. In July 2006, the CRS contractor reported that the CFDW passed integration testing and requested that FDOT begin the 30-day acceptance test period. FDOT disagreed that the system requirements had been satisfactorily met. For example, repeated efforts by FDOT and the Evaluation Team to log into the CFDW resulted in errors. FDOT also noted that the CFDW was expected to produce travel time estimates based on historical data that could be used if real-time measurements were unavailable. This capability was never demonstrated to FDOT.

In August 2006, the Evaluation Team reviewed the CFDW capabilities and noted the following problems (some problems related to accessing current data in the CFDW):

- The "current situation" map included incidents that should have been closed.

- Attempting to access DMS information resulted in a system crash.

- Some discrepancies were noted between events listed in the CFDW and those in the CRS.

- The link-node travel time calculation feature failed when an attempt was made to use it.

Other problems related to accessing historical data included:

- The historical speed map appeared to display current rather than historical data.

- There were discrepancies in the historical incident lists.

FDOT testing also identified a number of additional problems with the CFDW reporting features.

This set of key problems remained the center of focus throughout the period from June 2006 through April 2007, with the CRS contractor fixing bugs and FDOT conducting further CRS/CFDW tests, which revealed that the same types of problems continued to plague the software. The CRS contractor insisted that the system was ready for final testing, and FDOT began acceptance testing on April 3, 2007. The testing was discontinued on April 4 after FDOT noted that several CRS/CFDW features were incomplete and did not function as intended. FDOT agreed to grant an extension to the period of performance of the CRS/CFDW contract, due to expire on April 30, 2007. The CRS contractor wanted additional funds to continue work on the project.

On May 1, 2007, the CRS/CFDW contractor quit supporting FDOT and the CRS/CFDW development. FDOT attempted to follow the instructions in the administrative guides to maintain the system, but the CRS and CFDW both failed within a week. The CRS/CFDW contractor refused to assist FDOT in restarting the software. FDOT managed to restart the CRS services, but could not restart the CFDW. Within a week of the first re-start, the CRS had failed again. The frequency of CRS failures increased throughout May, with the final failure occurring by the end of the month.

2.3. Transitioning to New Traffic Management Software

After the CRS failed, FDOT quickly developed a set of operational procedures that allowed it to continue to support transportation management operations at the D5 RTMC without the CRS. The following list describes FDOT's challenges and reactions following the final failure of the CRS:

- Data from loop detectors on I-4 was still available through the Cameleon 360 software, which interfaced directly with these detectors. RTMC operators could review current travel speeds in order to estimate travel times, though operators found that reviewing traffic conditions on the video wall often sufficed.

- The OOCEA travel time server continued to compute travel time estimates for arterials and toll roads, though FDOT had no way to access these estimates. Instead, FDOT reviewed historical data to estimate typical travel times on these roads.

- RTMC operators continued to use the FHP Web site to access incident information from the FHP CAD system. In addition, an FDOT contractor developed a new interface to the FHP CAD data that re-organized the data to better support iFlorida traveler information systems. (See Section 5 for more details on this interface.)

- RTMC operators continued to update DMS messages directly through the Cameleon 360 software.

- The 511 system allowed FDOT to update 511 messages through a Web-based interface. RTMC operators began using this interface to manage 511 messages rather than recording messages through CRS. Because CRS had previously created the 511 travel time messages automatically, FDOT replaced the automatically generated messages with more generic ones based on typical travel times. When an incident occurred, operators overrode these messages with incident-specific ones.

- The iFlorida traveler information Web site was unavailable during this period. In its stead, FDOT developed static Web content that provided access to other traveler information resources, such as the FHP incident information Web site.

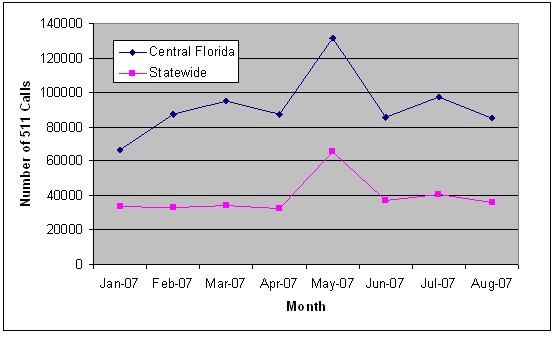

Through these efforts, FDOT was able to continue to perform most of its traffic management activities with only somewhat reduced capacity. One reflection of the success of these efforts was the log of 511 calls made during this period. After the CRS failure, 511 users continued to access the 511 service at much the same rate as before the failure. (The increase in 511 usage in May is a seasonal increase that also occurred in 2006.)

Figure 10. Number of iFlorida 511 Calls Before and After the CRS Failure

2.4. Developing SunGuide

FDOT, aware of the problems with the CRS and anticipating the potential that it might fail, had begun testing SunGuide, a replacement for the CRS, in November 2006. FDOT began by installing an existing version of the SunGuide software and configuring it to manage recently deployed ITS equipment on and near I-95. This included a set of loop and radar detectors to measure volume, speed, and occupancy on I-95, DMS signs on I-95, and trailblazer signs on key intersections located near I-95. (See Section 6 for more information on these message signs.) They had used the period from November 2006 through April 2007 to work out problems with this system, such as interfacing correctly with the message signs, computing travel times from the speed data, and configuring the system to automatically post travel time messages on the message signs.

When the CRS failed in May 2007, FDOT began expediting a transition to this new software. The agency negotiated a new schedule with the SunGuide contractor to provide a new version of that software that included most of the transportation management features critical to operations at the D5 RTMC, including ingesting loop detector data and computing travel times, ingesting travel time data from the OOCEA travel time server, automatically updating DMSs and the 511 system with travel time messages, and managing ad hoc DMS and 511 messages. The schedule called for most of these features of the software to be available by August 2007.

By September 2007, FDOT was operating a Beta release of the SunGuide 3.0 software. This version implemented most of the above-mentioned features. Difficulties with the interface to the 511 system, however, meant that RTMC operators were still updating 511 messages through a Web-based interface. The final release of this version of the software was issued in December 2007. The current version of this software (as of July 7, 2008) was released in June 2008. Because this occurred at the end of the evaluation period, the performance of the SunGuide software was not included in this report.

2.5. Lessons Learned: CRS versus SunGuide Development

One of the remarkable features of the SunGuide development process was how much more smoothly it went than the CRS development process. When asked about this, FDOT noted several differences that contributed to the improved process.

One item FDOT noted was that the SunGuide contractor spent much more time working with FDOT at the start of the project to understand FDOT's functional requirements than had the CRS contractor. With SunGuide, FDOT initially provided high-level functional requirements to the contractor, and the contractor mocked up screens to refine these requirements. Sometimes, the contractor would point FDOT to other Web sites as examples of what the contractor believed FDOT had requested. At other times, the SunGuide contractor would make "quick-and-dirty" changes to the version of SunGuide to demonstrate an understanding of FDOT's requirements.

| Devote time at the start of the project to ensure that the contractor shares an understanding of what is desired from the software. |

In contrast, the CRS contractor took FDOT's high-level requirements and attempted to build tools that complied with those requirements without much additional exploration. This was particularly problematic because FDOT had not developed a detailed Concept of Operations for iFlorida; having an iFlorida Concept of Operations might have helped the CRS contractor better understand FDOT's expectations. Numerous examples of this occurred during a meeting held in January 2005 to review the CRS Software Design Document.

- It was unclear whether the CRS should post travel delay or travel time messages on DMSs. Most iFlorida documentation indicated that signs would post travel times, though current FDOT D5 practice was to post travel delays. The CRS contractor had been developing the software under the assumption that travel delays would be used for most messages, but was uncertain of the type of information that would be provided for diversion messages (i.e., messages providing information about two or more alternate routes).

- There was disagreement on whether user-specific data access restrictions would exist. FDOT expected to provide versions of the CRS software to several iFlorida stakeholders so that each could use the software to manage their roadside assets and yet share control. For example, a stakeholder with 24-7 operations might turn control of their roadside assets over to the D5 RTMC during off hours. FDOT expected that the CRS would include capabilities for managing which organization had "control" over those assets. The CRS contractor expected all users to have the same privileges for accessing and changing data and suggested that FDOT use MOUs between the agencies to describe under what conditions different agencies should change CRS data.

- There was disagreement on shared control for managing messages on DMS signs. OOCEA planned to deploy a number of DMSs along Orlando toll roads, and their operational plans called for using non-CRS software to manage content on those signs while allowing the RTMC to monitor and update those signs. The CRS design did not allow for this type of shared control.

- It was unclear whether weather data should be filtered to include only severe weather alerts before being received by the CRS. The CRS had been designed to treat all incoming weather data as an alert that should be displayed to RTMC operators. The weather data provider expected to provide all weather data to the CRS, with the CRS processing the received data to determine which conditions should generate an alert.

- The road speed index, a factor indicating the expected impact of weather conditions on traffic, was removed from the weather data to be provided to the CRS. The weather data provider had expected that this index would be key to identifying weather conditions that might impact traffic. It could be used to determine if weather conditions should generate an alert or to help determine appropriate travel speeds for variable speed limit signs. The CRS contractor indicated that the data would not be useful within the CRS and proposed instead to include weather information in its approach for forecasting travel times.

- There was disagreement about how 511 messages would be generated. The CRS contractor believed that all messages would be recorded by the operators, with most messages recorded live and some messages from a reusable message library. FDOT representatives at the review meeting wanted to generate 511 messages by splicing together message phrases while allowing operators to override the spliced messages. FDOT upper management had previously stated that no spliced messages should be used in the CRS.

Because the iFlorida operational concepts were not clearly defined, key operational capabilities such as those listed above were still being debated as part of the review of the software design, only five months before the CRS was expected to be operational.

| Software projects are complex efforts that require skills that are not necessarily common to transportation agencies. Sustaining trained, certified, and knowledgeable staff is a key to success. |

The lack of a well-defined Concept of Operations for iFlorida might have had less impact if the CRS contractor had followed its initial plans for using a "spiral model" for developing the CRS software. This approach would have allowed FDOT and the CRS contractor to develop a shared understanding of iFlorida operations through repeated opportunities for FDOT to provide feedback to the contractor based on incremental releases of the CRS software. This approach was not used.

If FDOT D5 had more stringently followed a systems engineering process, some of these problems might have been avoided. In fact, the FDOT ITS Office had developed a systems engineering approach for ITS projects in Florida, and FDOT D5 chose not to apply that approach. FHWA had also agreed to provide to FDOT personnel responsible for iFlorida software acquisition a course entitled ITS Software Acquisition.1 FDOT D5 declined FHWA's offer.

FDOT also noted that the process of refining the functional requirements required a great deal more upfront expense than the agency was accustomed to spending on non-software projects. In traditional FDOT projects, such as widening a road, the specifications are set by existing FDOT standards and there are few questions about the functional requirements of the resulting product. With software projects, there are no existing standards specifying requirements. Many functional requirements will be unique to a particular organization, so significantly more time is required to develop a mutual understanding of those requirements.

| The cost structure for a software project is significantly different than that for traditional DOT projects. |

In the case of the CRS, FDOT relied on the CRS contractor to convert the functional requirements into detailed technical requirements. In many cases, the CRS contractor accepted the functional requirements provided by FDOT as the detailed requirements. This was particularly problematic because some FDOT functional requirements were ambiguous or otherwise flawed. This affected the development of the CRS software in that the CRS requirements continued to evolve throughout the development process. It also affected the testing of the software in that testing was performed against high-level, ambiguous functional requirements. As an example, one CFDW requirement was that the software would include "a data mining application to facilitate the retrieval of archived data." The test for this requirement was "Verify that the CFDW includes Crystal Reports or similar software." No more detailed requirements were developed, and no further testing of the CFDW data mining capabilities was performed.

Another example of an ambiguous, high-level functional requirement was the FDOT specification that "Users shall be able to view/extract archived data via fifteen (15) standard reports." This requirement made its way into the CRS requirements specification, and the associated test was to "Confirm the existence of at least fifteen standard reports" with the acceptance criteria being "Pass if at least fifteen standard reports are available." The CRS contractor should have developed detailed requirements based on the high-level requirements provided by FDOT and developed tests based on the detailed requirements.

| Requesting documentation of detailed testing performed by the contractor can help ensure that appropriate tests are performed. |

Because no detailed requirements were developed, it was difficult for FDOT to verify that detailed testing had been performed. For example, if a detailed requirement regarding the accuracy of the travel time calculations had been present, systems engineering practices would have required development of an approach for testing that accuracy and documentation of the results of those tests. This would have also provided a mechanism for FDOT to review the proposed testing process and test results. If detailed requirements had been available and systems engineering practices had been followed, errors in the travel time calculations might have been detected before the system was released and inaccurate travel time messages were broadcast to the public.

Another problem FDOT faced with operating the CRS was that it was very difficult to administer. When FDOT specified the high-level functional requirements, it did require that the CRS include certain administrative capabilities, such as adding and deleting users and modifying the set of roads managed by the system. However, the CRS contractor did not provide any additional information to FDOT about how system administration would work and the CRS contractor performed the initial configuration. When problems with the travel time calculations were traced to inaccurate information in the configuration data, FDOT discovered that configuring the system required hand-modifying a large number of text files and that the approach for doing so was not well documented. This meant that many configuration activities could only be performed reliably by the CRS contractor. It also meant that the configuration process was error prone, which may have been one factor that led to the repeated cycles of performing static tests on the travel time calculations and correcting configuration problems that were discovered, only to have future tests reveal additional problems.

| Being involved in the initial configuration of the system can help determine whether the configuration process is overly complex. |

With the SunGuide software, the SunGuide contractor provided FDOT with the software and FDOT was responsible for configuring it as they wanted. (The SunGuide contractor did provide support during this process.) This approach simultaneously configured the system, trained FDOT in the administration process, and allowed FDOT to verify that the configuration process was easy to use.

Part of the reason for the difference in the administration processes might be related to a difference in how the two contractors intended the software to be used. In both cases, the software was based on tools that were being developed for use at multiple locations. With the CRS, the CRS contractor manages most of the deployments: they operate the servers and handle the configuration. With SunGuide, the SunGuide contractor provides software that is operated on DOT-owned servers and configured by the DOTs. The CRS contractor can afford more arcane configuration processes because the configuration is performed by CRS staff members expert in the process. The SunGuide contractor must have simpler, more robust configuration processes because the end-user will be performing those operations.

| Contractual requirements can be used to ensure that software fixes can be implemented without disrupting operations. |

FDOT also believed that the SunGuide architecture as a whole, not just the configuration process, was more robust than the CRS architecture. One example FDOT noted was that the SunGuide contractor was able to modify the software without bringing down the entire system. With the CRS, the contractor usually had to shut down the entire system in order to install a patch. This meant that the CRS contractor tended to provide large patches less frequently, while the SunGuide contractor could provide smaller, more frequent patches. This allowed the SunGuide contractor to implement fixes for small bugs in the SunGuide software quickly. It also made it simpler for FDOT to test the patches, since each patch typically covered only a small number of problems.

Finally, FDOT noted that it would have been useful to have had tools available to help monitor and test individual components of the software. When problems were detected with the CRS, FDOT and the CRS contractor often spent more time trying to locate where problems were occurring than fixing them. FDOT could have obtained these tools either by including them in the CRS requirements or by contracting for an independent verification and validation effort on the CRS software.

| Tools for testing of individual software components can help pinpoint the root cause of problems that may occur. |

The problem of testing individual software components was particularly severe when multiple organizations were involved. For example, the toll tag reader data was collected at field devices maintained by one FDOT contractor, transmitted through the FDOT network to an FDOT server, and then transmitted across the FDOT and OOCEA networks to the OOCEA travel time server, which was running software developed by a different contractor. The resulting travel times were pushed back across the OOCEA and FDOT networks to the CRS, which used the data to produce travel time estimates and published those travel times to message signs and the 511 system. When a problem occurred, it was difficult to identify at what point in this chain the problem first appeared, which sometimes resulted in more finger-pointing than problem solving. It would have been useful to have had a tool that could sample each of the data streams involved in the travel time calculations so that the location at which an error occurred could be more easily identified.
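
The data-stream sampling tool described above can be sketched as a set of probes, one per hand-off in the chain, run in data-flow order so that the first failing stage is reported. This is a minimal illustration, not a description of any tool FDOT used. The stage names, sample record fields, and validity checks are hypothetical, and a real tool would pull samples from the live interfaces rather than from in-memory functions.

```python
from typing import Callable, Iterable, NamedTuple, Optional


class Stage(NamedTuple):
    name: str                      # where in the chain the sample is taken
    sample: Callable[[], list]     # returns recent records seen at that point
    check: Callable[[list], bool]  # True if the sample looks healthy


def first_failing_stage(stages: Iterable[Stage]) -> Optional[str]:
    """Walk the chain in data-flow order; return the first stage whose sample fails."""
    for stage in stages:
        try:
            records = stage.sample()
        except Exception:
            # A probe that cannot be read at all counts as a failure at that stage.
            return stage.name
        if not stage.check(records):
            return stage.name
    return None


def has_records(records):
    return len(records) > 0


def plausible_travel_times(records):
    # Hypothetical sanity bounds: positive and under three hours.
    return has_records(records) and all(0 < r["minutes"] < 180 for r in records)


if __name__ == "__main__":
    chain = [
        Stage("field readers", lambda: [{"tag": "a1", "reader": "R1"}], has_records),
        Stage("FDOT server", lambda: [{"tag": "a1", "reader": "R1"}], has_records),
        Stage("travel time server output",
              lambda: [{"segment": "SEG-01", "minutes": -4.0}],
              plausible_travel_times),
        Stage("CRS published travel times", lambda: [], has_records),
    ]
    print(first_failing_stage(chain))
```

In this example the probe reports the travel time server output, because that is the first point in the chain where the sample fails its check, even though the downstream CRS sample is also unhealthy.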

2.6. Summary and Conclusions

FDOT experienced significant difficulties in reaching its objectives with the iFlorida Model Deployment. Early in the deployment, the problems were centered on the deployed field equipment, with a large number of the arterial toll tag readers having failed by the time the software systems were in place to use that data. As FDOT brought the field equipment back online, problems with the CRS software became more apparent. From November 2005 through November 2007, FDOT's efforts were focused on eliminating the problems with the CRS software. Through this process, FDOT identified a number of lessons learned that might benefit others attempting to deploy a new (or upgrade an existing) traffic management system.

- Following sound systems engineering practices is key for successfully deploying a complex software system like the CRS. The CRS project began with inadequate requirements, and a process for refining those into detailed requirements was not followed. Systems engineering approaches that are less reliant on detailed requirements, such as a spiral model, were not employed.

- Staff overseeing development of a complex software system like the CRS should be experienced in the software development process. FDOT D5 had no ITS staff with that experience and declined FHWA's offer to provide a training course for the FDOT iFlorida staff.

- In a complex system, like the software system used to manage TMC operations, the system design should include tools for diagnosing problems that might occur in individual subsystems.

  - When errors occurred with the arterial travel time system, it was difficult to identify whether the error was caused by the readers, the travel time server that computed travel times from the reader data, the CRS that used the computed travel times, or the interfaces and network connections between these systems.

  - When errors occurred with updating DMS messages, it was difficult to identify whether the CRS was sending incorrect data to the system that interfaced with the signs or the sign interface was not updating the signs correctly.

- One of the biggest sources of problems in a complex system is the interfaces between subsystems. With the CRS, long-standing problems occurred with the interfaces between the CRS and the FHP CAD system, the weather provider, the travel time server, and the DMS signs.

  - One approach for reducing the risk associated with these interfaces would be to adopt ITS standards that have been used effectively in other, similar applications.

  - Another approach for reducing the risk associated with these interfaces would be to develop the interfaces early in the development process and test the interfaces independently of the other parts of the system.

  - A third approach would be to include interface diagnostic tools that could sample data passing through the interface and assess whether the interface was operating correctly.

- It is useful to have alternate methods for accessing data and updating signs and 511 messages in case the primary tools for doing so are not functioning correctly.

  - The I-4 loop detector data was available through the Cameleon 360 software after the CRS failed, enabling RTMC operators to continue to estimate I-4 travel times.

  - The backup interface for updating DMS messages allowed FDOT to disable the signs in the CRS and manage the sign messages through the backup interface when the CRS interface for updating the sign messages proved unreliable.

  - The backup interface for updating 511 messages allowed FDOT to continue to provide 511 services when the CRS failed.

- It is important to devote a significant amount of time at the start of a software development project to ensure that all parties share a mutual vision for how the resulting software should operate.

  - With the CRS, FDOT had envisioned that the CRS would accept data from a variety of sources and filter that data before providing it to the RTMC operators. The CRS contractor expected some of the data to be filtered to include only critical events before being provided to the CRS.

  - With SunGuide, the contractor spent significant time with FDOT, ensuring that contractor staff understood FDOT's functional requirements and how those requirements would be implemented before beginning software development.

- Testing is a critical part of a software development project. While testing is primarily the responsibility of the contractor, the lead agency may want to review the test plans and documentation of test results during the development, including unit and integration testing. The testing should include both the software and the configuration information used to initialize the system. To achieve this, the contractual process must include details related to the visibility of the testing process.

  - Errors in the CRS travel time calculations were not discovered until OOCEA reviewed the resulting travel times, and tools to test them (such as the static tests) were not available until more than 6 months after the problem was first discovered. Better testing for configuration data validity might have prevented errors from reaching the production software. For example, tools could have been developed to verify computed travel times independently. Tools that generated a map-based display of the configuration data would have provided an alternate means of testing that data.

  - More detailed tests of the component that related FHP CAD incidents to roadways should have identified the fact that the software sometimes miscalculated incident locations.

  - Tests of the CRS interface to the DMSs should have revealed some of the problems that FDOT experienced with this interface, such as the fact that the Cameleon 360 and CRS software had opposite interpretations of sign priorities.

- The system requirements should include requirements related to configuration and administration of the system.

  - With the CRS, the configuration was performed by the CRS contractor. When errors with the configuration were identified, FDOT discovered that the configuration was too complex to correct without assistance from the CRS contractor. Even the CRS contractor failed to eliminate all of the configuration errors in the CRS. Requirements that described the configuration process might have resulted in a simpler, less error-prone configuration process.
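
The testing lesson in the list above suggests tools that verify computed travel times independently. Such a check could take roughly the following form. This is a sketch under stated assumptions: the read format (tag ID, reader ID, timestamp in seconds), the use of the median of matched trips, and the 20 percent tolerance are illustrative choices, not the method used by the OOCEA travel time server or the CRS.

```python
from statistics import median


def matched_travel_times(reads, start_reader, end_reader):
    """Match each tag's read at start_reader with its next read at end_reader.

    reads: iterable of (tag_id, reader_id, timestamp_seconds) tuples.
    Returns a list of travel times in seconds.
    """
    start_seen = {}
    times = []
    for tag, reader, ts in sorted(reads, key=lambda r: r[2]):
        if reader == start_reader:
            start_seen[tag] = ts
        elif reader == end_reader and tag in start_seen:
            # Consume the start read so one trip is counted only once.
            times.append(ts - start_seen.pop(tag))
    return times


def check_reported_time(reads, start_reader, end_reader, reported_seconds,
                        tolerance=0.20):
    """Compare a reported travel time with the median of independently matched trips.

    Returns (ok, independent_seconds); independent_seconds is None when no trips match.
    """
    times = matched_travel_times(reads, start_reader, end_reader)
    if not times:
        return False, None
    independent = median(times)
    ok = abs(reported_seconds - independent) <= tolerance * independent
    return ok, independent


if __name__ == "__main__":
    # Hypothetical raw reads: two vehicles seen at both readers, one only at R2.
    reads = [
        ("t1", "R1", 0), ("t2", "R1", 10),
        ("t1", "R2", 300), ("t2", "R2", 330), ("t3", "R2", 340),
    ]
    print(check_reported_time(reads, "R1", "R2", reported_seconds=320))
    print(check_reported_time(reads, "R1", "R2", reported_seconds=600))
```

Because the check works from the raw tag reads rather than from the configured segment definitions, a large disagreement with the reported value points to a problem somewhere between the readers and the published travel time, including the configuration data.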

The deployment experience also highlighted the challenges of taking a "top-down" approach rather than a "bottom-up" approach to development. FDOT wavered in its leadership over the contractors after expressing only a vision for the system operation. Guidance provided to contractors by lower-level FDOT staff was often overridden by upper management, and lower-level staff had little or no voice in expressing concerns. FDOT also did not provide a well-developed document to describe the traffic and travel management operations the iFlorida systems were expected to support. This meant that some iFlorida contractors were provided with limited documentation regarding the requirements of the systems they were developing and received contradictory feedback from different FDOT staff. Many critical client-contractor relations suffered as a result, and the continued miscommunication magnified the errors of each successive phase of the development until it became too difficult to manage.

Despite these failures in developing the CRS, the existence of other methods of performing key operations, such as updating 511 and DMS messages, meant that FDOT was able to continue performing these traffic management operations.

1 This course is offered by the US DOT Research and Innovative Technology Administration (RITA). Information on the course is available at www.pcb.its.dot.gov.