
| OBJECTIVES | Confirm that the installed system meets the user’s needs and is effective in meeting its intended purpose. |
|---|---|
| Description | System validation is an assessment of the operational system. Validation ensures that the system meets the intended purpose and the needs of the system’s owner and stakeholders. In addition, validation refers to the actions taken at each step in the development process to ensure that the outputs of the step are validated by the stakeholders. |
| Context | |
| INPUT: Sources of Information | · Concept of Operations · Validation Plan · Verified and accepted system |
| PROCESS: Key Activities | · Plan validation · Perform in-process validation · Perform system validation and document results |
| OUTPUT: Process Results | · Validation procedures · Validation results |

Validation is the confirmation that the need(s) identified in the Concept of Operations have been met by the new operational system. Recall that needs are different from requirements in that requirements identify the correct operation and performance of the system in the specified environment, and needs identify the expected impact of the new system on the environment.

For example, an off-board fare collection system will have requirements for accepting payment from travelers and dispensing payment instruments with associated stored value or trip contracts. That same off-board fare collection system may have the need to reduce the dwell time of a bus at the bus stop (compared to, for example, an on-board fare collection system that the new system is replacing). Verification testing confirms that the system correctly collects fares and dispenses tickets/contracts in the expected environment. Validation tests whether the bus dwell time (e.g., the average or median time stopped at a bus stop, normalized by passenger volume at the stop) has in fact been reduced by the expected amount.
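The dwell-time measure in this example reduces to a short calculation. The Python sketch below assumes hypothetical stop-level records of (seconds stopped, passengers boarding plus alighting) and a hypothetical 10% reduction target standing in for “the expected amount”. Normalizing as seconds per passenger and taking the median is one reasonable reading of the measure, not a prescribed method.

```python
from statistics import median

# Hypothetical stop-event record: (dwell_seconds, passengers_boarding_plus_alighting)
Observation = tuple[float, int]


def normalized_dwell(observations: list[Observation]) -> float:
    """Median dwell time per passenger over stop events that had passenger activity."""
    per_passenger = [secs / pax for secs, pax in observations if pax > 0]
    if not per_passenger:
        raise ValueError("no stop events with passenger activity")
    return median(per_passenger)


def dwell_reduction(before: list[Observation], after: list[Observation]) -> float:
    """Fractional reduction in normalized dwell time (0.25 means 25% lower after deployment)."""
    b = normalized_dwell(before)
    a = normalized_dwell(after)
    return (b - a) / b


# Illustrative data only; not from any real deployment.
before = [(60.0, 10), (45.0, 9), (80.0, 16)]
after = [(40.0, 10), (36.0, 9), (64.0, 16)]

TARGET_REDUCTION = 0.10  # hypothetical expected amount from the Concept of Operations
reduction = dwell_reduction(before, after)
print(f"reduction = {reduction:.0%}, need met: {reduction >= TARGET_REDUCTION}")
# prints "reduction = 20%, need met: True"
```

Normalizing by passengers keeps a busier "after" period from masking a real improvement; the median limits the influence of unusual stop events such as wheelchair ramp deployments.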

Validation really can’t be completed until the system is in its operational environment and is being used by the real users. For example, validation of a new signal control system can’t really be completed until the new system is in place and we can see how effectively it controls traffic.

Of course, the last thing we want to find is that we’ve built the wrong system just as it is becoming operational. This is why the systems engineering approach seeks to validate the products that lead up to the final operational system to maximize the chances of a successful system validation at the end of the project. This approach is called in-process validation and is described in the activities section.

System does not meet a key need: The risk being managed is that the system does not have the desired impact on the environment into which it is deployed, i.e., that it does not meet the needs.

Validation is testing that the needs documented in the Concept of Operations have been satisfied by the deployment of the new system. The validation process has three primary activities:

Plan Validation

Planning, with stakeholder involvement, starts at the beginning of the project timeline. The plan identifies who will be involved, what will be validated, what the schedule for validation is, and where the validation will take place. An additional aspect of planning is the definition of the validation strategy, which describes how the validation will take place and what resources will be needed. For example, the strategy determines whether a “before” study, an “after” study, or both will be needed. If a “before” study is needed, it must be completed prior to deployment of the system.
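The planning elements listed above (who, what, when, where, and the before/after strategy) can be captured as a simple record and checked for the scheduling constraint on the “before” study. This is a minimal sketch; the `ValidationPlan` class, its field names, and the need IDs such as `N-1` are hypothetical illustrations, not a format defined by the Validation Plan.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class ValidationPlan:
    """Hypothetical record of the planning elements named in the text."""
    participants: list[str]        # who will be involved
    needs_to_validate: list[str]   # what will be validated (need IDs from the ConOps)
    location: str                  # where the validation will take place
    deployment_date: date          # when the system becomes operational
    validation_start: date         # scheduled start of system validation
    before_study: bool = False     # strategy: is a "before" study needed?
    after_study: bool = True       # strategy: is an "after" study needed?
    before_study_end: Optional[date] = None

    def problems(self) -> list[str]:
        """Return scheduling and strategy problems; an empty list means none were found."""
        issues = []
        if not self.participants:
            issues.append("no stakeholders assigned")
        if not (self.before_study or self.after_study):
            issues.append("strategy defines neither a before nor an after study")
        if self.before_study:
            if self.before_study_end is None:
                issues.append("before study has no scheduled end date")
            elif self.before_study_end >= self.deployment_date:
                issues.append("before study must finish prior to deployment")
        if self.validation_start < self.deployment_date:
            issues.append("system validation is scheduled before the system is operational")
        return issues


plan = ValidationPlan(
    participants=["system owner", "operators", "maintenance group"],
    needs_to_validate=["N-1", "N-2"],
    location="pilot corridor",
    deployment_date=date(2024, 6, 1),
    validation_start=date(2024, 9, 1),
    before_study=True,
    before_study_end=date(2024, 7, 1),
)
print(plan.problems())  # ['before study must finish prior to deployment']
```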

An initial Validation Plan was created with the Concept of Operations earlier in the life cycle (see Section 3.3.5). The performance measures identified in the Concept of Operations forced early consideration and agreement on how system performance and project success would be measured.

It is important to think about the desired outcomes and how they will be measured early in the process because some measures may require data collection before the system is operational to support “before and after” studies. For example, if the desired outcome of the project is an improvement in incident response times, then data must be collected before the system is installed to measure existing response times. This “before” data is then compared with data collected after the system is operational to estimate the impact of the new system. Even with “before” data, determining how much of the difference between “before” and “after” data is actually attributable to the new system is a significant challenge because there are many other factors involved. Without “before” data, validation of these types of performance improvements is impossible.
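The before-and-after comparison, and the difficulty of attributing the change to the new system, can be illustrated with a small calculation. The sketch below swaps in one common technique that the text does not prescribe: a difference-in-differences estimate against a comparison area that did not receive the system. The response-time data are hypothetical.

```python
from statistics import mean


def before_after_change(before: list[float], after: list[float]) -> float:
    """Change in mean response time (minutes); negative means faster after deployment."""
    return mean(after) - mean(before)


def difference_in_differences(treated_before: list[float], treated_after: list[float],
                              control_before: list[float], control_after: list[float]) -> float:
    """Change in the deployment area minus the change in a comparison area.

    The comparison area absorbs factors that affect both areas (weather, staffing,
    traffic growth), so the remainder is a rough estimate of the system's effect.
    """
    return (before_after_change(treated_before, treated_after)
            - before_after_change(control_before, control_after))


# Hypothetical incident response times in minutes. Without the *_before lists,
# neither estimate can be computed.
treated_before = [30.0, 34.0, 26.0]   # mean 30
treated_after = [22.0, 24.0, 20.0]    # mean 22
control_before = [31.0, 29.0, 30.0]   # mean 30
control_after = [28.0, 26.0, 27.0]    # mean 27

naive = before_after_change(treated_before, treated_after)
did = difference_in_differences(treated_before, treated_after, control_before, control_after)
print(naive, did)  # -8.0 -5.0
```

Here the naive comparison credits the system with an 8-minute improvement, but 3 minutes of that also appeared in the comparison area, leaving about 5 minutes plausibly attributable to the system.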


In addition to objective performance measures, the system validation may also measure how satisfied the users are with the system. This can be assessed directly using surveys, interviews, in-process reviews, and direct observation. Other metrics that are related to system performance and user satisfaction can also be monitored, including defect rates, requests for help, and system reliability. Don’t forget the maintenance aspects of the system during validation – it may be helpful to validate that the maintenance group’s needs are being met as they maintain the system.
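User satisfaction and related metrics can be summarized per user group, which makes it easy to check the maintenance group's needs separately from the operators'. This is a minimal sketch assuming a hypothetical 1 to 5 survey scale, an assumed “satisfied” cutoff of 4 or higher, and help requests normalized per user per month; none of these conventions come from the text.

```python
from statistics import mean


def satisfaction_summary(responses: dict[str, list[int]]) -> dict[str, dict[str, float]]:
    """Summarize 1-5 survey scores for each user group.

    'share_satisfied' counts scores of 4 or 5, an assumed cutoff.
    """
    summary = {}
    for group, scores in responses.items():
        if not scores:
            raise ValueError(f"{group}: no responses")
        if any(s < 1 or s > 5 for s in scores):
            raise ValueError(f"{group}: scores must be on a 1-5 scale")
        summary[group] = {
            "mean": float(mean(scores)),
            "share_satisfied": sum(s >= 4 for s in scores) / len(scores),
        }
    return summary


def help_requests_per_user_month(requests: int, users: int, months: int) -> float:
    """Help-desk requests normalized by user count and observation period."""
    return requests / (users * months)


# Hypothetical responses; the maintenance group is surveyed alongside operators.
responses = {"operators": [5, 4, 3, 4], "maintenance": [2, 3, 4, 3]}
summary = satisfaction_summary(responses)
rate = help_requests_per_user_month(requests=12, users=10, months=3)
print(summary)
print(rate)
```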

Detailed validation procedures may also be developed that provide step-by-step instructions on how support for specific user needs will be validated. At the other end of the spectrum, the system validation could be a set time period when data collection is performed during normal operations. This is really the system owner’s decision – the system validation can be as formal and as structured as desired. The benefit of detailed validation procedures is that the validation will be repeatable and well documented. The drawback is that a carefully scripted sequence may not accurately reflect “intended use” of the system.
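A detailed validation procedure can be represented as scripted steps, each traced to a need, with the observed outcome recorded so that an as-run copy can be kept. The `Step` and `Procedure` classes, the need ID `N-3`, and the step text are hypothetical; this only sketches the idea of a repeatable, documented procedure.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Step:
    need_id: str                    # need from the Concept of Operations this step supports
    instruction: str                # what the user does
    expected: str                   # expected outcome
    observed: Optional[str] = None  # filled in when the step is run
    passed: Optional[bool] = None


@dataclass
class Procedure:
    title: str
    steps: list[Step]

    def record(self, index: int, observed: str, passed: bool) -> None:
        """Record the as-run outcome of one step (0-based index)."""
        step = self.steps[index]
        step.observed = observed
        step.passed = passed

    def as_run(self) -> list[str]:
        """One line per step for the as-run record, including steps not yet run."""
        lines = []
        for i, s in enumerate(self.steps, start=1):
            status = "NOT RUN" if s.passed is None else ("PASS" if s.passed else "FAIL")
            lines.append(f"{i}. [{s.need_id}] {s.instruction} (expect: {s.expected})"
                         f" -> {status}: {s.observed or '-'}")
        return lines


proc = Procedure("Incident entry", [
    Step("N-3", "Operator logs a test incident", "incident appears on map within 5 s"),
    Step("N-3", "Operator closes the incident", "incident is removed from map"),
])
proc.record(0, "appeared after 3 s", True)
print("\n".join(proc.as_run()))
```

The trade-off noted above still applies: a scripted sequence like this is repeatable and well documented, but it may not reflect how operators actually use the system.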

In-Process Validation

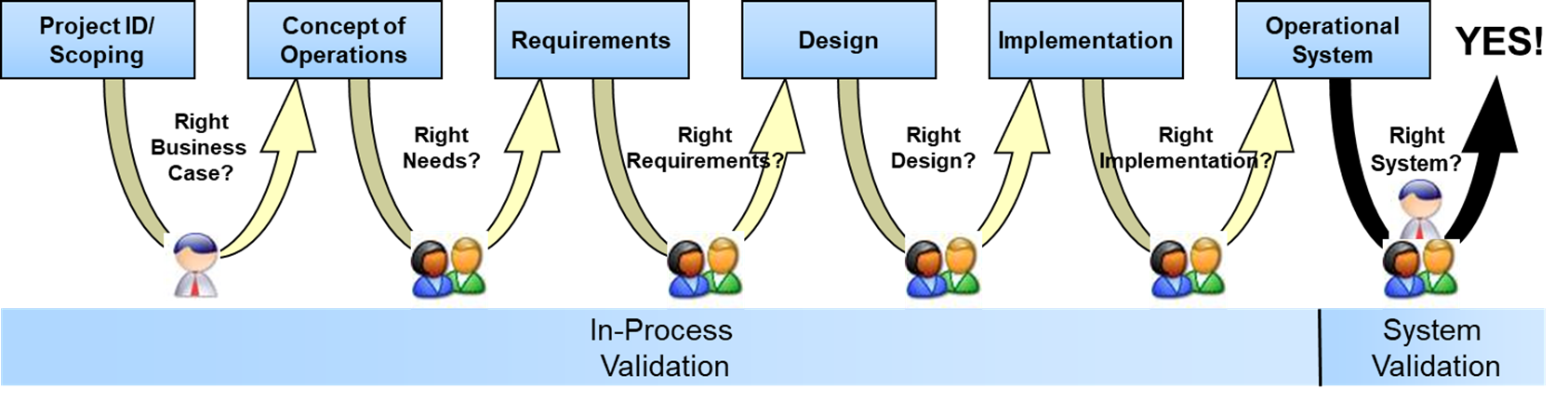

One of the key principles of systems engineering is stakeholder involvement in the development process. This is why the systems engineering approach seeks to engage stakeholders to validate the products that lead up to the final operational system to maximize the chances of a successful system validation at the end of the project. This approach is called in-process validation and is shown in Figure 22. As depicted in the figure, validation was performed on an ongoing basis throughout the process:

· The business case for the project was documented and validated by senior decision makers during the initial project identification and scoping.

· User needs were documented and validated by the stakeholders during the Concept of Operations development, i.e., “Are these the right needs?”

· Stakeholder and system requirements were developed and validated by the stakeholders, i.e., “Do these requirements accurately reflect your needs?”

· As the system was designed and the software was created, key aspects of the implementation were validated by the users. Particular emphasis was placed on validating the user interface design since it has a strong influence on user satisfaction.

Figure 22: In-Process Validation Reduces Risk

(Source: FHWA)

Since validation was performed along the way, there should be fewer surprises during the final system validation that is discussed in this step. The system will have already been designed to meet the user’s expectations, and the user’s expectations will have been set to match the delivered system.

Perform System Validation and Document Results

The system is validated according to the Validation Plan. The system owner and system users actually conduct the system validation. The validation activities are documented and the resulting data, including system performance measures, are collected. If validation procedures are used, then the as-run procedures should also be documented.

The measurement of system performance should not stop after the validation period. Continuing performance measurement will enable you to determine when the system becomes less effective. The desired performance measures should be reflected in the system requirements so that these measures are collected as a part of normal system operation as much as possible. Similarly, the mechanisms that are used to gauge user satisfaction with the system (e.g., surveys) should be used periodically to monitor user satisfaction as familiarity with the system increases and expectations change.
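Continuing measurement can be turned into a simple check for when the system becomes less effective. This sketch assumes a hypothetical monthly measure where lower is better (e.g., incident response time), compares a trailing average against the value established during validation, and flags periods that degrade beyond an assumed tolerance; the window and tolerance are illustrative choices.

```python
from statistics import mean


def degraded_periods(values: list[float], baseline: float, tolerance: float,
                     window: int = 3) -> list[int]:
    """Indices of periods whose trailing `window` mean exceeds baseline * (1 + tolerance).

    Assumes lower values are better. The earliest index that can be flagged is
    window - 1, since earlier periods lack a full window.
    """
    if window < 1:
        raise ValueError("window must be at least 1")
    threshold = baseline * (1 + tolerance)
    flagged = []
    for end in range(window, len(values) + 1):
        if mean(values[end - window:end]) > threshold:
            flagged.append(end - 1)
    return flagged


# Hypothetical monthly median response times (minutes) after the validation period.
monthly = [21.0, 22.0, 23.0, 24.0, 25.0, 26.0]
flagged = degraded_periods(monthly, baseline=22.0, tolerance=0.10)
print(flagged)  # [5]: the 3-month mean first exceeds 24.2 min in month index 5
```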


The data resulting from the system validation is analyzed, and a validation report is prepared that indicates where needs were met and where deficiencies were identified. Deficiencies can result in recommended enhancements or changes to the existing system that can be implemented in a future upgrade or maintenance release. If an evolutionary development approach is used, the validation results can be a key driver for the next release of the product.
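The validation report described above can be sketched as a comparison of each need's measured outcome with its target, listing deficiencies with a recommended disposition. The `NeedResult` class, the targets, and the disposition text are hypothetical; the direction of each comparison (higher or lower is better) must be stated per measure.

```python
from dataclasses import dataclass


@dataclass
class NeedResult:
    need_id: str
    measure: str
    target: float
    observed: float
    higher_is_better: bool = True

    @property
    def met(self) -> bool:
        if self.higher_is_better:
            return self.observed >= self.target
        return self.observed <= self.target


def validation_report(results: list[NeedResult], dispositions: dict[str, str]) -> str:
    """Plain-text summary: needs met first, then deficiencies with their disposition."""
    met = [r for r in results if r.met]
    deficient = [r for r in results if not r.met]
    lines = [f"Needs met: {len(met)} of {len(results)}"]
    for r in met:
        lines.append(f"  MET  {r.need_id}: {r.measure} = {r.observed} (target {r.target})")
    for r in deficient:
        action = dispositions.get(r.need_id, "disposition not yet assigned")
        lines.append(f"  GAP  {r.need_id}: {r.measure} = {r.observed} "
                     f"(target {r.target}) -> {action}")
    return "\n".join(lines)


results = [
    NeedResult("N-1", "dwell time reduction", target=0.10, observed=0.20),
    NeedResult("N-2", "median incident response (min)", target=20.0, observed=22.0,
               higher_is_better=False),
]
report = validation_report(results, {"N-2": "candidate for next release"})
print(report)
```

Keeping the disposition next to each deficiency supports the use described above, where validation results feed a future upgrade or the next evolutionary release.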

There is great latitude in system validation. The approach depends on institutional agreements (State and FHWA requirements) made on a per-project basis. For a signal upgrade system, a simple before-and-after study on selected intersections may be sufficient for validation. A more complex system may need a number of evaluations, possibly one for each stakeholder element and each subsystem (e.g., camera, CMS, and detection system). Validation may be done on a sample area of the system or comprehensively. It is very important to reach agreement with the stakeholders on these choices during the planning stage.

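The FHWA Regulation does not specifically mention general validation practices to be followed. IEEE Standard 1012 (which addresses verification and validation, including independent verification and validation) and the Capability Maturity Model Integration (CMMI) identify best practices.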

System validation should result in a document trail that includes the Validation Plan, Validation Procedures (if written), and the Validation Results, including the disposition of identified deficiencies. There are several industry and government standard outlines for validation plans, including FIPS Publication 101 and IEEE Standard 1012. Note that both of these standards cover verification and validation plans with a single outline. Consider maximizing commonality between the verification and validation documentation in your project for efficiency.

Table 10 shows the Validation process artifacts, their backward traceability to the need(s) in the Concept of Operations document, and their forward traceability to recommended system changes.

Table 10: Validation traceability

| Traceable Artifacts | Backward Traceability To: | Forward Traceability To: |
|---|---|---|
| Validation Test Plan | Needs in the Concept of Operations | N/A |
| Validation Test Results | Needs in the Concept of Operations | Recommended System Changes, Enhancements, Upgrades |
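The traceability in Table 10 can be checked mechanically once needs, plan entries, results, and recommended changes carry identifiers. This is a minimal sketch; the ID schemes (`N-…` for needs, `VP-…` for plan entries) and the dictionary layout are hypothetical.

```python
def trace_gaps(needs: set[str], plan: dict[str, str], results: dict[str, bool],
               changes: dict[str, str]) -> dict[str, list[str]]:
    """Check the backward and forward links in the validation traceability table.

    needs:   need IDs in the Concept of Operations
    plan:    validation plan entry ID -> need ID it validates (backward trace)
    results: need ID -> whether validation of that need passed (backward trace)
    changes: need ID -> recommended change for a failed need (forward trace)
    """
    covered = set(plan.values())
    return {
        "needs_without_plan_entry": sorted(needs - covered),
        "plan_entries_tracing_to_unknown_need": sorted(
            entry for entry, need in plan.items() if need not in needs),
        "needs_without_results": sorted(needs - set(results)),
        "failures_without_recommended_change": sorted(
            need for need, ok in results.items() if not ok and need not in changes),
    }


needs = {"N-1", "N-2", "N-3"}
plan = {"VP-1": "N-1", "VP-2": "N-2", "VP-9": "N-7"}
results = {"N-1": True, "N-2": False}
gaps = trace_gaps(needs, plan, results, changes={})
print(gaps)
```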

☐ Were all the needs clearly documented?

☐ For each need, goal, and objective, is there an outcome that can be measured?

☐ Are all the stakeholders involved in the validation planning and the definition of the validation strategy?

☐ Are all the stakeholders involved in the in-process validation of the systems engineering products?

☐ Are all the stakeholders involved in the performance of the validation, and is there an agreement on the planned outcomes?

☐ Are there adequate resources to complete the validation?
