6. Hardware Testing

6.1 Overview

This chapter focuses on the testing of hardware or physical devices, including traffic controllers, detection systems, ramp controllers, and dynamic message signs, as well as TMC devices such as workstations, video projectors, and communications equipment. While software is usually part of these devices, since most include computers (microprocessors), it is typically "embedded" (classified as firmware) or an integral part of the device. The hardware test program is intended to cover device testing from prototype to final deployment.

Throughout this chapter, the various phases of testing are presented, from prototype testing during early phases of the product development through site testing, which occurs once the equipment is installed at its final location. The degree of testing required will depend on the maturity and track record or installation history of the device (product), the number of devices purchased, the cost of the testing, and the risk of system failure caused by problems with the device. For general classification purposes, the maturity of the device will be categorized based on its history, which can vary from standard devices (typically standard product), to modified devices, to new or custom devices developed for a specific deployment.

The following sections will discuss the device testing in phases starting with the development of a prototype to the final acceptance testing. In the installation phases and beyond, the test activities are the same regardless of the maturity of the product (new, existing, or modified).

6.2 What Types of Testing Should Be Considered?

The testing for a device or product can be broken down into the following general categories:

- Design verification.

- Functionality.

- Mechanical and construction.

- Standards compliance (NTCIP and others).

- Environmental.

- Serviceability.

Each of these will be discussed to gain a better understanding of what is meant and what is required for each.

The following sections describe the elements of a complete testing program based on the assumption that the device being offered is a new design or custom product, and hence the device should be subjected to all aspects of requirements verification. After this initial discussion of the worst case testing program, this guide will consider what steps can probably be eliminated or minimized for standard products and modified products as described above.

6.2.1 Design Verification

Most procurement specifications will include design requirements for the ITS devices. If these requirements are not explicitly included in the procurement specifications, they may be invoked through referenced standards such as the CALTRANS Traffic Engineering Electrical Specification (TEES) or the equivalent NY State Standards [17]. These requirements typically include such physical issues as choice of hardware (see mechanical and construction below), choice of materials, voltage margins, visibility of indicators, speed of devices, and component thermal restrictions. These design requirements may also include limitations on the mounting of electronic components, insertion and removal force, connector plating, labeling of electronic components, printed circuit board layout markings, and custom components. The agency is cautioned that re-use of "existing" procurement specifications can often lead to references that may be outdated or obsolete, such as retired military ("MIL") standards. In fact, if you use MIL standards, the question of whether your agency is capable of actually performing tests to verify conformance to MIL standards must be asked. If you can't or don't intend to actually test for compliance with these standards, don't put them in the specifications unless you are willing to accept a certificate of compliance from the vendor for these tests.

There may also be outdated restrictions on electronic construction techniques, such as a prohibition of multi-layer printed circuit boards, and requirements for integrated circuit sockets that are no longer valid and would prohibit many of today's newer technologies. It is important that the procurement specifications be reviewed and updated by a person who is knowledgeable of current electronic designs and construction methods to ensure that all external references are valid and current and that manufacturing technology has likewise been updated to reflect current techniques. Because of the specialized skills required, most agencies and many consulting firms will need to supplement their staff by outsourcing this work. When an agency engages a consultant to prepare their procurement specification, how and by whom (e.g., sub-consultant or on-staff engineer) this expertise will be provided should be well defined.

As a case in point, the following are "design requirements" typically found in ITS device procurement specifications and therefore must be verified for product acceptance by either the agency or its consultant. Note in example requirement 1.3.2.3 below, it may be difficult or impossible to read the manufacturing dates on all the PC board components unless they are physically removed and inspected under a magnifying glass or microscope. However, this requirement could be verified during the manufacturing process, before the components are inserted and wave soldered on the PC boards.

REAL WORLD EXAMPLE (taken from CALTRANS TEES, August 2002 [18]):

1.3.2.3 No component shall be provided where the manufactured date is 3 years older than the contract award date. The design life of all components, operating for 24 hours a day and operating in their circuit application, shall be 10 years or longer. [19]

It is recommended that these types of design requirements be validated with respect to the rationale behind why they have been included in the specification (e.g., what value do they add to the product's reliability, electromagnetic compatibility, etc.) and what methods will be used to verify them. Requiring vendor certification that the design meets these requirements and a performance bond with an extended full replacement warranty on the entire device might accomplish the same objective without the agency bearing the cost of extensive design verification testing. For new or custom devices, the device design must be reviewed for conformance to these types of requirements and this type of inspection will require specialized technical expertise to review design drawings, data sheets, etc.

The goal of the design review is to examine areas of the design that may be subjected to electrical, mechanical, or thermal stress. Several areas of a device's electronic design typically warrant close design review; these include the power supply design and the external interfaces for voltage and power dissipation. These areas of a design are often subjected to the most stress due to AC power line regulation and the characteristics of the external devices. Design short cuts in these circuits can affect the long-term reliability of the device. It is also necessary to review the manufacturer's data sheets to ensure that the components being provided are truly rated for operation over the NEMA or TEES temperature ranges; this is often the difference between "commercial" and "industrial" rated components. It is common to include a requirement that no component shall be used in a manner that is inconsistent with the manufacturer's recommendations without explicit written information from the manufacturer stating that the vendor's use is acceptable.

As with the environmental testing (below), it is important that the specifications identify the design requirements and that the test (and inspection) procedure include verification of these requirements. The vendor should be required to assemble a set of manufacturer data sheets for all components and have those included in the reference material provided as part of the test procedure. Often the best approach to this aspect of the "testing" (inspection) of the product is to require that the vendor provide engineering work sheets that show the thermal characteristics of the device, demonstrate how the components are maintained within their junction temperatures for all ambient temperatures required, and how the voltage ratings of the interface devices were determined.
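A thermal worksheet of this kind usually reduces to simple arithmetic. The following is a minimal sketch, assuming the common steady-state model in which junction temperature equals ambient temperature plus dissipated power times junction-to-ambient thermal resistance. The part names, power figures, thermal resistances, and ratings are invented for illustration only; real values must come from the manufacturer data sheets, and the 74 °C ambient is simply a rounded stand-in for a +165° F upper limit.

```python
from dataclasses import dataclass


@dataclass
class Part:
    name: str                 # hypothetical reference designator
    power_w: float            # worst-case dissipation, watts
    theta_ja_c_per_w: float   # junction-to-ambient thermal resistance, deg C per watt
    tj_max_c: float           # maximum rated junction temperature, deg C


def junction_temp_c(part: Part, ambient_c: float) -> float:
    """Steady-state junction temperature: Tj = Ta + P * theta_JA."""
    return ambient_c + part.power_w * part.theta_ja_c_per_w


def worksheet(parts, ambient_c):
    """Return (name, Tj, margin) rows; a negative margin means over the rating."""
    rows = []
    for p in parts:
        tj = junction_temp_c(p, ambient_c)
        rows.append((p.name, tj, p.tj_max_c - tj))
    return rows


# Illustrative values only (assumptions, not data-sheet figures).
PARTS = [
    Part("U1 regulator", power_w=0.5, theta_ja_c_per_w=50.0, tj_max_c=125.0),
    Part("Q3 output driver", power_w=1.2, theta_ja_c_per_w=62.5, tj_max_c=150.0),
]

if __name__ == "__main__":
    for name, tj, margin in worksheet(PARTS, ambient_c=74.0):
        status = "OK" if margin >= 0 else "EXCEEDS RATING"
        print(f"{name}: Tj = {tj:.1f} C, margin = {margin:.1f} C, {status}")
```

A reviewer would typically look hardest at the rows with the smallest margin, since those are the components most likely to be pushed past their rating by enclosure heating that the simple model ignores.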

Experience has shown that when vendors are aware that the device will be inspected for conformance to all of the design requirements, they will revisit their design decisions and/or start the process of submitting a request for an exception. Often, by requiring that the vendor develop the test procedures and inspection "check sheets," they will address potential problems before the testing.

|

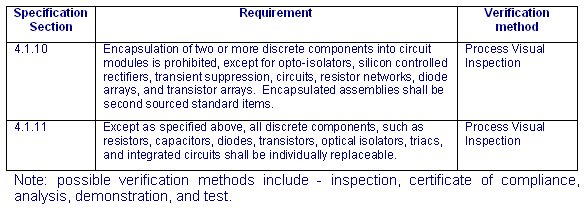

EXAMPLE: During the development of custom telemetry equipment for the Manhattan Signal System, the vendor was required to develop a test procedure that included full and complete verification of all of the design requirements in addition to all of the functional and performance requirements listed in the specifications. Initial test procedures delivered to the agency did not include inspection for the design requirements. When the agency insisted that "all aspects of the specification requirements be demonstrated," the vendor conducted their internal review, which revealed that their power supply design and interface circuitry would not meet the specified requirements. The vendor modified the design and submitted proof that the design met the requirements, and the units have provided long-term (> 15 years) reliable operation. EXAMPLE TEST STEPS TAKEN FROM A DMS FACTORY INSPECTION TEST PROCEDURE: This test procedure included a complete matrix of all of specification requirements and a method used to verify all such requirements. Only two of the requirements are shown here, but the test procedure included design review of the power supplies, driver circuits, and such details as component mounting and printed circuit board labeling.

|

6.2.2 Electrical, Mechanical and Construction Verification

Different devices will have different mechanical and construction requirements. This aspect of the testing program should include conformance to mechanical requirements such as weight, height, depth, and width, where specified. For example, for dynamic message signs, it is important that the vendor's design adhere to the specifications for these parameters because they directly affect the design of the structure and its installation (assuming that the structure was properly designed). For other ITS devices such as traffic controllers or field cabinets, it may be essential that they match an existing foundation size, mounting footprint, or existing cabinet. For some devices such as detectors, communications equipment, and controllers, it may be shelf limitations, rack height and depth, or printed circuit card profile.

Aspects of the mechanical design and construction must also be inspected or tested to ensure that the welding is proper, that the paint adhesion, thickness, hardness, and color are proper, and that the material was properly treated before assembly. Some agencies have developed specific test procedures for parameters such as paint hardness (e.g., writing with an HB pencil, which must not leave a mark) and paint color (the agency's specified color samples are compared to the painted cabinet). Some parameters (e.g., paint thickness) require that the thickness of the paint be measured on a cross section of a sample. In summary, although some of these requirements can be observed, many may require certification by the vendor and an inspection or analysis by a third party laboratory.

Other construction requirements may call for inspection to confirm that all hardware is stainless steel, that prohibited fasteners such as sheet metal screws and pop-rivets are not used, and that the wiring meets the specified requirements for protection against chafing and abrasion, wire harnessing, terminal block types, wire terminations, wire labeling, and wire colors and gauges. A design and construction checklist developed from these specification requirements (and other invoked standards) should be used during this aspect of testing and inspection for compliance verification.

The procurement specifications should specify which party develops the checklist. Typically, the vendor creates this checklist for review and approval by the agency. The agency, in turn, must verify that all of the requirements identified in the procurement specifications (and invoked standards [20]) are included in the checklist. The advantage of requiring that the vendor develop the checklist is that as the vendor develops the list, they are forced to review the details of the specifications and address potential areas of non-conformance before the formal factory testing. There have been instances where vendors have discovered areas of their own non-compliance during the development of this procedure; they can then work with the agency to accept the deviation or alter their design without the impending failure of a factory acceptance test. Sometimes, such deviations are inconsequential in nature and may reflect improvements in the overall product design. By reviewing the issues before the formal test, both the vendor and the agency are able to identify a workable solution without the pressures often associated with a factory acceptance test.
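The agency's check that the vendor's checklist covers every requirement is essentially a set comparison. The sketch below is one possible way to automate it, assuming requirements are tracked by paragraph number and that each checklist entry names one of the four verification methods mentioned in this chapter (inspection, certificate of compliance, demonstration, or test). The function name and the requirement IDs in the usage example are hypothetical.

```python
VALID_METHODS = {"inspection", "certificate of compliance", "demonstration", "test"}


def review_checklist(spec_requirements, checklist):
    """Compare a vendor checklist against the specification requirement IDs.

    spec_requirements: iterable of requirement IDs taken from the specification
                       and any invoked standards.
    checklist: dict mapping requirement ID -> proposed verification method.
    Returns (missing, unknown, bad_method) as sorted lists of IDs.
    """
    spec_ids = set(spec_requirements)
    listed_ids = set(checklist)
    missing = sorted(spec_ids - listed_ids)    # in the spec, absent from the checklist
    unknown = sorted(listed_ids - spec_ids)    # in the checklist, not traceable to the spec
    bad_method = sorted(
        rid for rid, method in checklist.items() if method not in VALID_METHODS
    )
    return missing, unknown, bad_method


if __name__ == "__main__":
    # Hypothetical requirement IDs, for illustration only.
    spec = ["3.1.1", "3.1.2", "3.2.1"]
    vendor_checklist = {"3.1.1": "inspection", "3.2.1": "review", "9.9.9": "test"}
    missing, unknown, bad_method = review_checklist(spec, vendor_checklist)
    print("Missing from checklist:", missing)
    print("Not traceable to the specification:", unknown)
    print("Unrecognized verification method:", bad_method)
```

Any non-empty list is a prompt for discussion with the vendor before the factory test, not an automatic rejection.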

For design requirements such as cabinet doors, one needs to inspect the latches and locks, the gasket material, and the adhesion of same. If the specifications for the cabinet include specific airflow requirements, the vendor should be required to show that the design of the fan, filter, or louver systems is sufficient to provide the required airflow. This must generally be done through airflow calculations (analysis) based on the openings, filter, and fan characteristics. Associated components and design characteristics such as the thermostat, fan bearings, fastener and filter types used, component locations and accessibility for replacement and maintenance, and component labeling should also be inspected.

Verification of water leakage (entry) requirements will generally be a combination of inspection and actual water testing. To test for water leakage, the entire cabinet (e.g., controller, DMS, etc.) should be subjected to water flow based on the specification requirements that reflect the expected environmental conditions and maintenance activities at the installation site. This should include driving rain on all surfaces as a minimum and high pressure washing of sign faces. Some agencies simply subject the cabinet (or sign) to the water spray from a typical garden hose held at a 45-degree angle from the ground, a test that is not quantitative in nature. This test practice may not be sufficient and does not reflect the real world environment. A specific test designed to verify the expected environmental conditions and maintenance activities should be used. For example, cabinets supplied to meet CALTRANS TEES specification requirements are tested by subjecting them to the spray from an irrigation sprinkler of a specific type with a specific volume of water. When performing such a test, blank white newsprint (the type used for packing) can be placed into the cabinet and then inspected for signs of water after the test. Cabinet inspections should include design characteristics such as how various joints are constructed and sealed. The inspection should consider what happens as gaskets age or if the gasket material is damaged. Examine the cabinet design to determine what happens when (not if) water does enter around the gasket area or through the louvers. A good design will anticipate this life cycle problem and will ensure that the mechanical design is such that any water entering the cabinet is safely managed so that it does not damage any of the components or compromise the integrity, operation, or utility of the device.

As noted earlier, the testing procedure can only verify the requirements documented in the specification. In order to require that the vendor conduct these tests and inspections, the procurement specification must address all of these issues in either an explicit manner (e.g., "all hardware shall be stainless steel") or in a functional manner (e.g., "the device shall ensure that as the gasket ages and the product is serviced, water cannot cause damage or improper operation to the device or its components"). The requirements should be explicit and quantifiable such that verification by one of the test methods (inspection, certificate of compliance, demonstration, or test) is not subject to interpretation. In the above example, the requirement phrase "water cannot cause damage or improper operation" is subjective and not easily verified. In general, negative statements in requirements should be avoided because they are difficult or impossible to verify. This example's requirements language should be replaced by a positive statement like "cabinet drainage holes shall be provided to allow for water that intrudes into the interior of the cabinet to self drain; interior components shall be mounted at least 2 inches above the bottom of the cabinet; and all electrical connections shall be covered with a water spray shield."

While you can't make all of your tests totally objective, you have to be careful how you deal with things that could be judged as subjective. In particular, there should be a stated methodology in the procurement specification for how the agency intends to resolve such conflicts. For example, "should a conflict arise with respect to satisfaction of any requirement that may be subject to interpretation, the agency shall have the right to accept or reject the vendor's interpretation and test results offered as proof of compliance, and shall provide a requirement clarification and/or set specific criteria for compliance for a re-test." This type of statement in a procurement specification would serve notice to vendors that they need to thoroughly review the specification requirements and ensure that the requirements are clear, unambiguous, and not subject to interpretation. Any requirements that don't meet this standard should be questioned and clarified in the final procurement documents. This is an area where careful document review before the bid will lead to pre-bid questions for clarification. Then all bidders will understand the intent and intended testing that will be performed.

Some of the requirements may need more explicit mechanical design review to determine if the structural integrity of the product is sufficient (e.g., design of a large walk-in dynamic message sign) and that the welding meets American Welding Society (AWS) standards. This may require that a welding inspection firm be hired to x-ray and verify the welds. For a less costly approach, the agency could require that the vendor provide certification that the welders, who actually constructed the device, have been properly trained and certified by the AWS and that such certification is up to date. An outside inspection firm could be hired to inspect some of the more critical welds and those made at the installation site (if any) to provide added confidence. For installation of an over-the-road device, the agency should request that a State licensed structural engineer be responsible for and oversee the design and seal all structural documents.

6.2.3 Environmental

Environmental testing verifies that the product operates properly under the field conditions of the expected installation site and typically includes temperature, humidity, vibration, shock, and electrical variations. This aspect of testing is usually the most extensive and complex required for any product.

There are a number of industry-accepted references for the environmental and electrical requirements; these include (as examples) NEMA TS2 (and TS4 for DMS), the CALTRANS TEES document and the NY State controller specifications.

All of these documents provide guidelines for temperature and humidity, vibration and shock, and power interruptions, voltage transients, and power line voltages during which the equipment must operate properly. Vendors typically develop a test plan that includes testing performed by an independent test lab based on the NEMA testing profile.

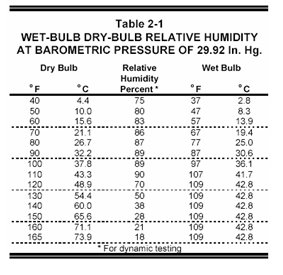

A portion of the test profile in the NEMA TS2 Standard [21] (see figures 6-1 and 6-2) includes a temperature and humidity time profile that lowers the temperature to -30° F and then raises the temperature to +165° F.

Figure 6-1. NEMA Temperature Profile

Figure 6-2. NEMA Relative Humidity Profile

Detailed operational testing is performed at room temperature, low temperature, and high temperature with varying line voltage and power interruptions. Vendors typically subject only a single unit to the testing profile, and the unit is often not continuously monitored; as a result, failures caused by thermal transients during temperature transitions can go undetected. Further, the shock and vibration testing should be performed before the functional and environmental testing. Performing the environmental testing after the shock and vibration testing should reveal any previously undetected problems due to intermittent connections or components that may have suffered damage as a result of the mechanical testing.

For the environmental testing, it is recommended that the procurement specification require the vendor to develop the test plan with references to the specific environmental requirements to be verified and submit this test plan to the agency for review and approval. The rationale for this approach is that the vendor can then develop the test plan based on their available instrumentation and resources. Review of the test plan and associated test procedures is extremely important. A proper review of the test plan requires that the agency (or their representative with the appropriate technical expertise) compare the specifications and additional standards with the proposed test procedures to ensure that all of the requirements are verified. Such a test plan should be submitted well in advance of the planned testing date, and it is recommended that agency personnel and/or their representatives observe the testing program.

The environmental test configuration should include a means to continuously monitor and record the operation of the device under test (DUT). The vendor should be required to develop simulators and monitoring interfaces that will continuously exercise the unit's inputs and monitor all of the unit's outputs. For devices such as traffic controllers, ramp meters, and detector monitoring stations, it is essential that strip chart recorders or similar devices be used to continuously record the operation and that inputs are "stimulated" in a known manner to verify monitoring and data collection calculations. For devices such as DMS, new messages need to be sent to the sign, and pixel testing should be periodically requested. All results should be logged. For all ITS devices, a simulated central computer system needs to continuously (at least once per minute) interrogate the device and verify the proper responses. If the device is intended to support once-per-second communications, then the central simulator should interrogate the device at that rate. All of the device inputs and outputs (e.g., auxiliary functions) must be included in the testing; where measurements are required (e.g., speed traps), the simulator must be able to provide precise inputs to the DUT to verify proper interface timing and calculations.
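The continuous interrogation described above can be sketched as a fixed-interval polling loop that logs every exchange. This is only an illustration under stated assumptions: `poll_fn` and `expected` are hypothetical callables standing in for the real protocol exchange and its pass criteria, and the clock and sleep functions are injectable so the loop can be exercised without waiting in real time.

```python
import time


def poll_device(poll_fn, expected, interval_s, duration_s,
                clock=time.monotonic, sleep=time.sleep):
    """Poll the DUT at a fixed interval and log every response.

    poll_fn: callable returning the DUT response (hypothetical; in a real rig
             it would wrap the actual communications exchange).
    expected: callable taking a response and returning True if it is correct.
    Returns a list of (elapsed_seconds, response, ok) log entries.
    """
    log = []
    start = clock()
    next_poll = start
    while next_poll - start < duration_s:
        wait = next_poll - clock()
        if wait > 0:
            sleep(wait)
        try:
            response = poll_fn()
            ok = bool(expected(response))
        except Exception as exc:   # a timeout or comms fault is itself a logged result
            response, ok = repr(exc), False
        log.append((clock() - start, response, ok))
        next_poll += interval_s    # fixed schedule, so delays do not accumulate
    return log


if __name__ == "__main__":
    # Stand-in device that always answers "OK"; polls every 0.1 s for 0.5 s.
    entries = poll_device(lambda: "OK", lambda r: r == "OK",
                          interval_s=0.1, duration_s=0.5)
    for elapsed, response, ok in entries:
        print(f"{elapsed:6.2f} s  {response}  {'pass' if ok else 'FAIL'}")
```

Setting `interval_s` to 60 corresponds to the once-per-minute minimum, and setting it to 1 corresponds to a device specified for once-per-second communications. Logging failures rather than stopping on them preserves the record of what happened during temperature transitions.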

The test plan must include provisions to verify the test configuration before starting the test. To accomplish this, the test procedure must be reviewed to determine whether all the test conditions can be met and that the appropriate test tools (including software), test equipment, and other resources that will be used to accomplish the test are available and ready to support the test. If the test configuration cannot support the testing requirements for observing, measuring and/or recording expected results as detailed in the test procedure, then the test configuration cannot be verified and the test should not be attempted.

Constructing and configuring a test environment that establishes the appropriate set of test conditions, test stimulus, and measuring and recording equipment while remaining unaffected by the DUT can be difficult. For example, a rapid power interruption and restoration test procedure may cause the DUT to fail, shorting out its power supply and blowing a fuse on the power source side. If the test environment and DUT are fed from the same fused source, the test instrumentation and simulation equipment will also lose power and can't record the event or subsequent effects. Independent power feeds would prevent this problem and expedite testing. When reviewing test procedures, the accepting agency should pay careful attention to what is defined for the test environment and how it is isolated from the DUT.

The environmental test plan must include, as a minimum, the following elements:

- A complete description of the test environment including a diagram showing all wiring and instrumentation, the location of all equipment, etc.

- A detailed description of the techniques that will be used to measure the performance of the DUT. The test procedure should also include verification of calibration certificates for all test equipment used to measure or control temperature, voltage, vibration, shock, and timing (frequency, spectrum, etc.).

- A complete step-by-step procedure (test scenarios) showing how each requirement listed in the specifications (and auxiliary standards which may be invoked) will be verified. For any measurement or printed result, the test procedure should indicate the expected (correct) result; any other result is classified as an error.
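The rule that every step states its expected result, and that anything else is an error, can be captured in a small record per step. The sketch below is an assumption about how such a procedure might be represented; the field names, the step and requirement IDs, and the use of a numeric tolerance are illustrative and not taken from any standard.

```python
from dataclasses import dataclass


@dataclass
class ProcedureStep:
    step_id: str       # step number in the test procedure (hypothetical)
    requirement: str   # specification paragraph being verified (hypothetical)
    expected: float    # expected (correct) result stated in the procedure
    tolerance: float   # allowed deviation stated in the procedure
    units: str


def evaluate(step, measured):
    """Return 'PASS' only when the measurement matches the expected result."""
    if measured is None:
        return "ERROR"   # a missing or unrecorded result is never a pass
    within = abs(measured - step.expected) <= step.tolerance
    return "PASS" if within else "ERROR"


if __name__ == "__main__":
    step = ProcedureStep("7.3", "4.2.1", expected=120.0, tolerance=2.0, units="VAC")
    for reading in (121.5, 123.0, None):
        print(step.step_id, reading, evaluate(step, reading))
```

Writing the tolerance into the procedure before the test removes any argument at the test bench about whether a reading was "close enough."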

There are other requirements for the test procedure that will be discussed later; what the reader should understand is that a simple "follow the NEMA testing profile" is not sufficient. It is up to the vendor to determine how to demonstrate proper operation and how the test will be conducted and to show each step that will be taken to verify the requirement. It is up to the agency to review this material to ensure that the testing is thorough, fully verifies the requirements of the specifications, and, at a minimum, is representative of the extreme field conditions expected. Note that it was the responsibility of the specification writer to ensure that the requirements stated in the procurement specifications (and invoked standards) are representative of the field conditions; if the temperature is expected to be colder than -30° F, but the specification only mandated operation to -30° F, it is not reasonable to require that the vendor test to -50° F. If this is a real requirement, it should have been included in the specifications.

REAL WORLD EXAMPLE: Note that the following are examples of environmental requirements that exceed NEMA requirements; if the vendor only tested to the NEMA standard, it is likely that the product was not subjected to testing for these requirements; therefore, additional testing will be required even if the product has been previously tested to the NEMA standard. Examples of additional requirements include:

In this case, the test procedure must be expanded to show that the equipment will meet these requirements.

6.2.4 Functionality

Functionality testing verifies that the device performs all of the specified operations listed in the requirements. Examples of operational requirements include the number of plans supported by a ramp controller, the number of events in a scheduler, the number of messages for a DMS, the number of fonts for a DMS, accuracy of speed detection algorithms, etc. Functionality includes such capabilities as displaying a message, changing a timing plan, detecting a failure, calculating ramp metering rates, and collecting data.

Functionality testing will be extensive, and it is likely to be incomplete when one examines the complete "tree" of all possible combinations of operational situations. As an example, it is virtually impossible to test for all combinations of timing plan parameters (e.g., cycle, splits, offset), output configurations, phase sequences, communications anomalies, and preemption conditions for a traffic controller. Likewise for a DMS, it is very time consuming to test for all possibilities of animation, fonts, text, special characters, graphics, communications anomalies, message sequencing, and timing. Under these circumstances, one must weigh and manage the risk of having an undiscovered "bug" with the time and budget available and the likelihood that the specific combination will ever be experienced during operation of the device.

When attempting to determine what testing is important, one might consider some of the following:

- What happens when communications are disrupted and restored?

- What happens under a fully loaded Ethernet connection? [Warning, 2070 traffic controllers have a problem with this.]

- How does the device recover from power outages of various types?

- Does it support the correct number of plans, events, fonts, etc.? This is generally checked at the limit conditions (e.g., plan 31, message 63, etc.) and should also be checked to see if it rejects a request for something outside the limits (e.g., 0 and limit +1).

- Does the device keep proper time; that is, does it meet the timing accuracy and drift requirements of the specifications (see 6.2.5)? Does it properly handle the daylight savings time transitions?

- For a dynamic message sign, check basic text rendering, justification, character sizes, flashing timing, multi-phase message timing, scheduler operation, status monitoring, error detection, communications response times (assuming they were specified), and error handling for messages that are too long, improperly formulated, etc.

- For a traffic controller, check for basic operation, phase sequencing, plan transitions, event scheduling, preemption, and detector processing. For a traffic controller, it is likely that the agency has a specific subset of the overall functionality that is critical to its operation; the testing should be structured to test for those specific combinations of operation and features.

- There are a number of deployment issues that need to be addressed, such as: Will there be three thousand of the devices deployed in the field or a dozen? Are the devices easy to access (e.g., 10 intersections along an arterial) or difficult (e.g., a dozen DMS spread over 200 miles of highway)? When a great number of devices will be deployed, or the devices are geographically dispersed and difficult to access, testing must be more intense and robust to reduce the potential for site visits after deployment. After the device is deployed, any hardware modifications become expensive, and their cost can easily exceed the cost of the testing.
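The limit-condition check in the list above (test at the limit, then confirm rejection at 0 and at limit +1) lends itself to a small generated test set. The following is a sketch under the assumption that valid items are numbered from 1 up to the specified limit; `select_fn` is a hypothetical stand-in for whatever command asks the device to select a plan, message, or font and reports whether the request was accepted.

```python
def boundary_cases(lowest_valid, highest_valid):
    """Return (value, should_accept) pairs around the valid range limits."""
    return [
        (lowest_valid - 1, False),    # just below the range, e.g. 0
        (lowest_valid, True),         # first valid item
        (highest_valid, True),        # the limit condition, e.g. plan 31
        (highest_valid + 1, False),   # limit + 1 must be rejected
    ]


def run_boundary_test(select_fn, lowest_valid, highest_valid):
    """Apply each case to select_fn and collect any mismatches.

    select_fn: hypothetical callable that requests an item from the device
               and returns True if the device accepted the request.
    Returns a list of (value, should_accept, accepted) for failed cases.
    """
    failures = []
    for value, should_accept in boundary_cases(lowest_valid, highest_valid):
        accepted = bool(select_fn(value))
        if accepted != should_accept:
            failures.append((value, should_accept, accepted))
    return failures


if __name__ == "__main__":
    # Stand-in device that wrongly accepts plan 0 (illustrative fault).
    faulty_device = lambda plan: 0 <= plan <= 31
    print(run_boundary_test(faulty_device, lowest_valid=1, highest_valid=31))
```

Four requests per parameter is a small cost compared with the exhaustive combinations discussed earlier, and it targets the off-by-one faults that limit handling tends to produce.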

For the device functionality, each operational requirement needs to be addressed with a specific test case. The test cases are likely to be complex; after all, it takes a considerable amount of setup to be able to test some of the functions.

Consider, for example, testing for correct transitions between daylight savings time and standard time. This requires that the controller clock be set to a date (last Sunday in October [23] or first Sunday in April) and a time prior to the 2:00 a.m. changeover time. The controller is then allowed to select and transition to the appropriate timing plan for that time, and the clock time is allowed to advance to the 2:00 a.m. change point. At this point in the test, a check is made to determine whether the controller's clock time was either set back to 1:00 a.m. for the fall changeover or advanced to 3:00 a.m. for the spring changeover. The check should also confirm that the appropriate plan for the new time was selected, that the new cycle length and phases are in effect, and that the transition is made to the correct offsets. Note that for the fall change, when the time is set back, it is important to allow the controller's clock time to advance to (or be reset to near) 2:00 a.m. again and continue advancing without repeating the set back to 1:00 a.m. and the selection of a new timing plan. If the agency routinely includes 2:00 a.m. plan changes, then this needs to be factored into the test procedures.
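The fall changeover check, including the requirement that the set back not repeat on the second pass through 2:00 a.m., can be rehearsed against a toy clock model before it is run on a real controller. The class below is purely a stand-in written for this sketch and does not represent any controller's firmware; the date used in the usage example is an arbitrary illustration, and timing plan selection is left out to keep the example short.

```python
from datetime import datetime, timedelta


class SimulatedControllerClock:
    """Toy local clock with a fall-back rule; a stand-in for the real controller."""

    def __init__(self, local_time, fall_back_date):
        self.local_time = local_time
        self.fall_back_date = fall_back_date   # date of the fall changeover
        self.fell_back = False                 # guards against a second set back
        self.setbacks = 0

    def tick(self, seconds=1):
        """Advance the clock one second at a time, applying the set back once."""
        for _ in range(seconds):
            self.local_time += timedelta(seconds=1)
            at_changeover = (
                self.local_time.date() == self.fall_back_date
                and self.local_time.hour == 2
                and self.local_time.minute == 0
                and self.local_time.second == 0
            )
            if at_changeover and not self.fell_back:
                self.local_time -= timedelta(hours=1)
                self.fell_back = True
                self.setbacks += 1


def check_fall_changeover(clock):
    """Run the clock through 2:00 a.m. twice and report what happened."""
    clock.tick(2)        # 01:59:58 -> 02:00:00, which triggers the set back
    first = clock.local_time.strftime("%H:%M:%S")
    clock.tick(3600)     # run through the repeated hour to 2:00 a.m. again
    second = clock.local_time.strftime("%H:%M:%S")
    return first, second, clock.setbacks


if __name__ == "__main__":
    start = datetime(2005, 10, 30, 1, 59, 58)   # illustrative date and time
    clock = SimulatedControllerClock(start, start.date())
    print(check_fall_changeover(clock))
```

A passing result shows the clock at 1:00 a.m. after the first pass, at 2:00 a.m. after the second, and exactly one set back. In a real test the same two observation points would also record which timing plan was in effect.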

It is also critical that the test environment provide simulation tools (hardware and software) to fully verify the required operations and that those tools be verified for accuracy. For devices such as detector monitoring stations and actuated controllers, it is essential that detector simulators be available to accurately simulate volumes, occupancies, speeds, vehicle length, etc. If a ramp meter is supposed to change plans based on specific traffic conditions, then the test environment must provide a method to accurately simulate the value of the traffic condition (volume, occupancy, etc.) input parameters that are specified to cause a plan change, and it must be verified that the change was to the correct plan. For incident detection algorithms, the simulation environment must be able to present a profile of input data to replicate the anticipated conditions. This includes the ability to simulate detector activations at a given frequency (vehicles per hour) and occupancy based on vehicle length, or to provide trap contact closures to simulate various speeds and vehicle lengths. As an example, detector inputs (contact closures) to a controller or ramp meter can be simulated using a Universal Serial Bus (USB) relay device interfaced to a PC running simple detector simulation test software.
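The timing that such detector simulation software has to generate can be sketched as follows. This is a minimal illustration, not a description of any particular product: the function names are hypothetical, vehicles are assumed to arrive at uniform headways and constant speed, and the closure duration is assumed to equal the time a vehicle takes to travel its own length plus the length of the detection zone. Driving the actual relay hardware is left out.

```python
from dataclasses import dataclass


@dataclass
class PulseTrain:
    headway_s: float   # time between successive vehicle arrivals
    on_time_s: float   # duration of each contact closure
    occupancy: float   # fraction of time the detector is occupied


def presence_pulses(volume_vph: float, speed_fps: float,
                    vehicle_len_ft: float, zone_len_ft: float) -> PulseTrain:
    """Closure timing for one detector at a given volume and speed."""
    headway = 3600.0 / volume_vph
    on_time = (vehicle_len_ft + zone_len_ft) / speed_fps
    if on_time >= headway:
        raise ValueError("requested volume and speed would overlap vehicles")
    return PulseTrain(headway, on_time, on_time / headway)


def trap_closure_times(speed_fps: float, vehicle_len_ft: float,
                       zone_len_ft: float, trap_spacing_ft: float):
    """Return (on, off) times in seconds for the upstream and downstream
    detectors of a speed trap, for a single simulated vehicle."""
    on_time = (vehicle_len_ft + zone_len_ft) / speed_fps
    delay = trap_spacing_ft / speed_fps  # travel time between leading edges
    return (0.0, on_time), (delay, delay + on_time)
```

As a usage example, `presence_pulses(1200, 60, 18, 6)` describes 1,200 vehicles per hour at 60 ft/s: one closure every 3 seconds lasting 0.4 second, or roughly 13 percent occupancy. The values reported by the device under test can then be compared against these known inputs.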

As noted above, the vendor usually provides the test plan, but the agency or its representative needs to work with the vendor to make sure that the test plan is representative of the operation and complexities required for their specific application and as documented in the procurement specification. The procurement specification should provide specific guidance with respect to what features, configurations, and operations must be demonstrated during operational testing and therefore included in the test procedure. The agency has a responsibility to review the test procedure and assure that the test cases proposed cover all of the operational test requirements and are structured to be representative of both planned and future operations as detailed in the specification.

The operational test procedure should subject the DUT to bad data, improperly formed packets, and other communications errors (e.g., interruptions, packet loss) to make sure that it handles the situation in a safe and orderly manner.
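One simple way to produce such inputs is to derive damaged variants from a known-good message. The sketch below is illustrative only: the function name and the set of variants are assumptions, and the frame passed in is treated as an opaque byte string rather than any particular protocol. A fixed seed keeps the variants repeatable so that a failing case can be reproduced.

```python
import random


def malformed_variants(frame: bytes, seed: int = 0) -> dict[str, bytes]:
    """Derive repeatable damaged versions of a known-good frame."""
    if len(frame) < 2:
        raise ValueError("frame must be at least 2 bytes long")
    rng = random.Random(seed)           # seeded so each run is identical
    flipped = bytearray(frame)
    pos = rng.randrange(len(frame))
    flipped[pos] ^= 1 << rng.randrange(8)   # invert a single bit
    return {
        "truncated": frame[: len(frame) // 2],
        "bit_flip": bytes(flipped),
        "oversized": frame + bytes(rng.randrange(256) for _ in range(64)),
        "empty": b"",
        "all_ones": b"\xff" * len(frame),
    }
```

Each variant would be sent to the DUT in turn, followed by a valid request to confirm that the device is still responding and that its configuration is unchanged.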

If NTCIP standards are invoked in the specification and include requirements for default configuration parameters for such anomalies as power and communications outages, the test procedure should include checking that these default configuration parameters have been successfully implemented following the respective outages. Such errors should not cause the DUT to reset to an unknown configuration, halt, or perform unsafe operations. As an example, communications errors should not cause an intersection to go to a flashing condition, and attempts to store parameters that are "out of range" should return an error to the management station rather than store the bad values. Where these conditions are not addressed in the invoked standards, it may be necessary to include some additional provisions in the procurement specifications. As an example, should a traffic controller check the validity of a timing plan when it is being stored in the database or when it is "run"? If the former, the management station is alerted to the bad data and can correct the situation before it affects operation. However, if the data is not verified until it is "run," the traffic controller will not discover the error until it attempts to run the plan, causing disruption to the traffic flow. It is the responsibility of the agency to review the device standards (e.g., NEMA, CALTRANS TEES, IEEE) and the NTCIP standards (e.g., 1201, 1202, etc.) and determine if they meet their operational needs. If not, then the procurement specification must be augmented to address the differences.
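The difference between checking a timing plan when it is stored and checking it when it is run can be illustrated with a short sketch. Everything here is hypothetical: the field names, the limits, and the three rules are placeholders for whatever the procurement specification actually requires, and the "database" is just a dictionary.

```python
CYCLE_RANGE_S = (30, 255)  # hypothetical limits, not taken from any standard


def validate_plan(plan: dict) -> list[str]:
    """Return a list of problems found in a timing plan (empty if none)."""
    errors = []
    cycle = plan.get("cycle_s", -1)
    offset = plan.get("offset_s", -1)
    splits = plan.get("splits_s", [])
    lo, hi = CYCLE_RANGE_S
    if not lo <= cycle <= hi:
        errors.append(f"cycle_s must be between {lo} and {hi}")
    if not 0 <= offset < max(cycle, 1):
        errors.append("offset_s must be >= 0 and less than cycle_s")
    if sum(splits) != cycle:
        errors.append("splits_s must sum to cycle_s")
    return errors


def store_plan(database: dict, plan_id: int, plan: dict) -> list[str]:
    """Validate at store time; bad data is reported and never saved."""
    errors = validate_plan(plan)
    if not errors:
        database[plan_id] = dict(plan)
    return errors
```

With this approach the errors are returned to the management station at the moment of the download, and the previously stored plan (if any) remains in place. A test case for this behavior would attempt to store an invalid plan and then read the database back to confirm that it is unchanged.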

The agency needs to work with the vendor to establish a test environment and test cases that will be representative of the proposed operation, including limit conditions (e.g., number of units on a line, maximum number of messages) and error conditions (power interruptions and communications disruptions).

6.2.5 Performance

Performance testing verifies that the device meets requirements that specifically relate to quantitative (i.e., measurable) criteria and apply under specific environmental conditions. Performance typically deals with timing accuracy, brightness, visibility, and the accuracy of the measurements (e.g., speed, volumes, temperature, RF levels).

The following is an example of performance testing that will test the resolve of both the agency and the vendor, but that is extremely important to ensuring that the implementation performs correctly and serves its intended purpose.

Verifying the accuracy of a traffic controller's internal clock and interval timing is one of the more difficult performance tests to perform. It is important that clock drift, clock accuracy (time-of-day), and the consistency of interval timing be verified to be compliant with the specification requirements. Controller clocks typically synchronize to (track) the zero crossings of the local AC power line voltage and derive their basic once-a-second clock cycle from counting 60 successive zero crossings. The power company maintains the long-term accuracy of the 60-cycle frequency to within a few seconds, making it a very good clock synchronization reference. The power grid manages the addition and subtraction of cycles in a manner that ensures that there is no long-term clock drift; although the clock may wander within limits (up to 22 seconds has been observed), it will not drift beyond those limits. Testing for clock drift in the presence of short-term power interruptions requires very accurate measurements. For example, if the controller's internal clock maintenance software were to "lose" or "gain" even a single 60th of a second with each short-term power interruption (<500 milliseconds), over time the controller's clock would gradually drift from neighboring controllers that may have had a different short-term power interruption history. The resulting error or clock drift will be reflected as a timing plan offset error between adjacent signals, which will compromise the green band.24 This type of error can cause serious damage to arterial progression depending on intersection spacing and speeds.
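The arithmetic behind this concern is simple and can be sketched as follows. The function names are hypothetical, and the sketch assumes that every interruption costs the same number of 60ths of a second, which a real controller may not do.

```python
def drift_from_lost_cycles(interruptions: int,
                           sixtieths_lost_each: int = 1,
                           line_hz: int = 60) -> float:
    """Accumulated clock error in seconds after a number of short
    power interruptions, each losing a fixed count of line cycles."""
    return interruptions * sixtieths_lost_each / line_hz


def offset_error_fraction(drift_s: float, cycle_length_s: float) -> float:
    """Drift expressed as a fraction of the signal cycle length."""
    return (drift_s % cycle_length_s) / cycle_length_s
```

For example, `drift_from_lost_cycles(300)` gives 5.0 seconds, which on a 100-second cycle is an offset error of 5 percent relative to a neighboring controller that did not experience the interruptions. The test equipment therefore needs to resolve a single lost cycle (about 17 milliseconds), not just seconds of drift.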

Testing for controller timing accuracy is far more difficult than simply looking for clock drift over a 24-hour period. It requires an accurate recording device that allows the comparison between the output timing of the DUT and a time reference standard that is accurate to within a few milliseconds. Testing a unit for the accuracy of its internal clock (typically specified as ±0.005 percent) when power is not applied requires a reference to a national standard such as WWV or GPS. Because the AC power line can "wander" several seconds25 during any test period, it is important to account for this effect to ensure the accuracy of the clock drift measurements. Conversely, when monitoring the timing of a device connected to the AC power line, it is important that the reference used for such measurements be calibrated with or linked to the AC power line being provided to the DUT.
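To give a sense of scale for the 0.005 percent figure, the following sketch converts a percentage tolerance into seconds and applies it as a pass/fail check. The function names are hypothetical, the tolerance is assumed to be symmetric, and both elapsed times are assumed to cover the same test interval, with the reference taken from a standard such as GPS.

```python
def allowed_drift_s(duration_s: float, tolerance_percent: float = 0.005) -> float:
    """Maximum clock error permitted over the test duration."""
    return duration_s * tolerance_percent / 100.0


def within_tolerance(dut_elapsed_s: float, reference_elapsed_s: float,
                     tolerance_percent: float = 0.005) -> bool:
    """Compare elapsed time on the DUT clock against the reference."""
    error = abs(dut_elapsed_s - reference_elapsed_s)
    return error <= allowed_drift_s(reference_elapsed_s, tolerance_percent)
```

Over 24 hours (86,400 seconds) the allowance works out to about 4.3 seconds, which is smaller than the wander that has been observed on the AC line. This is why the choice of reference matters as much as the measurement itself.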

Again, the agency needs to work with the vendor to establish an appropriate test environment, specific test measurement and recording equipment, and test cases with well understood and agreed on pass/fail criteria that will verify the quantitative measures specified in the performance requirements.

6.2.6 Standards Conformance

TMS hardware procurement specifications typically reference a number of different device and communication protocol standards and require conformance to them. Representative standards include the NEMA TS2 and CALTRANS TEES traffic controller standards, the advanced transportation controller (ATC) standard, the NEMA TS4 standard for dynamic message signs, and the NTCIP communications standards. The device standards generally describe functionality and some physical features of the device. The NTCIP communication standards define the meaning and format of the data exchanged between the device and a management station (e.g., closed loop master controller, TMC, etc.) and for the most part do not describe the device's functionality. However, the test plan for a TMS that includes these devices and standard references must test the data exchanged, device functionality, and physical features actually delivered. Where a delivered function, feature, or data exchange is required in the procurement specification to conform to a particular standard, the verification test must include steps to confirm that conformance.

For example, consider the NTCIP standards. It is important that the procurement specifications include a complete description of what specific parts of NTCIP standards apply to this procurement and for what devices. Specifications that simply require that the device "shall be NTCIP compliant" are meaningless without an entire series of clarifying statements. It is important to identify the communication media (e.g., analog telephone, Ethernet, EIA-232), the transport to be supported (e.g., point-to-point or point-to-multi-point), and whether the exchanges will be handled on an IP network. In addition, the procurement specifications must identify the application level protocols to be exchanged (reference NTCIP 1103) such as simple network management protocol (SNMP), simple fixed message protocol (SFMP), and simple transportation management protocol (STMP - also described as "dynamic objects"). Finally, the procurement specifications must identify the value ranges for certain variables (e.g., the number of messages to be supported), and which (if any) optional objects are to be supported. These details are representative of the complexity of developing a proper device procurement specification that invokes the NTCIP standards. In order to ensure the interchangeability of the field devices, the agency procurement specification must fully identify all of the NTCIP objects to be supported, the value ranges, any specific optional objects, and how special or proprietary functions are defined in terms of both functionality and communications protocols. The NTCIP standards are a powerful tool for the agency and can ensure interchangeability of field devices, but only if the agency takes the time to fully identify both the functionality and the objects to support that functionality. For those about to develop their first NTCIP-oriented device procurement, it is recommended that they review NTCIP 9001, which is freely available on the NTCIP web site at www.ntcip.org.

There are a number of communications testers and software applications26 that can be used to exchange NTCIP objects with an ITS device, but there is no "generally accepted test procedure" for verifying NTCIP compliance to specific requirements. The Testing and Conformity Assessment Working Group under the NTCIP Joint Committee has produced a document, NTCIP 8007, "Testing and Conformity Assessment Documentation within NTCIP Standards Publications," to assist the NTCIP working groups in developing test plans to be included in the various NTCIP standards. However, there is no assurance that this section will be added to the standards.

There are two different views of NTCIP testing that need to be understood. Since the NTCIP standards generally only define communications objects (parameters), one group feels that NTCIP compliance testing can be performed by verifying that data packets and parameters sent to the device are properly stored and available for retrieval. The second group wants to verify full device functionality based on the exchange of the NTCIP objects. Their claim is that the only way to be sure that the device will perform as expected is to combine both requirements into the NTCIP test plan. Hence any NTCIP test plan must verify both the data exchanges and the device functionality. The latter requirement is probably the most important for any ITS deployment and should be included in any device testing program.

NTCIP compliance testing typically consists of "walking the MIB"27 to verify that the device supports all of the required data objects (and value ranges) of the NTCIP standards referenced in the procurement specification. Testing then uses individual SNMP SET and GET operations to verify that each of the parameters can be stored and retrieved, and that out-of-range data is rejected with the proper error response. If the device has a display, then that display should be used to verify that the parameter sent to the unit is what the unit displays on its front panel; if the unit allows the operator to store parameters, then SNMP GET operations should be performed to verify that the data can be properly retrieved. Any error noted while executing either of these processes means that the device does not conform to the NTCIP standard. There are a number of issues with NTCIP implementation that make this aspect of device testing very time consuming. First, while there are testers for SNMP, most of the available test devices do not handle STMP (dynamic objects), which are typically critical to low-speed communications to actuated signal controllers.28 As a result, the test environment may need to use a sample central system or extend the testers with scripts to verify these objects. Second, many of the vendors have created custom objects and block objects to improve the efficiency of the data transfers. Where they are used, the vendor will typically have a means for verifying them. While the new versions of the standards (e.g., 1202V2) have standardized these blocks, not all vendors will support all versions of the standards. Further, the NTCIP standard only deals with the functionality described in NEMA TS2. When the vendor adds features and functions beyond the basic NEMA standards, then the NTCIP testing must also be extended to verify the additional capabilities. With this type of "extension" (see section 7.2.1.3) comes a potential for conflicts between the standard NTCIP objects, the custom objects, and the block objects. Therefore, it is critical that the NTCIP testing verify the data exchanges using STMP, block objects, single objects, and the custom objects over all of the value ranges identified in the specifications. The vendor should create a test procedure with test cases to verify all of these issues and to demonstrate all functionality in the requirements.
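The store, retrieve, and reject checks described above follow a regular pattern that lends itself to scripting. The sketch below shows that pattern against a simulated device rather than a real SNMP agent; the class, the function, and the object name used in the usage note are all hypothetical, and the status strings merely borrow the SNMPv1 error-status names. In practice the `set` and `get` calls would be replaced by whatever SNMP tool or library the test environment provides.

```python
class SimulatedDevice:
    """Stand-in for a device under test; not a real SNMP agent."""

    def __init__(self, ranges: dict[str, tuple[int, int]]):
        self._ranges = dict(ranges)
        self._values = {name: lo for name, (lo, hi) in ranges.items()}

    def set(self, name: str, value: int) -> str:
        if name not in self._ranges:
            return "noSuchName"
        lo, hi = self._ranges[name]
        if not lo <= value <= hi:
            return "badValue"          # reject and leave stored value alone
        self._values[name] = value
        return "noError"

    def get(self, name: str):
        return self._values.get(name)


def check_object(device, name: str, lo: int, hi: int) -> list[str]:
    """Exercise one object at and just beyond its specified limits."""
    failures = []
    for value in (lo, hi):             # in-range values must round-trip
        if device.set(name, value) != "noError" or device.get(name) != value:
            failures.append(f"{name}: in-range value {value} not stored")
    before = device.get(name)
    for value in (lo - 1, hi + 1):     # out-of-range values must be refused
        if device.set(name, value) == "noError":
            failures.append(f"{name}: out-of-range value {value} accepted")
        if device.get(name) != before:
            failures.append(f"{name}: stored value changed by {value}")
            before = device.get(name)
    return failures
```

For instance, `check_object(SimulatedDevice({"maxMessages": (1, 255)}), "maxMessages", 1, 255)` returns an empty list. The limits passed to `check_object` should come from the procurement specification, not from the device, so that a device supporting a narrower range than required is reported as a failure.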

In addition to simply verifying that the NTCIP objects can be exchanged with the device and that the device performs the proper "function" or reports the proper "status," there is a need to verify the performance on the communications channel. If the agency plans to use low speed communications, then there may be timing requirements for the communications response that should be added to the specifications. Such considerations may be part of the overall system design and not part of the NTCIP standards. However, these must be verified as part of the NTCIP testing. Such timing can be critical to a system deployment and will affect the number of devices attached to a communications channel.
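The relationship between response timing and the number of devices on a channel can be estimated with a short calculation. This sketch rests on several assumptions that would need to be replaced with project values: the function names are hypothetical, each character is assumed to occupy 10 bits on an asynchronous serial line (start bit, 8 data bits, stop bit), devices are polled one at a time, and modem turnaround and propagation delays are folded into a single latency figure.

```python
import math


def poll_time_s(request_bytes: int, response_bytes: int, bps: int,
                device_latency_s: float, bits_per_byte: int = 10) -> float:
    """Time to poll one device: transmission time plus response latency."""
    return (request_bytes + response_bytes) * bits_per_byte / bps + device_latency_s


def max_devices(poll_interval_s: float, per_device_s: float) -> int:
    """Devices that can be polled once each within the polling interval."""
    return math.floor(poll_interval_s / per_device_s)
```

As an example, a 10-byte request and a 20-byte response at 1,200 bits per second with 50 milliseconds of device latency take about 0.3 second, so only three devices can be polled once per second. Measuring the actual response latency of the DUT during testing confirms whether the channel loading assumed in the system design is achievable.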

6.2.7 Maintenance and Serviceability

For maintenance activities to be carried out effectively and efficiently, it is important that the procurement specifications include some serviceability requirements. For example, a specification might require that a technician be able to repair or replace any field-repairable (replaceable) subassembly in 10 minutes without risk of personal injury or damage to the device with the use of common hand tools only. Such a requirement is somewhat ambiguous because, for example, the definition of common hand tools must be established, expected field conditions (e.g., weather, traffic, etc.) must be defined, and even the type of training the maintenance technician must have needs to be specified. Once these clarifications have been established, the agency simply inspects the device and goes through the process of replacing any device that looks difficult to service. The procurement specification should specify that the serviceability tests will be done by agency technicians attempting to perform the maintenance activity following only the maintenance instructions in the vendor's written documentation. If specific training will be required to perform certain maintenance activities, these activities and training courses should be required deliverables defined in the procurement specification. Serviceability tests are an opportunity to verify required maintenance training and both the documentation (which is really part of the serviceability requirements) and the product's compliance with the serviceability requirements. Inadequate training and/or poor documentation will most likely result in the failure of serviceability testing. This can be educational for both the vendor and the agency. It may point out the need to alter the mechanical design; for example, the addition of mounting rails to support heavy rack-mounted equipment.

The fragile nature of the components should be addressed in the maintenance procedures. Examples of these considerations might include anti-static straps, module carriers, and special packaging for the individual subassemblies or replaced components. Other examples of problems that might be discovered during the serviceability testing include tolerances on mechanical assemblies and the complexity of the disassembly and re-assembly process to change a component. It is possible that the mechanical design may need to be altered with such additions as mounting rails, locating studs, or alternate fasteners due to problems with threaded mounts.

The agency technician should go through the complete replacement operation for such commonly replaceable components as matrix panels for a DMS display, power supplies, power distribution assemblies, rack assemblies, fan assemblies, filters, load switches, flashers, and shelf mounted devices.

6.3 When Should Testing Occur?

The previous section dealt with the types of testing that may be part of the hardware test program for ITS devices. This section discusses the chronology of a typical hardware testing program for the procurement of ITS devices. The testing program described herein is based on the procurement of a custom or new device, not previously provided to the agency, and without an extensive deployment history. Depending on the maturity of the product, not all of the test phases described below will be required. Each of the following discussions will provide some guidance on the testing required based on the maturity of the device.

6.3.1 Acceptance of Previous Tests

Although the project specifications may require a full testing program to verify compliance with the procurement specifications, the vendor (contractor) may offer to submit the results of tests performed on the device for a previous project or as part of their product development process in lieu of performing all or part of the specified tests. This typically occurs when the device specified is a standard product or has been proven on previous projects. When developing the procurement specifications, this request should be expected and special provisions should be included that allow the agency to accept or reject the previous test results based on well-defined criteria. These criteria should state that the testing must be on the identical product and must encompass all of the testing required in the project specifications. If a vendor can truly show this to be the case, then the agency should require that the vendor submit the previous test results, all data taken, details of the testing configuration, and the details of the test plan. The agency should also insist on inspecting the actual device that was submitted to the previous testing program to verify that the design is truly the same (trust but verify).

To determine whether the previous results are relevant, one needs to ensure that the current product design is identical to the unit that was previously tested. The determination of "identical," however, can be subjective. Often vendors will modify their design or are forced to change their design to manage component obsolescence, reduce costs, simplify construction, or to meet special project requirements; however, they will still claim that the previous test results are valid. When determining if a re-test is required, one needs to determine if and how the electrical or electronic design has changed, and whether the design changes could adversely affect the characteristics of the device. This includes the following evaluations:

- Thermal characteristics of the device in terms of internal case temperature, adjacent component mounting, and location of ventilation. Could the change affect the temperature of operation of the device?

- Mechanical characteristics to determine if the changes could affect the shock and vibration response of the unit. Examples include location of connectors, component mounting, hardware changes that might cause a change in how the unit handles the mechanical stress.

- Electrical and electronic characteristics to determine if any of the changes could affect the operation of the unit under transient and power interruptions. One also needs to determine if component substitutions compromise the margin requirements contained within the project specifications.

Changes that appear to be relatively harmless could have significant consequences, and often require analysis by an experienced electrical and/or mechanical engineer to determine whether there are issues that would warrant re-testing vs. acceptance of previous test results.

In addition, the agency should not automatically accept the unit because it is on the qualified products list of another agency or State. The procuring agency needs to request and carefully review the full test procedure that was followed, the data that was collected, and the results that were observed. Examples of issues that should raise doubt as to the acceptability of the test results include:

- Anomalies that may have been observed but ignored because they only occurred "once."

- The test environment did not allow the device to be continuously monitored during all temperature transients and transitions.

- Not all of the tests were performed at all temperatures and line voltages. In other words, there is a question as to whether the testing was as thorough as your procurement specifications require.

There have also been instances where anomalies were observed during the testing, but a repeat of the specific test step did not repeat the anomaly so the device "passed" the test and was accepted. The agency should expect to review the cause of the failure (because this was a genuine failure) and determine if this is acceptable operation. Examples of areas which merit close scrutiny include power interruption testing, slowly varying line voltages, timing accuracy, and power line transients. Most ITS devices are expected to operate 24 hours per day 7 days a week without operator intervention; even very infrequent anomalies that require a machine reset or power to be cycled can create operational problems for both the agency and the public depending on the number of units deployed and the frequency of such disturbances.

REAL WORLD EXAMPLE: In a recent review of the test results provided by a vendor to show compliance with the NEMA TS2 environmental requirements, the test report declared successful operation. However, upon close review of the detailed test report and test results, it was evident that one of the tests had failed during the first attempt and then did not fail during a repeat of the test step. In this case, the failure occurred in the monitoring electronics causing the device to report a non-existent failure. Such intermittent problems can be difficult to track and might cause an agency to "disable" the monitoring because of false errors. If a single sample product exhibits a few anomalies during a carefully controlled test, what can be expected when a large number are deployed? Such a situation should require that the vendor conduct a full review of the design of the monitoring circuitry to determine what happened and why and modify the design to avoid such false readings in the future.

6.3.2 Incoming Unit Testing

This is the lowest level of testing for hardware components delivered for installation. It involves a receiving inspection to verify compliance with the Contract Requirements Deliverables List and/or purchasing documentation; completeness of products and supporting documentation (operations and maintenance manuals, installation drawings, and checklists where applicable); workmanship29; damage due to shipping; and stand-alone functionality testing if applicable. The functionality testing may include mechanical and interface testing as described below. Products found to be incomplete, of poor quality workmanship (as defined in the procurement specification), or damaged in shipment, or that did not pass applicable stand-alone functional testing, should not be accepted. The agency should have the right to waive any minor receiving irregularities, such as missing documentation, mounting hardware, or cabling, and grant conditional delivery acceptance (if it is in the interest of the agency to do so), with final delivery acceptance subject to correction of the irregularities and completion of the unit testing within a negotiated time interval.

The agency should prepare a receiving inspection and unit test report and advise the vendor of the delivery acceptance. Hardware components that fail unit testing should be repackaged, with all other materials received, in their original (undamaged) shipping containers and returned to the vendor. If the shipping container has been torn open or shows extensive damage (crushed, water stains, etc.) it should not be accepted from the shipping agent. Note: completion of the unit testing and delivery acceptance will typically trigger the payment provisions of the procurement specification or purchase order. Once the unit has successfully passed unit testing and has achieved delivery acceptance status, it should be formally entered into the TMS equipment inventory and placed under configuration management control.

6.3.3 Interface Testing

Two types of interface testing are necessary: mechanical and electrical.

6.3.3.1. Mechanical

Mechanical interface testing involves inspection and test to ensure that the hardware component fits within the specified space in an enclosure, equipment rack, etc. or on a required mounting bracket and has the required mounting points. It checks for component clearances, especially where the component moves on a mount such as a CCTV camera. It also includes checking to see that all required electrical and communications connectors are accessible, compatible with, and line up with (or are properly keyed to) mating connectors before attempting mate testing. Mate testing ensures that connectors mate and de-mate with ease and do not have to be forced. This is not a functional interface test; it should not be performed with powered electrical or communications connections.

6.3.3.2. Electrical

Electrical interface testing is performed subsequent to successfully passing mechanical interface testing. Electrical interface testing can be initially performed in a test environment without using an actual enclosure, a rack, or the mounting mechanical interfaces. However, electrical interface testing must ultimately be completed on components installed at their operational sites. It includes applying power and exercising the communications interfaces. Testing is performed to determine required compliance with at least some level of operational functionality; i.e., power on/off switches, displays, and keypads are functional and communications can be established with the device.

6.4 Hardware Test Phases

When procuring "new" or "custom" ITS devices that have not been deployed before, it is best to require a comprehensive hardware test program to verify the design and operation of the device from conception to final site operation. Further, by participating in the testing of the device from its design through deployment, the agency becomes familiar with the design, the operation of the device, the complexity of the testing, and the complexity of the device. Depending on the contract, the agency may also be able to work with the vendor during the early phases of the project (i.e., during the submittal phase and the prototype testing) to suggest or support changes to improve the overall cost, utility, and reliability of the product.

In general, the hardware test program can be broken into six phases as described below.

- Prototype testing — generally required for "new" and custom product development but may also apply to modified product depending on the nature and complexity of the modifications. This tests the electrical, electronic, and operational conformance during the early stages of product design.

- Design approval testing (DAT) — generally required for final pre-production product testing and occurs after the prototype testing. The DAT should fully demonstrate that the ITS device conforms to all of the requirements of the specifications.

- Factory acceptance testing (FAT) — generally performs the final factory inspection and testing for an ITS device prior to shipment to the project location.

- Site testing — includes pre-installation testing, initial site acceptance testing and site integration testing. This tests for damage that may have occurred during shipment, demonstrates that the device has been properly installed and that all mechanical and electrical interfaces comply with requirements and other installed equipment at the location, and verifies the device has been integrated with the overall central system.

- Burn-in and observation period testing — generally performed for all devices. A burn-in is normally a 30 to 60 day period during which a new device is operated and monitored for proper operation. An observation period test normally begins after successful completion of the final (acceptance) test and is similar to the burn-in test except it applies to the entire system.

- Final acceptance testing — verification that all of the purchased units are functioning according to the procurement specifications after an extended period of operation. The procurement specifications should describe the time frames and requirements for final acceptance. In general, final acceptance requires that all devices be fully operational and that all deliverables (e.g., documentation, training) have been completed.

The following sections will explain the distinction between these phases and the product development cycle.

6.4.1 Prototype Testing

Prototype testing is intended to be a thorough test of the design of the device, but mechanically less stressful than the design approval testing (DAT) because it does not include the vibration and shock test procedures. The prototype testing should include full electrical and environmental testing to verify both the hardware and software design. It should also include inspection and verification of the serviceability, design requirements, and NTCIP compliance. If the prototype testing is robust and full featured, it is more likely that the DAT will be successful. Further, the prototype testing is the opportunity to verify both the ITS device and the DAT test environment.

Prototype testing is usually carried out on a single unit of each device type and takes place at either the vendor's facility or an independent testing laboratory if the vendor does not have the resources necessary for the testing at their facility. Even if they do have the resources, there are situations when an agency may require the use of an independent lab; for example, to accelerate the test schedule or when there is an overriding product safety issue.

Such a third-party testing laboratory generally provides the measurement instrumentation, test chambers (temperature and humidity), and vibration and shock testing equipment that is expensive to acquire, operate, and maintain. An independent testing laboratory will also have the technical expertise and experience necessary to conduct and monitor the testing, track access to the DUT, analyze the test results, produce a detailed test report, and certify the test operations for which it is responsible. However, the laboratory is not likely to include domain expertise; hence, it will simply run the tests according to the documented test procedures and make the necessary measurements and observations. Thus, the robustness of the test procedure will determine the utility of the laboratory testing. More often, the laboratory simply provides the environmental and measurement equipment while the actual device testing is performed by the vendor.

During prototype testing (for a new product) it is assumed that the vendor may have included some "cut and paste" modifications to their circuit design to correct defects or problems discovered during the early design testing. Thus, although the prototype phase must include the proposed final mechanical packaging, it is generally not required that the prototype meet all of the design and construction requirements identified in the procurement specifications such as hardware, paint color, component mounting, and printed circuit construction practices. Some of the "modifications" can have a tendency to compromise the structural integrity of the unit; hence most prototype testing does not mandate the full vibration and shock testing.

Under some circumstances, the agency may require that the vendor deliver a "mockup" of the proposed mechanical design to allow the agency to evaluate conformance to the serviceability requirements. An example that might warrant an early mockup is a custom traffic controller cabinet design to evaluate clearances and serviceability for equipment that will be housed in the proposed cabinet design. While such a mockup is not part of the prototype testing, it should be included in the procurement specifications if the agency feels it necessary to examine a representative product at an early stage of the design approval process.

While it has been stressed that the prototype testing should include full functional testing of the device, it is not unusual for the vendor to request that the hardware be tested with limited software functionality due to the prolonged software development cycle, changing operational requirements, and the pressures of the project schedule. Under these circumstances, the agency should proceed very cautiously, and only as long as the hardware aspects of the product can truly be separated from the total product. There is a risk that latent problems will necessitate a change to the hardware design, which would require a complete repeat of the testing. Under these circumstances the vendor and the agency must weigh the risk of further delays to the project against the benefits of allowing the vendor to complete the hardware testing and move on toward the DAT. The risk is that in some circumstances, such as the traffic controller timing issues discussed earlier, the hardware design and the software design may be more closely coupled than is apparent. As a result, successful completion of the prototype testing (with limited software functionality) is no assurance of proper operation of the final product or completion of the DAT. It is important that such risks be understood by all involved and that the agency does not assume liability for any of the risks if design changes are necessary. Such risks could include increased cost of the testing program and delays in the completion of the project.

As an example, during the initial field deployment of a new traffic controller design, it was observed that the clocks were drifting by minutes per day; after extensive investigation it took a combination of a hardware change (in this case re-programming a logic array) and a software change to correct the situation. While the software changes were easy to effect (reload the firmware using a USB device), the changes to the logic array were more time-consuming and required that each field device be disassembled to access the programming pins. These issues were not discovered during the DAT because the full controller functionality had not been completed at that time, and the decision was made to allow the vendor to progress even though not all of the required functionality had been completed.
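A drift of this size is large enough to show up in a short bench observation, provided the test procedure compares the device clock against a reference. The following is a minimal sketch of that arithmetic, not part of any procedure described here; the function names and the one-hour observation in the usage note are hypothetical.

```python
SECONDS_PER_DAY = 86_400


def drift_seconds_per_day(ref_elapsed_s: float, device_elapsed_s: float) -> float:
    """Extrapolate the observed clock error to seconds gained (+) or lost (-) per day.

    ref_elapsed_s is the interval measured by a trusted reference clock;
    device_elapsed_s is the same interval as reported by the device under test.
    """
    if ref_elapsed_s <= 0:
        raise ValueError("reference interval must be positive")
    return (device_elapsed_s - ref_elapsed_s) / ref_elapsed_s * SECONDS_PER_DAY


def drift_ppm(ref_elapsed_s: float, device_elapsed_s: float) -> float:
    """Express the same clock error in parts per million."""
    if ref_elapsed_s <= 0:
        raise ValueError("reference interval must be positive")
    return (device_elapsed_s - ref_elapsed_s) / ref_elapsed_s * 1_000_000
```

For instance, a device that gains 2.5 seconds over a one-hour reference interval is drifting at about one minute per day (roughly 694 ppm), which a pass/fail threshold in the test procedure would flag.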

Another related situation arises when the vendor requests to skip the prototype testing and go directly to the DAT. This can work to both the agency's and the vendor's advantage if the vendor is confident in their design and has a well-developed and acceptable test environment. However, if prototype testing was required by the procurement specification, a contract modification will be required to eliminate it, and the agency should carefully weigh the benefits it should receive in return (i.e., reduced schedule and testing costs) against the risk of skipping prototype testing. When prototype testing is skipped, there is a heightened risk that a design defect will be found in the subsequent DAT, necessitating a change to the circuit design that violates one or more aspects of the specifications for construction and materials. Further, the DAT should be performed on several devices, whereas the prototype testing is typically performed on only a single device. In general, the testing program is structured to complete the prototype first because it offers a lower risk to the vendor by allowing construction practices that are not acceptable at the DAT phase. In addition, it provides an opportunity for the agency to evaluate the testing plan and environment.

It is also possible that the vendor may fail (what the vendor considers to be) some minor aspect of the prototype testing and request permission to go directly to the DAT phase of the project. As long as the agency understands the risk and that the risk lies with the vendor and not the agency, such requests should be considered if there is a benefit to the agency (i.e., schedule, cost) to do so and the request is contractually acceptable. Procurement specifications should limit the test period and the number of unsuccessful vendor test attempts allowed without incurring significant penalties, such as withholding progress payments, liquidated damages, and canceling the contract for non-performance. The limited test period and limited number of test attempts help to contain the agency's costs of participating in testing. Note: if the vendor is allowed to skip prototype testing or the prototype is accepted without satisfying all aspects of the required testing, any contractual remedies the agency may have included in the procurement specification to cover prototype test problems are no longer available.

6.4.2 Design Approval Testing

The design approval testing is the next stage of the device testing and is intended to verify that the complete product (in final production form), including packaging, is in full compliance with the specifications. Typically, if the vendor "got it right" during the prototype testing and there were no changes to the mechanical design or construction, then the DAT should be a routine exercise. The only additional testing performed at the DAT is for vibration and shock following either the NEMA or the CALTRANS procedures, depending on which was designated in the procurement specification.

For the DAT, the testing should be performed on multiple units that are randomly selected by the agency from the initial (pilot) production run of the devices. All of the testing and inspection is the same as that for the prototype, except for the addition of the vibration and shock tests that should be conducted before the start of the environmental and functional testing. Depending on the number of units being purchased, the agency may wish to insist that a minimum of two (2) and up to five (5) units be subjected to the full testing suite. For a typical procurement of 200 or more, it is recommended that at least 5 units be tested.
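The unit selection described above can be sketched as follows. This is an illustration only: the text fixes just the endpoints (a minimum of 2 units, and at least 5 for procurements of 200 or more), so the scaling used between those endpoints is an assumption, as are the function names and the idea of recording a seed so the draw can be reproduced later.

```python
import random
from typing import List, Optional, Sequence


def dat_sample_size(units_purchased: int) -> int:
    """Number of units to subject to the full DAT suite.

    From the text: a minimum of 2, and 5 for procurements of 200 or more.
    ASSUMPTION: sizes between those endpoints are scaled linearly.
    """
    if units_purchased < 1:
        raise ValueError("at least one unit must be purchased")
    if units_purchased >= 200:
        size = 5
    else:
        size = 2 + (3 * units_purchased) // 200  # assumed scaling, not from the text
    return min(size, units_purchased)  # cannot test more units than exist


def select_dat_units(serial_numbers: Sequence[str], seed: Optional[int] = None) -> List[str]:
    """Randomly pick DAT units from the pilot production run.

    Passing a seed (hypothetical practice) makes the draw repeatable for the record.
    """
    rng = random.Random(seed)
    count = dat_sample_size(len(serial_numbers))
    return sorted(rng.sample(list(serial_numbers), count))
```

An agency might call `select_dat_units(pilot_run_serials, seed=20240315)` at the vendor's facility and record both the seed and the resulting serial numbers in the test report.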

The procurement specifications should reserve the right to require a repeat of the DAT if there is a change to the design or components. If changes are required, then the agency (or its consultant) needs to analyze the nature of the changes and determine if the full battery of testing is necessary or whether a subset of the original testing can be performed to verify the effects of the change. In some cases, no further retesting may be necessary.

Another issue to be addressed during the DAT is the overall integration testing and the availability of the other system components. If the field device is to be connected to a large-scale central system, then it is best to bring a portion of the central system to the DAT (or extend a communications link to the test facility) to verify the communications aspects of its operation, such as object encoding, protocol support, and performance timing. Where this is not feasible, the vendor should be required to develop and demonstrate a central system simulator that provides the data streams specified and measures the performance and response from the device.
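The core of such a simulator is a loop that sends the specified requests and times each response. The sketch below shows only that measurement loop under stated assumptions: the `send` callable stands in for whatever communications link is actually used, the request bytes are placeholders rather than real NTCIP object encodings, and `fake_device` is a hypothetical stand-in for the device under test.

```python
import time
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class PollResult:
    request: bytes
    response: bytes
    elapsed_s: float
    within_limit: bool


def run_poll_sequence(
    send: Callable[[bytes], bytes],
    requests: List[bytes],
    max_response_s: float,
) -> List[PollResult]:
    """Send each request in order and record the response and its round-trip time."""
    results = []
    for request in requests:
        start = time.perf_counter()
        response = send(request)  # blocks until the device answers
        elapsed = time.perf_counter() - start
        results.append(PollResult(request, response, elapsed, elapsed <= max_response_s))
    return results


def fake_device(request: bytes) -> bytes:
    """Hypothetical stand-in for the device under test; simply acknowledges the request."""
    return b"ACK:" + request
```

In a real simulator, `send` would wrap the serial or network link to the device, and the recorded `PollResult` entries would be compared against the response content and timing required by the specification.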

For certain devices such as dynamic message signs, the prototype and DAT may be waived or required only for a sample system (e.g., the controller and one or two panels) because of the expense and availability of test chambers large enough to test a complete sign. This will depend on the maturity of the device, the number being purchased, and the thoroughness of the vendor's previous testing program. However, when the formal environmental testing is waived for devices such as DMS, it is recommended that the power interruption testing, transient testing, and voltage variation testing be performed for the complete DMS as part of the factory acceptance test.

Note that we have continued to stress the need to verify operation under transient power conditions. Since all of the ITS devices are expected to operate 24x7x365 without operator intervention, the goal is to ensure that typical power line anomalies do not necessitate the visit of a field technician to "reset" the device, clear a conflict monitor, or reset a circuit breaker. Such situations can compromise the integrity of the overall TMS at times when their use may be mission critical (e.g. evacuation during storms, evening rush hour during thunderstorms).

6.4.3 Factory Acceptance Testing

The factory acceptance test (FAT) is typically the final phase of vendor testing that is performed prior to shipment to the installation site (or the agency's or a contractor's warehouse). For a complete DMS, the FAT serves as the agency's primary opportunity to view and review the operation of the device for any special features, and to inspect the device for conformance to the specifications in terms of functionality, serviceability, performance, and construction (including materials). The DMS FAT should include all the elements of a device DAT except the environmental (temperature and humidity), vibration, and shock testing.

As with the prototype testing and the DAT, the vendor should provide the test procedure for the FAT. The FAT should demonstrate to the agency that the operation of the device, the quality of construction, and the continuity of all features and functions are in accordance with the specifications. If the device (or its constituent components) passed a DAT, then the FAT procedure should verify the as-built product is "identical" to the device (or components) inspected and tested during the DAT.

When the DAT was not performed for the complete device, such as a DMS, the FAT must include inspection and verification of the complete assembled device (with all its components) including those specification requirements (physical, environmental, functional, and operational) that could not be verified at the DAT. A DMS will have to be disassembled after testing for shipment to the installation site. It is important to ensure at the FAT that the complete list of deliverables, including all the specified DMS components, cabling, fasteners, mounting brackets, installation checklist, drawings, manuals, etc., is verified for completeness and accuracy.

For more modest devices such as ramp controllers, traffic controllers, and ramp metering stations, the FAT inspection should include doors, gaskets, backplane wiring, cable assemblies, hardware (nuts and bolts), materials, and the list of deliverables such as load switches, prints, flashers, etc.

Each unit must be subjected to the FAT before being authorized for delivery. Once the FAT has been completed, the unit is deemed ready for transport to the installation site. For some devices, such as dynamic message signs and the first units of custom and new products, the agency should plan on attending the test and being part of the final testing and inspection procedure. For other standard devices, such as CCTV cameras, traffic controllers, and data collection stations, the vendor will conduct the FAT in accordance with the procurement specification without the presence of agency personnel. The vendor should be required to provide a detailed description of the FAT procedure used; keep accurate records of test results including date, time, device serial number, and all test equipment, test data, etc. for each unit shipped; and identify the person(s) responsible for actually performing and verifying the test. While agency attendance at an FAT is not usually included for production devices, the procurement specification should require the vendor to notify the agency 10 days prior to when the tests will occur, and the agency should reserve the right to attend any and all tests. Remember, the goal of the FAT is to ensure that the device has been built correctly, that all functions and interface circuits function properly, and that all of the Quality Assurance requirements of the specifications have been fulfilled.
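The per-unit record keeping and the notification deadline described above might be captured in a structure like the one sketched below. The field names are hypothetical and simply mirror the items listed in the text; treating the 10-day notice as calendar days is an assumption, since the text does not say whether calendar or working days are intended.

```python
from dataclasses import dataclass, field
from datetime import date, datetime, timedelta
from typing import Dict, List


@dataclass
class FatRecord:
    """One FAT record per unit shipped (field names are illustrative)."""
    serial_number: str
    tested_at: datetime            # date and time of the test
    test_equipment: List[str]      # instruments used for this unit
    performed_by: str              # person who ran the test
    verified_by: str               # person who verified the result
    results: Dict[str, bool] = field(default_factory=dict)  # test step -> pass/fail

    def passed(self) -> bool:
        """A unit passes only if at least one step was recorded and none failed."""
        return bool(self.results) and all(self.results.values())


def latest_notice_date(test_date: date, notice_days: int = 10) -> date:
    """Last date on which the vendor can notify the agency.

    ASSUMPTION: the notice period is counted in calendar days.
    """
    return test_date - timedelta(days=notice_days)
```

A unit whose record shows any failed step, or no recorded steps at all, would not be authorized for delivery under this sketch.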