5. Planning a Project Test Program

5.1 Overview

This chapter presents some of the considerations necessary for planning a project test program. As stated previously, several levels of testing are necessary to verify compliance with all of the system requirements. The next section presents the building block approach to testing and discusses each testing level in detail. The concept of product maturity is then introduced, with emphasis on how the maturity of ITS products can affect the level and complexity of testing needed. Lastly, the chapter discusses how the choice of custom or new products affects the risks to project schedule and cost.

5.2 A Building Block Approach

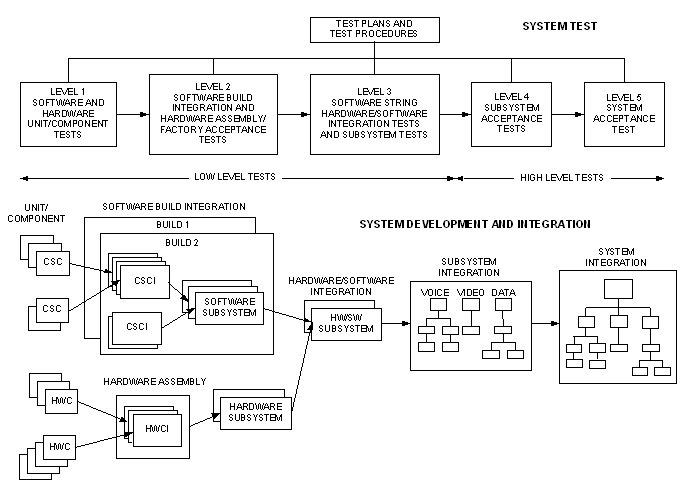

Figure 5-1 shows the building block approach to testing. At the lowest level (1), hardware and computer software components are verified independently. Receiving inspections, burn-in,14 and functional checkouts are performed on individual hardware components by the respective vendors and installation contractors as appropriate. A functional checkout is performed for all commercial-off-the-shelf (COTS) software and hardware components, and pre-existing systems or equipment (if any) to be incorporated into the system. This testing is to ensure that the item is operational and performs in accordance with its specifications.

At the next level, software components are combined and integrated into deliverable computer software configuration items that are defined by the contract specification. A software build, typically consisting of multiple configuration items, is loaded and tested together on the development computer system. Hardware components are combined and integrated into deliverable hardware configuration items, also defined by the contract specification. Hardware acceptance tests, which include physical configuration audits and interface checkouts, are performed on the hardware configuration items at the factory, as they are staged at an integration facility, and/or as installed and integrated at their operational locations.15

At level 3, the system computer software configuration items and the hardware configuration items are integrated into functional chains and subsystems. The integrated chains and subsystems provide the first opportunity to exercise and test hardware/software interfaces and verify operational functionality in accordance with the specifications. Subsystem integration and subsystem-to-subsystem interface testing is conducted at level 4 to verify that the physical and performance requirements, as stated in the contract specifications, have been met for the complete hardware and software subsystems comprising a functional element.

System integration and test is performed at level 5 to verify interface compatibility and design compliance of the complete system and to demonstrate the operational readiness of the system. System-level integration and test is the responsibility of the acquiring or operations agency. Final system acceptance is based on review and approval of the test results and formal test reports submitted to the acquiring or operations agency.

The system tests at levels 4 and 5 should be accomplished following successful completion of all relevant lower-level testing. Level 4 of the system test follows the successful completion of level 3 tests associated with system configuration; startup and initial operations; and communications network connectivity between the field devices, communication hubs, and the TMC(s). System software functions, relational database management system (RDBMS) functions, and those system security functions associated with access authorization, privileges, and controls are also verified at level 3. Level 4 testing comprises integration and functional tests of the major subsystems and the connectivity between multiple TMCs (if applicable) and associated field equipment, including the functions of the interface and video switching, routing, recording, retrieval, playback, display, and device control (as appropriate). Level 5 testing should include an operational readiness test that verifies the total system operation and the adequacy of the operations and maintenance support training over a continuous period of multiple days. The operational readiness test should include functional tests of the interoperability with multiple TMCs (if applicable), failover of operations from one TMC to another, and other higher-level functionality such as congestion and incident detection and management functions. At each level, system expansion performance should also be verified with the appropriate simulators to ensure that the level of testing is complete and that the system will meet its requirements under fully loaded conditions.
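The gating rule described above (a level is tested only after the lower levels have passed) can be sketched as a small tracking structure. This is a minimal illustration, not a prescribed tool: the class name `BuildingBlockProgram`, its methods, and the level labels are hypothetical, and the sketch assumes that every lower level is "relevant" to the level being started, which a real test plan may relax.

```python
from dataclasses import dataclass, field

# Level labels paraphrase the text; the data model itself is an assumption.
LEVELS = {
    1: "Hardware and software component checkout",
    2: "Configuration item integration and acceptance",
    3: "Functional chain and subsystem integration",
    4: "Subsystem-to-subsystem integration",
    5: "System integration and operational readiness",
}


@dataclass
class BuildingBlockProgram:
    """Tracks which test levels have been successfully completed."""

    passed: set = field(default_factory=set)

    def can_start(self, level: int) -> bool:
        """A level may start only when every lower level has passed."""
        if level not in LEVELS:
            raise ValueError(f"unknown test level: {level}")
        return all(lower in self.passed for lower in range(1, level))

    def record_pass(self, level: int) -> None:
        """Record a successful level, refusing to skip incomplete lower levels."""
        if not self.can_start(level):
            missing = [n for n in range(1, level) if n not in self.passed]
            raise RuntimeError(
                f"level {level} is blocked by incomplete levels {missing}"
            )
        self.passed.add(level)
```

In use, a program that has recorded passes for levels 1 through 3 may start level 4 but not level 5, mirroring the sequence described in the text.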

It should also be noted that the testing program must include infrastructure-induced anomalies and failures such as power interruptions, connection disruption, noisy communications (lost packets, poor throughput), improper operator interactions (which might actually occur with inexperienced users or new users), device failures, etc. These "anomalies" do and will occur over time, and it is important that the system responds as specified (based on the procurement requirements).

Figure 5-1. Building Block Approach to Testing

5.3 The Product Maturity Concept

The maturity of the software and hardware ITS products selected for deployment in your TMS will affect the type and complexity, and therefore the cost, of the testing necessary to verify compliance with your specific project requirements.

The following will establish a definition for the maturity of the ITS product and provide some guidance as to when it should be classified as standard, modified, or new/custom. Even though these classifications are very similar as applied to hardware and software products, they are presented separately because of the specific examples given.

5.3.1 Hardware

5.3.1.1. Standard Product

This type of device uses an existing design and the device is already operational in several locations around the country. It is assumed that the hardware design is proven and stable; that is, that general industry experience is positive. Proven, stable hardware design means other users of this same product report that it is generally reliable16 and meets the functionality and performance listed in the published literature (note that it is recommended that product references be contacted to verify manufacturer claims of proven reliability).

This device may be from the manufacturer's standard product line or it may be a standard product that has previously been customized for the same application. The key factor is that the device has been fully designed, constructed, deployed, and proven elsewhere prior to being acquired for this specific project. The product will be used "as-is" and without any further modification. It is assumed that the user is able to "touch," "use," or "see" a sample of the device and is able to contact current users of the device for feedback on the operation and reliability of the device.

It is important that the environmental and operational use of the device is similar in nature to the intended operation; devices with experience in North Dakota may have problems when deployed in Arizona, or devices generally installed on leased, analog telephone lines may experience a different result when connected to a wireless or other IP network. It is also assumed that the device is being purchased or deployed without design or functional changes to its operation (i.e., no firmware changes). Examples include typical traffic controllers, loop detectors, CCTV equipment, video projectors, workstations, monitors, and telephone systems. Such devices have typically been subjected to NEMA TS2 (or the older TS1) testing specifications or have been placed on a Qualified Products List (QPL) for some other State (e.g., Caltrans, Florida DOT). In general, the acquiring agency should be willing to accept the previous unit test results, provided they were properly done and are representative of expected operating conditions. The agency can then concentrate on delivery inspection, functional tests, and installation testing of these standard products. If the agency is in doubt, it is recommended that the results from previous tests be requested and reviewed to verify the above.

5.3.1.2. Modified Standard Product

This type of device is based on an existing device that is being modified to meet specific functional and/or environmental or mechanical requirements for this procurement. It is assumed that the modifications are relatively minor in nature, although that is a judgment call and difficult to quantify.

Several examples of minor changes and their potential implications should clarify why these changes are difficult to quantify. A vendor may increase the number of pixel columns in a dynamic message sign (DMS). This change could impact overall sign weight, power consumption, and reliability, therefore affecting other sign systems. A DMS vendor may change the physical attributes of the design (perhaps the module mounting) but keep the electronics and other components the same. This change can also impact sign weight and mounting requirements. A DMS vendor may install the same electronics onto a new circuit board layout. This change may impact power consumption, heat generation, and electrical "noise" within the DMS. In all cases, in order to be classified as a modified standard product, the base product being modified must meet the criteria for a standard product as described above. Although the changes are minor, they may create "ripple effects" that impact other design characteristics.

The type of testing that the unit should be subjected to will vary depending on the nature of the modifications. Mechanical changes may necessitate a repeat of the vibration and shock testing; electrical or electronic changes may require a complete repeat of the environmental and voltage testing; and functional changes such as new display features (e.g., support for graphics on a DMS) may necessitate a complete repeat of the functional testing (but not a repeat of the environmental testing). Members of the design team should work closely with the test team to build an appropriate test suite to economically address the risk associated with the design modifications.
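The pairing of modification type and repeat testing described above can be captured as a simple lookup that the design and test teams use as a starting point. The sketch below is illustrative only: the names `Change`, `RETESTS`, and `retest_plan` are hypothetical, and the mapping encodes only the three cases named in the text. Because the text says each change "may" necessitate retesting, the output is a candidate list for review, not a required test suite.

```python
from enum import Enum


class Change(Enum):
    """Kinds of modification named in the text (labels are assumptions)."""

    MECHANICAL = "mechanical"
    ELECTRICAL = "electrical or electronic"
    FUNCTIONAL = "functional"


# Candidate repeat tests per change type, taken from the paragraph above.
RETESTS = {
    Change.MECHANICAL: {"vibration", "shock"},
    Change.ELECTRICAL: {"environmental", "voltage"},
    Change.FUNCTIONAL: {"functional"},
}


def retest_plan(changes):
    """Return the sorted union of candidate repeat tests for the given changes."""
    plan = set()
    for change in changes:
        plan |= RETESTS[change]
    return sorted(plan)
```

A purely functional change yields only functional retesting, consistent with the note that environmental testing need not be repeated in that case.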

5.3.1.3. New or Custom Product

This type of device is likely to be developed and fabricated to meet the specific project requirements. Note that "new" may also mean a first-time product offering by a well-known vendor, or that a vendor is entering the market for the first time.

The device's design is likely based on a pre-existing design or technology base, but its physical, electrical, electronic, and functional characteristics have been significantly altered to meet the specific project requirements. Examples might include custom ramp controllers, special telemetry adapters, and traffic controllers in an all new cabinet or configuration. It is assumed that the design has not been installed and proven in other installations or that this may be the first deployment for this product.
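The three hardware classifications can be summarized as a decision rule. The following is a minimal sketch under stated assumptions: the `Product` fields and the `classify` function are hypothetical, and the rating of a modification as "minor" or "significant" remains the judgment call the text describes; the code only records the outcome of that judgment.

```python
from dataclasses import dataclass

ALLOWED_MODIFICATIONS = {"none", "minor", "significant"}


@dataclass
class Product:
    # True if the base design is deployed and proven in a similar environment.
    proven_elsewhere: bool
    # Extent of change for this procurement: "none", "minor", or "significant".
    modification: str


def classify(product: Product) -> str:
    """Classify a product as standard, modified standard, or new/custom."""
    if product.modification not in ALLOWED_MODIFICATIONS:
        raise ValueError(f"unknown modification level: {product.modification}")
    # An unproven base design, or a significant alteration, is new/custom.
    if not product.proven_elsewhere or product.modification == "significant":
        return "new/custom"
    # A proven base with minor changes is a modified standard product.
    if product.modification == "minor":
        return "modified standard"
    # A proven product used as-is is a standard product.
    return "standard"
```

Note that a product with minor changes to an unproven base design is classified as new/custom, since a modified standard product requires a base that meets the standard product criteria.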

5.3.2 Software

5.3.2.1. Standard Product (COTS)

Standard product or commercial-off-the-shelf (COTS) software has a design that is proven and stable. The general deployment history and industry experience have been positive, and those references contacted that are currently using the same product in the same or a similar environment find that it is generally reliable and has met the functionality and performance listed in the published literature.

It may be a custom, semi-custom or a developer's standard software product, but it has been fully designed, coded, deployed, and proven elsewhere prior to being proposed for this specific project. It is assumed that the user is able to "use" or "see" a demonstration of the product and is able to contact current users of the product for feedback on its operation and reliability.

Operating systems, relational databases, and geographical information systems software are examples of standard products. ATMS software from some vendors may also fall under this category.

5.3.2.2. Modified Standard Product

This type of software is based on an existing standard product that is being modified to meet specific functional and/or operational requirements for this procurement. It is assumed that the modifications are relatively minor in nature, although all differences should be carefully reviewed to determine the extent of the differences and whether the basic design or architecture was affected.

Examples of minor changes include modifying the color and text font style on a GUI control screen or changing the description of an event printed by the system. Sometimes the nature of the change can appear minor but have significant effects on the entire system. For example, consider a change to the maximum number of DMS messages that can be stored in the database. Increasing that message limit may be considered a major change since it could impact the message database design and structure, message storage allocation, and performance times for retrieving messages. On the other hand, reducing that limit may only affect the limit test on the message index. In all of these cases, in order to be classified as a modified standard product, the base product must meet the criteria for a standard product as described above.

The type of testing that this class of products should be subjected to will vary depending on the nature of the modifications. Changes to communication channel configurations to add a new channel type or a device on an existing channel may necessitate a repeat of the communication interface testing; functional changes such as new GUI display features (e.g., support for graphics characters on a DMS) may necessitate a repeat of the functional testing for the DMS command and control GUI. Members of the design team should work closely with the test team to build an appropriate test suite to economically address the risk associated with the design modifications.

5.3.2.3. New or Custom Product

This class of software is a new design or a highly customized standard product developed to meet the specific project requirements. Note that this may also be a new product offering by a well-known applications software developer or ITS device vendor, or a new vendor who decides to supply this software product for the first time. The vendor is likely to work from an existing design or technology base, but will be significantly altering the functional design and operational features to meet the specific project requirements. Examples might include custom map display software to show traffic management devices and incident locations that the user can interactively select to manage, special routing, porting to a new platform, large screen display and distribution of graphics and surveillance video, and incident and congestion detection and management algorithms. It is assumed that the design has not been developed or deployed and proven in other installations or that this may be the first deployment for a new product.

For software, agencies are cautioned that the distinction between "modified" and "new" can be cloudy. Many integrators have claimed that the work is a "simple port" or a re-implementation with a few changes, only to find that staff-years had to be devoted to the changes and to the testing program for the resulting modified software.

5.4 Risk

The risk to project schedule and cost increases when utilizing custom or new products in a system. There are more "unknowns" associated with custom and new products simply because they have not been proven in an actual application or operational environment, or there is no extensive track record to indicate either a positive or negative outcome for their use or effectiveness. What's unknown is the product's performance under the full range of expected operating and environmental conditions and its reliability and maintainability. While testing can reduce these unknowns to acceptable levels, that testing may prove to be prohibitively expensive or disproportionately time consuming. Accepting a custom or new product without appropriate testing can also result in added project costs and implementation delays when these products must be re-designed or replaced. These unknowns are real and translate directly into project cost and schedule risk. It is imperative that the design and test teams work together to produce test suites that economically address the risk associated with the new or custom designs.

When planning the project, risk areas should be identified early and contingency plans should be formulated to address the risks proportionately. Risk assessments should be performed that qualitatively assess the probability of each risk and the costs associated with implementing remediation measures. Remediation measures can vary widely and include increased testing cycles, additional oversight and review meetings, and more frequent coordination meetings.

After identifying the risks and remediation costs, the project funding and schedules can be adjusted to provide a reasonable level of contingency for the risks. Shifting risk to another organization manages the risk but does not eliminate it. Costs will follow the shifted risks; however, some level of management costs will remain with the acquiring agency.
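One way to turn a qualitative risk assessment into a contingency figure is to weight each remediation cost by a rating-based factor and sum the results. The sketch below is an assumption-laden illustration, not a method prescribed by this handbook: the `Risk` fields, the `contingency` function, and especially the numeric weights in `LIKELIHOOD_WEIGHT` are hypothetical, and an agency would substitute its own rating scale.

```python
from dataclasses import dataclass

# Hypothetical weights for qualitative likelihood ratings (assumed values).
LIKELIHOOD_WEIGHT = {"low": 0.1, "medium": 0.3, "high": 0.6}


@dataclass
class Risk:
    description: str
    likelihood: str          # "low", "medium", or "high"
    remediation_cost: float  # estimated cost of the remediation measures


def contingency(risks):
    """Sum each remediation cost weighted by its qualitative likelihood."""
    total = 0.0
    for risk in risks:
        if risk.likelihood not in LIKELIHOOD_WEIGHT:
            raise ValueError(f"unknown likelihood rating: {risk.likelihood}")
        total += LIKELIHOOD_WEIGHT[risk.likelihood] * risk.remediation_cost
    return total
```

A register built this way makes the link between product maturity and budget visible: a new or custom product would typically carry a higher likelihood rating, and therefore a larger share of the contingency, than a standard product.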

5.5 Summary

This chapter has described the building block approach to testing and the testing levels in a way that should help in planning a project test program. It introduced the product maturity concept and showed how the maturity of the products selected for use in your TMS affects what testing must be done and how. These concepts directly impact test planning. Lastly, this chapter considered how the risk to project schedule and cost is affected by the maturity of the products and their testing program requirements.

14 Burn-in is a procedure used to detect and reject products that fail early in their operational life due to otherwise undetected manufacturing process or component defects. The procedure involves exposing the product to the full range of expected operational and environmental conditions for a continuous period of time that exceeds its early failure period (also known as the infant mortality period). Products that continue to function properly following the burn-in period are accepted for installation and use; those that fail are rejected as unsuitable. The product manufacturer typically conducts the burn-in procedure prior to shipment. However, a large assembly of different components from different manufacturers, such as a DMS, may require burn-in after site installation.

15 An integration facility typically incorporates a software development environment as well as an area for representative system hardware and software components that have successfully passed unit testing to be integrated and tested prior to site installation.

16 This is not quantified and it is difficult to put a number on "reliability" for a typical ITS device. For traffic controllers, the general history has been a 1-2 percent DOA rate for initial installation and typically between 4 percent and 8 percent failure rate per year. While these are non-scientific numbers, this has been the general experience for these types of devices.