Ramp Performance Monitoring, Evaluation and Reporting

9.1 Chapter Overview

Performance monitoring provides a mechanism to determine the effectiveness of the ramp management strategies and actions described in this handbook. Performance monitoring ties the strategies and actions selected in Chapter 6 back to the program goals and objectives outlined in Chapter 3, allowing practitioners to determine whether the selected strategies help resolve the problems that occur on or near the ramps of interest. Additionally, performance monitoring, evaluation, and reporting promote ongoing support for the ramp management strategies and identify ways to improve them, which can increase operational efficiency and reduce unnecessary expenditures. Finally, performance monitoring and reporting provide feedback for refining agency and traffic management program goals and objectives.

This chapter guides practitioners through the process of monitoring, evaluating, and reporting the performance of ramp operations and the ramp management strategies selected and implemented in Chapters 6 and 7, respectively. The processes and methods that may be used to monitor and evaluate the performance of ramp management strategies are presented. This includes a discussion of the types of ramp management analyses, examples of performance measures, and costs and resources needed to conduct these activities.

Traffic managers should use the information developed in Chapter 6 (selecting ramp management strategies), Chapter 7 (implementing ramp strategies), and Chapter 8 (operating and maintaining ramp strategies) to develop performance measures that are consistent with the program goals and objectives discussed in Chapter 3. The results of the performance monitoring effort are then fed back into the program to refine and update it on a periodic basis.

Throughout this chapter, references are made to previously conducted evaluation efforts, including the recent evaluation of the Twin Cities, Minnesota, ramp metering system. This evaluation is also presented as a case study in Chapter 11.

Chapter 9 Objectives:

- Objective 1: Explain what is involved in ramp management analysis, including performance measures and analysis tools.

- Objective 2: Describe how to tailor monitoring and evaluation efforts to meet the needs of the deploying agency.

- Objective 3: Describe how to measure and estimate ramp, freeway, and arterial performance.

- Objective 4: Describe analysis methodologies and reporting tools and techniques.

- Objective 5: Explain the importance of performance monitoring, evaluation, and reporting in maintaining an effective ramp management system.

9.2 Ramp Management Analysis Considerations

The analysis of ramp management performance can be performed as a single study, on a periodic basis, or as an ongoing continuous program, depending on the needs and available resources of the deploying agency. For the purposes of this manual, the general steps in analyzing ramp management performance are described as:

- Performance Monitoring – The collection of performance statistics, using either manual or automated methods, to enable the assessment of particular Measures of Effectiveness (MOEs) related to the performance of the ramp management deployment.

- Evaluation – The analysis of the collected data to provide meaningful feedback on the performance of the system.

- Reporting – The output of the evaluation results in a format appropriate to the needs of agency personnel, elected decision makers, the public, and/or other potential audiences.

This section summarizes some of the important considerations in developing a performance analysis process and provides practitioners with guidelines for tailoring performance monitoring, evaluation, and reporting efforts that are appropriate to their needs.

Before initiating any ramp performance monitoring or evaluation effort, practitioners should consider the many factors that will shape the overall effort. Careful consideration of these factors helps ensure that the monitoring and evaluation results are relevant to the objectives of the effort, technically valid, and appropriate for the intended audience. Some important considerations are discussed in the following sections.

9.2.1 Types of Ramp Management Analysis

One of the first considerations in planning an evaluation is to identify the type of analysis to be performed. The type of analysis to be performed is largely defined by the objectives of the evaluation and type of feedback desired. Ramp management evaluations may be performed prior to implementation, conducted as “before” and “after” snapshot views of performance, or implemented as a continuous monitoring and evaluation process. The evaluation efforts may also be narrowly focused to analyze one specific performance impact, or may be more broadly defined to capture the comprehensive regional benefits of the ramp management application. These analyses may also be intended to isolate the impacts of the ramp management deployment by itself, or to evaluate the performance of ramp management as part of a combination of operational strategies.

This section summarizes the basic types of analysis related to ramp management performance and discusses how the differing needs of each type of study substantially influence the analysis procedures to be performed. Some of the different types of analysis include:

- Pre-Deployment Studies – Analysis performed to determine the appropriateness of ramp management applications for a particular location.

- System Impact Studies – Analysis performed to identify the impact of an existing ramp management strategy on one or more selected MOEs.

- Benefit/Cost Analysis – Comprehensive analysis conducted to evaluate the cost effectiveness of a ramp management application.

- Ongoing System Monitoring and Analysis – Continuous, real-time performance analysis for the purpose of providing feedback to system operators.

The following sections provide descriptions of these various types of analyses and discuss how the intended purpose of the analysis helps to determine the appropriate approach.

Pre-Deployment Studies

Pre-deployment studies are typically performed to assess the feasibility and appropriateness of ramp management applications for a particular location. These studies may be performed to analyze the potential impacts of introducing ramp management deployments in a region that currently does not use these strategies, or may be used to assess the impacts of expanding an existing ramp management program to new locations within a region. These studies may also be implemented to estimate the impact of a proposed change in ramp management strategy at an existing location.

As the name implies, these analyses are performed prior to the actual implementation of the strategy. Thus, the impacts estimated in these studies represent predictions of what will likely occur, rather than observations of what has occurred. These analyses, however, often use observed “before” and “after” results from previously conducted system impact studies (described below) of existing ramp management activities in the region, or observed results from other regions as inputs to the analysis process. These inputs may be entered into a variety of planning tools, ranging from simple spreadsheet models to complex micro-simulation programs, to evaluate the expected impacts of the potential ramp management application. The U.S. Department of Transportation (DOT) Intelligent Transportation Systems (ITS) Joint Program Office maintains an ITS benefits website that lists observed results for a wide range of ITS projects and elements from regions across the United States. [1]

Pre-deployment studies may be used to analyze ramp management applications by themselves, in combination with other improvements, or as alternatives to other improvements. Although not technically considered an evaluation or monitoring effort, pre-deployment studies are mentioned here since they may use similar analysis approaches and tools. The use of pre-deployment studies in the selection of appropriate ramp management strategies is discussed in Chapter 6 and supported by the decision diagrams. In addition, the planning of these strategies for implementation is discussed in greater detail in Chapter 10.

System Impact Studies

System impact studies attempt to identify the impact of a ramp management application on one or more particular performance measures. These studies typically compare conditions “before” the deployment of ramp management with conditions “after” the strategy is deployed. This, however, is not always the case. In the evaluation of the Twin Cities ramp metering system conducted in 2000, the entire ramp metering system was shut down for six weeks so that conditions “without” ramp meters could be identified and compared with conditions observed “with” the fully functioning system.

The purpose of these studies is often to provide the system operators with direct feedback on the effectiveness of their implemented strategies. For example, a system impact study may be implemented to assess the success of a ramp management deployment in mitigating a particular system deficiency, such as higher than expected crash rates in a merge area. These studies are also frequently conducted to assess the particular benefits of ramp management deployments. The results may then be communicated to decision makers and/or the traveling public to help justify and promote ramp management as an effective traffic management strategy.

The traffic conditions used for comparison in these system impact studies are typically based on observed data collected in the field using manual or automated data collection methods. In evaluations where the available evaluation resources do not support the collection of ground-truth (observed) data, or in situations where the collection of this data is not feasible, various models and/or traffic analysis tools may be used to simulate these conditions for comparison. These tools may include a wide range of sketch planning tools, Highway Capacity Manual (HCM)-based tools [2], travel demand models, or macro- and micro-simulation tools. The FHWA’s Traffic Analysis Toolbox Volumes 1 and 2 provide additional discussion of the available tools as well as guidelines for selecting the appropriate tool. [3]

Benefit/Cost Analysis

In many regards, benefit/cost analyses are similar to system impact studies in that both represent assessments of the impacts related to the implementation of a particular project or strategy. Whereas system impact studies may focus on particular performance measures, a benefit/cost analysis is broader and attempts to fully account for the comprehensive, multi-modal impacts of ramp management strategies. Benefit/cost analysis weighs the complete observed impacts of the system – including both positive impacts (e.g., reduced travel time on the mainline facility) and negative impacts (e.g., increased emissions at the ramp queues) – against the cost of implementing and operating the ramp management strategy.
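As a rough illustration of this weighing, the Python sketch below computes a benefit/cost ratio from a list of impacts that are assumed to have already been monetized and annualized. Disbenefits, such as added delay in ramp queues, are entered as negative values. The impact names and dollar amounts are hypothetical placeholders, not results from any evaluation cited in this chapter.

```python
from dataclasses import dataclass


@dataclass
class Impact:
    """A monetized impact of a ramp management strategy (hypothetical values)."""
    name: str
    annual_value: float  # dollars per year: positive = benefit, negative = disbenefit


def benefit_cost_ratio(impacts: list[Impact], annual_cost: float) -> float:
    """Return net annual benefits (benefits minus disbenefits) per dollar of annualized cost."""
    if annual_cost <= 0:
        raise ValueError("annual_cost must be positive")
    net_benefit = sum(impact.annual_value for impact in impacts)
    return net_benefit / annual_cost


# Hypothetical annualized values for illustration only.
impacts = [
    Impact("mainline travel time savings", 4_000_000.0),
    Impact("crash reduction", 1_500_000.0),
    Impact("added delay in ramp queues", -800_000.0),
    Impact("added emissions at ramp queues", -200_000.0),
]
ratio = benefit_cost_ratio(impacts, annual_cost=1_000_000.0)
print(f"Benefit/cost ratio: {ratio:.1f}")  # Benefit/cost ratio: 4.5
```

Some analysts instead add disbenefits to the cost side of the ratio (here that would give 5.5 million / 2.0 million, or 2.75, rather than 4.5). Either convention can be defensible, but because the choice changes the ratio, it should be stated explicitly when results are reported.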

The purpose of these analyses is typically to identify the relative effectiveness of investing in the proposed strategy by providing a common point of comparison with other strategies, which may be used to prioritize funding for future applications. The information generated by benefit/cost analyses is also used to communicate the relative benefits of the system to decision makers and the traveling public. The comprehensive analysis of the ramp metering system in the Twin Cities region of Minnesota (cited throughout this section and presented as a case study in Chapter 11) is an example of a regional benefit/cost analysis of a ramp metering system. This analysis was initiated to identify and communicate the benefits of the system to lawmakers and residents in the region.

Benefit/cost analyses are also typically based on comparisons of conditions both with and without the application of the strategy. The compared conditions may represent a snapshot view or may be based on longer-term trends, depending on the needs of the particular study. Due to the more comprehensive nature of benefit/cost analyses, however, these studies often make more substantial use of analysis tools and models to generate estimates of the full range of possible impacts.

Although intended to provide a comprehensive quantitative analysis of the benefits and costs of the ramp management application, there are many impacts that are difficult or impossible to quantify, such as traveler perceptions. No benefit/cost analysis can fully encompass all of the possible impacts of a ramp management system, so it is important to recognize that benefit/cost analysis provides only a partial view of the overall picture that should be evaluated in assessing the success of the strategy.

Similar to the other types of analysis, a benefit/cost analysis can be designed to isolate the particular impacts and benefits related specifically to ramp management. It may also be utilized as part of a broader evaluation designed to capture the benefits of a selected combination of traffic management strategies.

Ongoing System Monitoring and Analysis

The purpose of ongoing system monitoring and analysis is to provide system operators with direct, real-time feedback on the performance of the ramp management strategy, to allow for more active and precise management of the system. If the data collected through this monitoring effort is appropriately archived, additional analysis may be performed to identify trends that show how the impacts of ramp management may change over time or vary under different traffic conditions.

The ongoing nature of monitoring efforts typically requires a dependence on automated data sources, such as loop detector data, radar- or acoustic-based speed detectors, closed-circuit television (CCTV) cameras, and automatic vehicle location systems. Often, these automated data sources may be deployed as part of a ramp metering system or a general freeway management system. Reliable access to accurate data sources such as these is a prerequisite for implementing a successful monitoring and analysis program. Refer to Section 9.4.2 for more detail on the benefits and challenges of automated data collection and monitoring.

Although these ongoing monitoring and analysis efforts are intended to provide performance data to system operations personnel, the data they generate may also be used in other evaluation efforts. For example, automated system data that the Minnesota DOT collected to monitor real-time performance and make operational decisions was used extensively in the benefit/cost analysis of the Twin Cities metering system. This historical volume and speed data was used to extrapolate impacts observed during a limited data collection window to other time periods and traffic conditions.
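The general idea of extending a short observation window with archived detector data can be sketched as follows. This is a simplified illustration, not the method used in the Twin Cities evaluation: it assumes that per-vehicle delay savings were measured during the with/without window for a few volume ranges, and that those savings apply to any archived hour falling in the same range. The volume thresholds, savings values, and archived volumes are all hypothetical.

```python
# Hypothetical delay saved per vehicle (seconds), measured during a short
# with/without data collection window and grouped by mainline hourly volume.
OBSERVED_SAVINGS_S = {"low": 0.0, "medium": 20.0, "high": 60.0}


def volume_bin(hourly_volume: int) -> str:
    """Classify an hour by mainline volume (illustrative thresholds, veh/h)."""
    if hourly_volume < 3000:
        return "low"
    if hourly_volume <= 5000:
        return "medium"
    return "high"


def extrapolate_vehicle_hours_saved(archived_hourly_volumes: list[int]) -> float:
    """Apply the observed per-vehicle savings to every archived hour."""
    total_seconds = 0.0
    for volume in archived_hourly_volumes:
        total_seconds += volume * OBSERVED_SAVINGS_S[volume_bin(volume)]
    return total_seconds / 3600.0  # vehicle-hours of delay saved


# A few archived hourly volumes standing in for a longer detector archive.
archive = [2500, 4200, 5600, 6100, 3900]
print(extrapolate_vehicle_hours_saved(archive))  # 240.0
```

In practice, an evaluator would likely condition the savings on more than volume alone (for example, on time of day, direction, or observed mainline speed) and would report how sensitive the extrapolated totals are to these assumptions.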

9.2.2 Identifying the Appropriate Study Area

The selected study area can have significant implications for the analysis data requirements, evaluation techniques, resource requirements, and even the results. These implications are discussed in this section to help practitioners identify the study area suited to their particular needs.

Ramp management applications can have impacts far beyond the local area in which they are implemented. Depending on travel pattern changes, impacts may be observed at freeway bottleneck locations far downstream from the ramp itself, arterial intersections located many miles from the interchange, or even on alternative modes such as transit. Failure to define the study area broadly enough may result in critical impacts not being captured and an overstatement or understatement of reported benefits. On the other hand, defining the study area too broadly may result in the inefficient use of evaluation resources if efforts are diverted toward analyzing inconsequential impacts. Therefore, it is critically important to identify an appropriate study area prior to the implementation of the evaluation effort to ensure the proper assessment of system impacts.

There are no firmly established guidelines for identifying the appropriate study area; however, this decision is usually based on:

- Purpose of the Study – Is the evaluation effort being undertaken to identify the ability of the ramp management strategy to mitigate a specific deficiency in a particular location, or does it intend to provide a comprehensive accounting of the region-wide benefits and costs?

- Extent of the Ramp Management Application – Is the evaluation being focused on a single or a very limited number of ramps, or does the application involve multiple ramps?

- Knowledge of Local Traffic Conditions – Local operations personnel are usually familiar with traffic conditions and should be involved in any decision regarding the extent of the study area.

Furthermore, factors such as the particular performance measures being evaluated, the proposed analysis tools, and the available evaluation resources are interdependent with the choice of study area. The intended performance measures, analysis tools, and resource availability should be considered in determining the study area. Likewise, the identified study area may also determine the possible performance measures, the appropriate analysis tools, and the evaluation resources required.

Study areas can be generalized into three categories: localized, corridor, or regional. These categories are discussed below with examples of when they should be used.

- Localized Analysis – This analysis focuses on the impacts observed on the facilities immediately adjacent to the ramp management application and is the most appropriate for limited-scale deployments or for system impact evaluations focused on a narrowly defined set of performance measures. For example, an evaluation effort solely focused on identifying the ability of a ramp meter application to decrease the number of crashes occurring within the immediate merge area might limit the study area to this narrowly defined extent.

- Corridor Analysis – Expanding the study area to the corridor level is more appropriate when multiple ramp locations are involved, or when the deployment is expected to affect any of the selected performance measures along an entire corridor. The extent of the study corridor, including the freeway mainline, ramp, and arterial facilities to be included, should be based on the local street pattern and knowledge of local travel demand. The evaluation of the Madison, Wisconsin, ramp meter pilot deployment, presented as a case study in Chapter 11, was conducted as a corridor analysis. The evaluators were interested in capturing the full impacts of the ramp metering deployment, but because the deployment was limited in extent (five ramps on a single beltline corridor), it was not expected to produce significant impacts outside the defined corridor.

- Regional Analysis – A regional study area is most appropriate when a comprehensive accounting of all possible impacts is required, or when the deployments are scattered across a large area. The evaluation of the Twin Cities ramp metering system was conducted as a regional analysis because the Minnesota DOT wanted to identify the full impacts of the entire system (approximately 430 meters) on the overall region. Regional analyses can be the most costly to conduct because of their significant data requirements. Therefore, they often use large-scale traffic analysis tools (e.g., regional travel demand models) to estimate impacts, rather than depending solely on observed before and after data.

Not all evaluation efforts will fit neatly into these study area definitions. Some evaluations may use multiple study area definitions within the same effort based on the performance measures being evaluated or the availability of data. For example, in the Twin Cities evaluation, extensive analysis was first performed on several representative corridors to identify the specific impacts to the freeway, ramp, and parallel arterial facilities. The findings from this corridor-level analysis were then extrapolated regionally using a series of spreadsheet-based analysis tools to estimate the regional impacts.

9.2.3 Performance Measures

The FHWA’s Freeway Management and Operations Handbook provides the following overview of performance measures: [4]

“Performance measures provide the basis for identifying the location and severity of problems (e.g., congestion and high crash rates), and for evaluating the effectiveness of the implemented freeway management strategies. This monitoring information can be used to track changes in system performance over time, identify systems or corridors with poor performance, identify the degree to which the freeway facilities are meeting goals and objectives established for those facilities, identify potential causes and associated remedies, identify specific areas of a freeway management program or system that requires improvement/enhancements, and provide information to decision-makers and the public. In essence, performance measures are used to measure how the transportation system performs with respect to the overall vision and adopted policies, both for the ongoing management and operations of the system and for the evaluation of future options.

Agencies have instituted performance measures and the associated monitoring, evaluation, and reporting processes for a variety of reasons: to provide better information about the transportation system to the public and decision makers (in part due, no doubt, to a greater expectation for accountability of all government agencies); to improve management access to relevant performance data; and to generally improve agency efficiency and effectiveness, particularly where demands on the transportation agency have increased while the available resources have become more limited.”

The particular performance measures selected for monitoring, evaluation, and reporting have substantial influence over the analysis structure and requirements. Performance measures should be carefully identified and mapped to the specific needs of the study. The FHWA’s Freeway Management and Operations Handbook provides guidelines for developing good performance measures, including:

- Goals and Objectives – Performance measures should be identified to reflect goals and objectives, rather than the other way around. This approach helps to ensure that an agency is measuring the right parameters and that “measured success” will in fact correspond with actual success in terms of goals and objectives. Measures that are unfocused and have little impact on performance are less effective tools in managing the agency. Moreover, just as there can be conflicting goals, reasonable performance measures can also be divergent (i.e., actions that move a particular measure toward one objective may move a second measure away from another objective). Such conflicts may be unavoidable, but they should be explicitly recognized, and techniques for balancing these interests should be available.

- Data Needs – Performance measures should not be solely defined by what data are readily available. Difficult-to-measure items, such as quality of life, are important to the community. Data needs and the methods for analyzing them should be determined by what it will take to create or “populate” the desired measures. At the same time, some sort of “reality check” is necessary. For example: Are the costs to collect, validate, and update the underlying data within reason, particularly when weighed against the value of the results? Can easier, less costly measures satisfy the purpose – perhaps not as elegantly, but in a way that does the job? Ideally, agencies will define and, over time, implement the necessary programs and infrastructure (e.g., detection and surveillance subsystems) for data collection and analysis that will support a more robust and descriptive set of performance measures.

- Decision-Making Process – Performance measures must be integrated into the decision-making process. Otherwise, performance measurement will be simply an add-on activity that does not affect the agency’s operation. Performance measures should be based on the information needs of decision makers, with the level of detail and the reporting cycle of the performance measures matching the needs of the decision makers. As previously noted, different decision-making tiers will likely have different requirements for performance measures. One successful design is a set of nested performance measures such that the structure is tiered from broader to more detailed measures for use at different decision-making levels.

- Facilitate Improvement – The ultimate purpose of performance measures must clearly be to improve the products and services of an agency. If not, they will be seen as mere “report cards”, and games may be played simply to get a good grade. Performance measures must therefore provide the ability to diagnose problems and to assess outcomes that reveal actual operational results (as compared to outputs that measure level of effort, which may not be the best indicator of results).

- Stakeholder Involvement – Performance should be reported in stakeholder terms; and the objectives against which performance is measured should reflect the interests and desires of a diverse population, including customers, decision makers, and agency employees. Buy-in from the various stakeholders is critical for initial acceptance and continued success of the performance measures. If these groups do not consider the measures appropriate, it will be impossible to use the results of the analysis process to report performance and negotiate the changes needed to improve it. Those who are expected to use the process to shape and make decisions should be allowed to influence the design of the program from the beginning. Similarly, those who will be held accountable for results (who are not always the same as the decision makers) and/or will be responsible for collecting the data should be involved early on, to ensure that they will support rather than circumvent the process or its intended outcome. The selected performance measures should also reflect the point of view of the customer or system user. An agency must think about who its customers are, what the customers actually expect of the department’s activities and results, and how to define measures that describe that view.

- Other Attributes – Good performance measures possess several attributes that cut across all of the “process” issues noted previously. These include:

  - Limited Number of Measures – All other things being equal, fewer measures are better than more, particularly when initiating a program. Data collection and analytical requirements can quickly overwhelm an agency’s resources. Similarly, information that is too voluminous, too varied, or too detailed can overwhelm decision makers. The corollary is to avoid any performance measure that reflects an impact already captured by other measures. Performance measures can be likened to the gauges of a dashboard – several gauges are essential, but a vehicle with too many gauges is distracting to the driver.

  - Easy to Measure – The data required for performance measures should be easy to collect and analyze, preferably directly and automatically from a freeway management (or other) system. For example, in most ramp controllers, the firmware and the detector loops can automatically detect when a vehicle has violated the red signal phase. This information can be collected and used to identify high-violation locations that could benefit from increased enforcement or a change in signal timing.

  - Simple and Understandable – Within the constraints of required precision, accuracy, and facilitating improvement, performance measures should be simple to apply, with consistent definitions and interpretations. Any presentation of performance measure data must be carefully designed so that the audience can easily understand the information and the data analysis provides the information necessary to improve decision-making.

  - Time Frame – The decision-making “tiers” can have significantly different time frames, both for making the decision and for the effect of that decision to take place. Monitoring the effectiveness of a policy plan requires measures that can reflect long-term changes in system usage or condition, whereas performance measures for the operation of a traffic management center (TMC) should reflect changes within a “real-time” context. Once established, performance measures should remain in place long enough to provide consistent guidance for improvement and monitoring, and to determine whether the objectives are being met.

  - Sensitivity – Performance measurement must be designed so that it can detect changes of the same order of magnitude as those likely to result from the implemented actions.

  - Geographically Appropriate – The geographic area covered by a measure varies depending on the decision-making context in which it is used. Measures used to evaluate progress on broad policies and long-range planning goals and objectives are often regional, statewide, or even nationwide in scope. To be effective in an operations context, measures may need to focus on a specific geographic area (e.g., a corridor or system).
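The “Easy to Measure” example above (red-phase violations detected automatically by ramp controllers) can be turned into a simple monitoring report. The sketch below assumes a hypothetical log in which each passage-detector actuation records the ramp and the meter indication at that moment; actual controller logs differ by vendor and firmware, and the 20-per-1,000 threshold is an arbitrary placeholder that an agency would set for itself.

```python
from collections import Counter
from typing import NamedTuple


class PassageEvent(NamedTuple):
    """Hypothetical log record: one vehicle crossing a ramp meter's passage detector."""
    ramp_id: str
    signal_state: str  # meter indication when the vehicle crossed: "green" or "red"


def violation_rates(events: list[PassageEvent]) -> dict[str, float]:
    """Red-phase violations per 1,000 passage-detector actuations, by ramp."""
    totals = Counter(event.ramp_id for event in events)
    violations = Counter(event.ramp_id for event in events if event.signal_state == "red")
    return {ramp: 1000.0 * violations[ramp] / count for ramp, count in totals.items()}


def high_violation_ramps(events: list[PassageEvent], threshold_per_1000: float) -> list[str]:
    """Ramps whose violation rate exceeds the threshold, e.g., for targeted enforcement."""
    rates = violation_rates(events)
    return sorted(ramp for ramp, rate in rates.items() if rate > threshold_per_1000)


events = (
    [PassageEvent("R1", "green")] * 95 + [PassageEvent("R1", "red")] * 5
    + [PassageEvent("R2", "green")] * 199 + [PassageEvent("R2", "red")] * 1
)
print(violation_rates(events))  # {'R1': 50.0, 'R2': 5.0}
print(high_violation_ramps(events, threshold_per_1000=20.0))  # ['R1']
```

Normalizing by the number of actuations, rather than reporting raw violation counts, keeps a busy ramp from appearing problematic simply because it serves more vehicles.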

The FHWA’s Freeway Management and Operations Handbook also provides a synthesis of innovative performance measures identified in recent research efforts into performance-based planning. [4] This handbook will not attempt to document the full inventory of available performance measures. Instead, this section highlights several categories of performance measures and discusses their potential implications for the analysis effort. In general, when selecting a performance measure, practitioners should also consider the method for obtaining the data needed to evaluate it. Section 9.2.4 presents guidelines for developing an analysis structure that promotes consideration of these factors while drafting the evaluation approach. The categories below are illustrated with example measures from previously conducted ramp management analyses:

Safety – Safety is most often measured through the change in the number of crashes, segmented by severity (e.g., fatal, injury, property damage only, etc.). This performance measure may also be segmented by crash type (e.g., rear-end, side-swipe, etc.). The data supporting this performance measure is most typically obtained from crash records kept by one or more emergency responder agencies or the Department of Transportation. The format and availability of the regional crash data greatly influences the format of the performance measure used to evaluate safety impacts.

Evaluators should use caution in developing crash-related performance measures and in analyzing the crash data. Crashes are random events, and crash statistics are often based on small sample sizes, particularly for less frequent crash types such as those involving fatalities. As a result, a small number of crashes may cause the crash rate to spike over short periods or at particular locations, and these spikes may be misinterpreted as effects of the ramp management deployment. Therefore, evaluators should use longer-term historical data to validate the crash rate, or consider consolidating some crash categories to ensure an adequate sample size. Furthermore, it may be more appropriate to evaluate the change in the crash rate (e.g., number of crashes per vehicle-mile traveled) rather than the change in the actual number of observed crashes, to help control for changes in traffic volumes.
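The sketch below shows one way to compute crash rates per 100 million vehicle-miles traveled (VMT) and to flag crash categories that are too sparse to analyze on their own. All volumes, segment lengths, crash counts, and the minimum count of five are hypothetical.

```python
def vehicle_miles_traveled(avg_daily_volume: float, segment_length_mi: float, days: int) -> float:
    """Exposure on a segment over the study period."""
    return avg_daily_volume * segment_length_mi * days


def crash_rate_per_100m_vmt(crashes: int, vmt: float) -> float:
    """Crashes per 100 million vehicle-miles traveled."""
    return crashes * 100_000_000 / vmt


def sparse_categories(counts: dict[str, int], min_count: int) -> list[str]:
    """Crash categories too small to analyze alone; candidates for consolidation."""
    return [category for category, n in counts.items() if n < min_count]


# Hypothetical before/after data for a 5-mile segment over 250 weekdays each.
before_rate = crash_rate_per_100m_vmt(60, vehicle_miles_traveled(80_000, 5.0, 250))
after_rate = crash_rate_per_100m_vmt(55, vehicle_miles_traveled(88_000, 5.0, 250))
print(before_rate, after_rate)  # 60.0 50.0

after_by_severity = {"fatal": 0, "injury": 12, "property damage only": 43}
print(sparse_categories(after_by_severity, min_count=5))  # ['fatal']
```

In this made-up example, the crash count falls only about 8 percent (from 60 to 55), but because traffic volume grew 10 percent, the crash rate falls about 17 percent (from 60.0 to 50.0 crashes per 100 million VMT). The empty fatal category would be a candidate for consolidation with injury crashes, as discussed above.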

In the Twin Cities evaluation, the Minnesota Department of Public Safety maintained a useful database of all regional crashes reported by local police agencies, which was used to obtain the crash data. [5]

The length of the data collection period (six weeks) and the extent of the study area (region-wide) were sufficient to segment the observed crash data by severity and type. However, no fatalities were observed during either of the data collection periods.
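To illustrate why rates are preferred over raw counts, the following Python sketch normalizes crash counts by VMT for a before and an after period. The reporting unit (crashes per 100 million VMT), the function name, and all input values are hypothetical and chosen only for illustration; they are not Twin Cities data.

```python
def crash_rate_per_100m_vmt(crashes: int, vmt: float) -> float:
    """Return crashes per 100 million vehicle-miles traveled (reporting unit assumed)."""
    if vmt <= 0:
        raise ValueError("VMT must be positive")
    return crashes / vmt * 100_000_000


# Hypothetical observations for one study corridor (not real data).
before = {"crashes": 120, "vmt": 80_000_000}
after = {"crashes": 110, "vmt": 88_000_000}

rate_before = crash_rate_per_100m_vmt(**before)
rate_after = crash_rate_per_100m_vmt(**after)

# Percent change in the raw count versus percent change in the rate.
count_change = (after["crashes"] - before["crashes"]) / before["crashes"] * 100
rate_change = (rate_after - rate_before) / rate_before * 100

print(f"count change: {count_change:.1f}%  rate change: {rate_change:.1f}%")
```

In this example the raw count falls by about 8 percent while the rate falls by about 17 percent, because VMT grew by 10 percent between periods. The caution above about random variation still applies: a rate comparison alone does not show that the change exceeds normal fluctuation.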

Mobility – Travel mobility impacts are typically measured as a change in travel time, speed, or delay. These measures are targeted at capturing the user’s travel experience. Therefore, these measures are most effective when captured on a per-trip basis, such as the change in travel time for a door-to-door trip. Use of aggregate system measures such as total system person-hours of travel (PHT) may not accurately capture user benefits. Likewise, spot measurements of speed may not accurately reflect the individual’s overall travel experience.

In the Twin Cities evaluation, travel time and speed were used as performance measures. Travel time was collected for several representative trips utilizing arterial, ramp, and freeway facilities. Spot speeds were also collected to support the travel time findings.

Travel Time Reliability – A number of innovative performance measures have recently been developed to aid in the evaluation of travel time reliability. Examples include the travel time index (TTI), which compares travel conditions in the peak period with free-flow conditions, and the buffer time index (BTI), which expresses the extra “buffer” time needed to arrive at a destination on time 95 percent of the time (i.e., to be late to work only about one day per month). [6]

These performance measures are critically important to ramp management evaluations and the analysis of many other operational improvements. These measures are intended to capture the impact of reducing travel time variability and making travel times more predictable. More predictable travel times allow travelers to better budget their travel schedules and avoid unexpected delays. Ramp metering systems reduce travel time variability and have a potentially significant impact on this measure.

To illustrate the magnitude of this impact, the estimated impacts on travel time reliability in the Twin Cities evaluation outweighed the impacts on average travel time by a factor of ten, and overall accounted for 40 percent of the total benefits identified for the system.
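The TTI and BTI described above can be computed from a set of observed peak-period travel times for a trip. The Python sketch below uses the common definitions TTI = mean peak-period travel time / free-flow travel time and buffer index = (95th-percentile travel time minus mean travel time) / mean travel time. The travel times and the 18-minute free-flow time are hypothetical, and agencies may define the peak period, percentile method, and free-flow basis differently.

```python
import statistics


def travel_time_index(travel_times: list[float], free_flow_tt: float) -> float:
    """Mean peak-period travel time relative to free-flow travel time."""
    return statistics.mean(travel_times) / free_flow_tt


def buffer_index(travel_times: list[float]) -> float:
    """Extra time, as a fraction of the mean, needed to arrive on time 95% of the time."""
    mean_tt = statistics.mean(travel_times)
    # With n=20, quantiles() returns 19 cut points; the last one is the 95th percentile.
    p95 = statistics.quantiles(travel_times, n=20, method="inclusive")[-1]
    return (p95 - mean_tt) / mean_tt


# Hypothetical peak-period travel times (minutes) for 20 weekday trips.
trips = [20] * 10 + [22] * 5 + [25] * 3 + [30, 40]

tti = travel_time_index(trips, free_flow_tt=18.0)  # free-flow time is hypothetical
bti = buffer_index(trips)
print(f"TTI = {tti:.2f}, buffer index = {bti:.0%}")
```

With these sample values the buffer index is about 0.34, meaning a traveler would budget roughly a third more than the average trip time to arrive on time 95 percent of the time.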

Environmental – Environmental performance measures used in ramp management analyses typically include changes in vehicle emissions and in fuel consumption. Identifying effective environmental performance measures that may be successfully evaluated within the framework and available resources can be a challenge. For example, the implementation of ramp metering can simultaneously reduce emissions and fuel use on the freeway mainline, while increasing these factors at the ramp meters. Therefore, the data collection and analysis methodology for these performance measures must be sensitive to this situation.

In the Twin Cities evaluation, the estimation of fuel use impacts was particularly problematic. Fuel use was estimated by applying speed-based fuel consumption rates to the collected speed and vehicle-miles traveled (VMT) data. Freeway speeds were observed to increase with ramp meters as stop-and-go driving conditions were reduced – a situation that would be expected to result in decreased fuel use. However, the fuel use analysis was based on traditional relationships that estimate higher fuel consumption rates as average vehicle speeds increase. While average speeds did increase in the Twin Cities study, heavy accelerations and decelerations decreased as traffic flow became more stable and smooth. Thus, in reality, fuel use likely decreased, but this effect was not captured in the traditional analysis.

More advanced fuel estimation methodologies that are sensitive to vehicle acceleration profiles were considered for use in the evaluation. However, the data required for this analysis could not be collected within the timeframe and resources available for the Twin Cities study. Furthermore, limited studies comparing the accuracy of these advanced methodologies with more traditional methods have been inconclusive to date, which hindered their application in this highly visible evaluation.

A similar lack of sensitivity to actual operating conditions in many vehicle emission estimation methodologies can also make emissions impacts difficult to estimate.

Facility Throughput – These performance measures are targeted toward representing the system operator’s perspective and typically include one or more of the following: throughput (vehicle or person volumes), level of service (LOS), facility speeds, volume-to-capacity (V/C) ratio, or queuing measures (length and frequency). The particular performance measure(s) selected in this category greatly influence the format of the data that need to be collected. In general, data for person-based throughput measures are more difficult to collect than data for vehicle-based measures, but person-based measures often provide a much more accurate picture of changes in traveler behavior, especially for special-use treatments on ramps (e.g., HOV bypass lanes). For the majority of smaller-scale ramp management evaluations, vehicle occupancies are not expected to change significantly, which allows vehicle-based measures to be used without a significant loss of accuracy.

The Twin Cities evaluation included measures of vehicle volumes on freeways, ramps, and parallel arterials, as well as measures of transit passenger counts to evaluate potential mode shifts. The evaluation also included a queuing analysis measuring queue lengths at the ramp facilities.
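As a sketch of why person-based measures can diverge from vehicle counts on ramps with special-use treatments such as HOV bypass lanes, the Python example below converts vehicle counts to person throughput using an average vehicle occupancy and adds separately counted transit passengers. The function name, occupancy values, and counts are hypothetical.

```python
def person_throughput(vehicles_per_hour: float, avg_occupancy: float,
                      transit_riders_per_hour: float = 0.0) -> float:
    """Persons per hour: private vehicles x average occupancy, plus transit riders
    counted separately (buses are assumed to be excluded from vehicles_per_hour)."""
    return vehicles_per_hour * avg_occupancy + transit_riders_per_hour


# Hypothetical ramp counts before and after adding an HOV bypass lane.
before = person_throughput(600, 1.10, transit_riders_per_hour=90)
after = person_throughput(580, 1.25, transit_riders_per_hour=90)

vehicle_change = (580 - 600) / 600 * 100
person_change = (after - before) / before * 100
print(f"vehicles: {vehicle_change:+.1f}%  persons: {person_change:+.1f}%")
```

In this hypothetical case vehicle throughput falls slightly while person throughput rises, a shift that a vehicle-only measure would miss.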

Public Perceptions/Acceptance – The perceptions of the traveling public regarding the benefits of the ramp management system, and their acceptance of its performance, can be extremely important to measure, depending on the purpose of the study. These measures are typically assessed through one or more focus groups, telephone surveys, intercept surveys, or survey panels. Collecting these data often requires significant resources. Nevertheless, the information on public perceptions gained through these methods can be invaluable in shaping public outreach campaigns.

These performance measures are often used to support the findings from field data collection and can be used to identify areas where perceptions differ from reality. A critical finding from the Twin Cities evaluation showed that the public’s perception of waiting times in ramp queues was nearly twice the actual wait time recorded from the field data. Insight into this perception was a critical input in modifying the system’s operational procedures following the evaluation.

Other Performance Measures – Many other performance measures that do not fit neatly in the above categories have been used in evaluating ramp management applications. Most common is the use of system costs in benefit/cost analyses and other studies. In evaluating costs, it is important to identify both the full up-front cost of planning and implementing the ramp management application (capital costs) and the ongoing operations and maintenance costs associated with the deployment. Identifying these costs can be problematic, because ramp management deployment and operating costs are often lumped in with other programs, and it may take some effort to isolate the costs specifically attributable to ramp metering. The U.S. DOT ITS Joint Program Office maintains an ITS cost database on its website that provides both unit and system costs of ITS elements and projects throughout the country. [7]
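One common way to put capital and operations and maintenance (O&M) costs on the same footing in a benefit/cost analysis is to annualize the capital cost with a capital recovery factor. The Python sketch below assumes a discount rate, service life, and dollar amounts that are purely hypothetical; this handbook does not prescribe a particular discounting method.

```python
def capital_recovery_factor(rate: float, years: int) -> float:
    """Share of a present-value cost recovered each year over `years` at `rate`."""
    if rate == 0:
        return 1 / years
    growth = (1 + rate) ** years
    return rate * growth / (growth - 1)


def benefit_cost_ratio(annual_benefits: float, capital_cost: float,
                       annual_om_cost: float, rate: float, years: int) -> float:
    """Annual benefits divided by annualized capital cost plus annual O&M cost."""
    annualized_cost = capital_cost * capital_recovery_factor(rate, years) + annual_om_cost
    return annual_benefits / annualized_cost


# Hypothetical inputs: $2.0M capital, $150k/yr O&M, 7% discount rate, 10-year life.
crf = capital_recovery_factor(0.07, 10)
bc = benefit_cost_ratio(annual_benefits=1_500_000, capital_cost=2_000_000,
                        annual_om_cost=150_000, rate=0.07, years=10)
print(f"CRF = {crf:.4f}, B/C = {bc:.2f}")
```

The same structure makes it easy to test how sensitive the ratio is to the assumed discount rate or service life.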

Specific performance measures have been used in other evaluations to test the ramp metering system’s impact on other operational strategies. For example, an evaluation conducted in Madison, Wisconsin (included as a case study in Chapter 11) compared the average incident response time both before and after the ramp meters were deployed, to identify efficiency gains in the incident management program attributable to coordination with the ramp metering system.

The U.S. DOT ITS Joint Program Office (ITS JPO) has developed guidelines for conducting evaluations for operational tests and deployments carried out under the Transportation Equity Act for the 21st Century (TEA-21) ITS program. Although not all ramp management deployments are subject to the specific evaluation requirements of this program, the guidelines do provide a valid and implementable analysis structure. Conducting evaluations according to a well-defined, systematic structure helps to ensure that the evaluation meets the needs and expectations of stakeholders.

These evaluation guidelines are typically intended to guide the conduct of “before and after” evaluations looking to estimate the impact of the deployed improvements on the system performance. These guidelines are also intended to provide evaluation results within a consistent reporting framework that will allow the comparison of results from different geographic regions. With minor modification, however, this evaluation framework may be applied to a variety of evaluation types and may be easily scaled to the size of the evaluation effort and available resources. The basic steps in the analysis structure recommended by the ITS JPO guidelines include:

- Forming the Evaluation Team.

- Developing the Evaluation Strategy.

- Developing the Evaluation Plan.

- Developing Detailed Test Plans.

- Collecting and Analyzing Data.

- Documenting Results.

More detailed discussions of the recommended steps are provided below. The specific steps recommended by ITS JPO have been modified slightly to make them more relevant to ramp management evaluations. These evaluation guidelines focus heavily on the systematic development of evaluation plans to guide the conduct of the evaluation effort. Less specific guidance is provided by these guidelines on collecting and analyzing data and on reporting results. Additional discussions of these crucial evaluation tasks are provided in this handbook in Sections 9.3, 9.4, and 9.5.

1. Forming the Evaluation Team. Each of the project partners and stakeholders designates one member to participate on the evaluation team, with one member designated as the evaluation team leader. Experience has demonstrated that formation of this team early in the project is essential to facilitating evaluation planning along a "no surprises" path. Participation by every project stakeholder is particularly crucial during the development of the "Evaluation Strategy."

2. Developing the Evaluation Strategy. The evaluation strategy document includes a description of the project to be evaluated and identifies the key stakeholders committed to the success of the project. It also relates the purpose of the project to the general goal areas. Example project goals may include:

- Traveler safety.

- Traveler mobility.

- Transportation system efficiency.

- Productivity of transportation providers.

- Conservation of energy and protection of the environment.

- Other goals that may be appropriate to unique features of a project.

For any given evaluation, the goal areas must reflect local, regional, or agency transportation goals and objectives. A major purpose of the evaluation strategy document is to focus partner attention on identifying which goal areas have priority for their project. Partners assign ratings of importance to goal areas, and evaluation priorities and resources are consequently aligned to the prioritized set. This rating process gives partners valuable insights regarding areas of agreement and disagreement and assists in reconciling differences and bolstering common causes.
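The rating step can be as simple as averaging each partner's importance scores. The Python sketch below assumes a hypothetical 1-to-5 rating scale, three hypothetical partners, and a proportional split of a hypothetical evaluation budget. It also reports the spread between the highest and lowest rating for each goal area as a rough flag for disagreement among partners.

```python
from statistics import mean

# Hypothetical importance ratings (1 = low, 5 = high); every partner rates every goal area.
ratings = {
    "State DOT": {"Safety": 5, "Mobility": 5, "Efficiency": 3,
                  "Productivity": 2, "Energy/Environment": 2},
    "Transit agency": {"Safety": 4, "Mobility": 4, "Efficiency": 4,
                       "Productivity": 3, "Energy/Environment": 3},
    "City traffic dept.": {"Safety": 5, "Mobility": 3, "Efficiency": 4,
                           "Productivity": 2, "Energy/Environment": 1},
}


def prioritize(ratings: dict[str, dict[str, int]], budget: float):
    """Rank goal areas by average rating and split the budget in proportion to it."""
    goals = list(next(iter(ratings.values())))
    scores = {g: [partner[g] for partner in ratings.values()] for g in goals}
    avg = {g: mean(s) for g, s in scores.items()}
    spread = {g: max(s) - min(s) for g, s in scores.items()}  # large spread = disagreement
    total = sum(avg.values())
    allocation = {g: budget * avg[g] / total for g in goals}
    ranked = sorted(goals, key=lambda g: avg[g], reverse=True)
    return ranked, spread, allocation


ranked, spread, allocation = prioritize(ratings, budget=100_000)  # hypothetical budget
for goal in ranked:
    print(f"{goal:<20} spread={spread[goal]}  budget=${allocation[goal]:,.0f}")
```

A goal area with a high average but a large spread, such as Mobility here, is a natural topic for the reconciliation discussion described above.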

Each of these goal areas can be associated with outcomes of deployment that lend themselves to measurement. These outcomes resulting from project deployment are identified as measures and have been adopted as useful metrics. The association of goal areas and measures is depicted in Table 9‑1.

Table 9‑1: Example Evaluation Goals and Measures

| Goal Area | Measure |
|---|---|
| Safety | Reduction in the overall rate of crashes |
| | Reduction in the rate of crashes resulting in fatalities |
| | Reduction in the rate of crashes resulting in injuries |
| Mobility & Reliability | Reduction in delay |
| | Reduction in travel time variability |
| Public Perception/Acceptance | Improvement in customer satisfaction |
| Improvements in Effective Capacity | Increases in freeway and arterial throughput or effective capacity |
| Cost Savings | Reduction in agency costs |
| Energy & Environment | Decrease in emissions levels |
| | Decrease in energy consumption |

The "few good measures" in the preceding table constitute the framework of benefits expected to result from deploying and integrating ITS technologies (including ramp management). While each project partnership will establish its unique evaluation goals, these measures serve to maintain the focus of goal setting on how the project can contribute to reaping the benefits of one or more of the measures.

3. Developing the Evaluation Plan. After the goals are identified and priorities are set by the partners, the evaluation plan should refine the evaluation approach by formulating hypotheses. Hypotheses are merely "if-then" statements about expected outcomes after the project is deployed. For example, a possible goal of implementing a ramp meter system is improving safety by reducing crashes in merge areas. If the evaluation strategy included this goal, the evaluation plan would formulate hypotheses that could be tested. In this case, one hypothesis might be, "If ramp metering is implemented, vehicle crashes will be reduced in the merge areas." A more detailed hypothesis might suggest that such collisions would be reduced by 10 percent. The evaluation plan identifies all such hypotheses and then outlines the number of different tests that might be needed to test all hypotheses.
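One way to test a crash-reduction hypothesis such as the one above is an exact conditional test comparing two Poisson rates: if crashes in each period are assumed to be Poisson with exposure measured in VMT, then, given the total number of crashes, the "after" count follows a binomial distribution under the null hypothesis. The Python sketch below applies this technique to hypothetical counts. It assumes Poisson-distributed crashes and does not address seasonal or regional effects, which Section 9.2.5 discusses.

```python
from math import comb


def crash_reduction_pvalue(crashes_before: int, vmt_before: float,
                           crashes_after: int, vmt_after: float,
                           ratio: float = 1.0) -> float:
    """One-sided exact conditional test for two Poisson rates.

    H0: rate_after >= ratio * rate_before
    H1: rate_after <  ratio * rate_before  (ratio=0.9 tests "at least 10% fewer")
    Given n total crashes, crashes_after ~ Binomial(n, p0) at the H0 boundary.
    """
    n = crashes_before + crashes_after
    p0 = ratio * vmt_after / (vmt_before + ratio * vmt_after)
    # p-value = P(X <= observed after-period count) under Binomial(n, p0)
    return sum(comb(n, k) * p0**k * (1 - p0) ** (n - k) for k in range(crashes_after + 1))


# Hypothetical merge-area crashes with equal VMT in both periods.
p_any = crash_reduction_pvalue(120, 50e6, 90, 50e6)            # any reduction
p_10 = crash_reduction_pvalue(120, 50e6, 90, 50e6, ratio=0.9)  # at least 10% reduction
print(f"p (any reduction) = {p_any:.3f}, p (>= 10% reduction) = {p_10:.3f}")
```

With these hypothetical counts (a 25 percent drop), the data would support the simple "fewer crashes" hypothesis at the 5 percent level but not the more specific "at least 10 percent fewer" hypothesis, which shows why the hypothesized effect size matters when planning data collection.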

In addition to hypotheses regarding system and subsystem performance, the evaluation plan identifies any qualitative studies that will be performed. The qualitative studies may address key components of the project, such as (but not limited to):

- Consumer acceptance.

- Institutional issues.

- Others as appropriate to local considerations.

4. Developing Detailed Test Plans. A test plan will be needed for each test identified in the evaluation plan. A test plan lays out all of the details regarding how the test will be conducted. It identifies the number of evaluator personnel, equipment and supplies, procedures, schedule, and resources required to complete the test. For ongoing monitoring activities or evaluation activities involving automated data sources, the test plan should identify any database design or data archiving issues.

5. Collecting and Analyzing Data and Information. This step is the implementation of each test plan. It is in this phase that careful cooperation between partners and evaluators can save money. With early planning, it is possible to build automatic data collection capabilities into the project. Partners can use such data after the evaluation is completed to obtain valuable feedback on the performance of the system. This feedback can help detect system failures and improve system performance. Refer to Sections 9.3 and 9.4 for more detail on the data collection and data analysis needs, respectively, to support the evaluation.

6. Documenting Results. The strategy, plans, results, conclusions, and recommendations should be documented in a Final Report. Refer to Section 9.5 for more detail on reporting of results.

| 9.2.5 Controlling Analysis Externalities |


Externalities, such as data collection periods or multiple system installations, can have a distorting impact on performance analysis. This section highlights some of the potential impacts and discusses remedies, so that practitioners can anticipate and minimize the impacts of these externalities in their monitoring and evaluation efforts. Specifically, strategies for controlling two analysis externalities are discussed: data discrepancies due to the passage of time, and data discrepancies related to other system improvements.

Controlling for Data Discrepancies Occurring Over Time

Many evaluation efforts, particularly those relying on the collection and analysis of “before” and “after” data, may be adversely affected by the passage of time. Seasonal and cyclical variations in traffic patterns, as well as regional trends, may all serve to distort data collected in different time periods. This makes it difficult to isolate the impacts of the ramp management implementation from the “background noise.” The best way to control for these externalities is to understand these influences and include plans for addressing them in the evaluation plans.

Prior to initiating a data collection effort, historical data should be analyzed to better understand any seasonal variations and trends affecting traffic patterns. Data collection and analysis plans should be developed to minimize the impact of these variations and to capture performance data during periods with similar characteristics. As a simple mitigating strategy for some before and after studies, evaluators may schedule both the before and after data collection periods as close as possible to the implementation date to minimize the data window. In addition, care should be taken not to use too brief an “after” evaluation period. Immediately after implementation, motorists are still becoming accustomed to the new ramp management strategy, so the impacts may be atypical in these initial stages. Over time, these impacts may stabilize as motorists become more familiar with the strategy. Therefore, the evaluation should be designed to take this initialization period into account.

However, this simple mitigation strategy cannot be used if the evaluators require a longer data collection period or prefer to evaluate the system performance at a sufficient time after the implementation to allow traveler behaviors to change. For these situations, alternative control strategies may be required to normalize the data. In the Twin Cities ramp meter evaluation, historical crash data from the previous five years was analyzed to estimate the number of crashes that would be expected to occur in each of two separate six-week data collection periods. This analysis revealed that seasonal variations resulted in more crashes historically occurring in the second period. These predicted crash rates were compared with the observed data and used to discount this seasonal variation in the second data collection period and avoid the over-estimation of benefits.
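The Twin Cities adjustment is described above only in outline. The Python sketch below shows one simplified way to apply the same idea; it is not the Twin Cities procedure itself. It averages the historical counts for each calendar window, uses their ratio as a seasonal factor, and compares the observed second-period count with the count expected from seasonality alone. All counts are hypothetical.

```python
from statistics import mean


def seasonal_factor(hist_period1: list[int], hist_period2: list[int]) -> float:
    """Ratio of average historical crashes in the period-2 window to the period-1 window."""
    return mean(hist_period2) / mean(hist_period1)


# Hypothetical crashes in the same two six-week calendar windows over five prior years.
hist_p1 = [100, 96, 104, 98, 102]
hist_p2 = [112, 108, 118, 110, 117]

observed_p1, observed_p2 = 105, 130  # hypothetical study-year observations

factor = seasonal_factor(hist_p1, hist_p2)
expected_p2 = observed_p1 * factor  # period-2 count expected from seasonality alone
naive_change = observed_p2 - observed_p1
adjusted_change = observed_p2 - expected_p2
print(f"factor={factor:.2f} naive={naive_change} adjusted={adjusted_change:.1f}")
```

Here more than half of the naive 25-crash difference would be expected from seasonal variation alone, so attributing all of it to the ramp management change would overstate the effect.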

Other mitigation strategies involve the use of control data collected from a corridor or region of the network unlikely to be affected by the ramp management deployment. Any differences between the before and after data observed in the control corridor data may be used to represent regional traffic variations that should be discounted from the data collected in the metered corridor, to avoid including these global variations as benefits of the ramp meters.
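A simple version of the control-corridor approach scales the metered corridor's "before" value by the relative change observed in the control corridor and treats only the remaining difference as the ramp management effect. The Python sketch below applies this ratio adjustment to hypothetical peak-period travel times. An additive (difference-in-differences) form is a common alternative, and the choice between them is an assumption evaluators should document.

```python
def control_adjusted_change(study_before: float, study_after: float,
                            control_before: float, control_after: float) -> dict[str, float]:
    """Ratio-based comparison-group adjustment for a before/after study."""
    background = control_after / control_before  # regional change seen without metering
    expected_after = study_before * background   # study corridor if only that change occurred
    return {
        "naive_change": study_after - study_before,
        "adjusted_change": study_after - expected_after,
        "background_ratio": background,
    }


# Hypothetical peak-period travel times (minutes); regional traffic eased slightly.
result = control_adjusted_change(study_before=30.0, study_after=26.0,
                                 control_before=20.0, control_after=19.0)
print(result)
```

Because travel times improved regionwide in this example, only about 2.5 of the 4 minutes saved would be credited to the ramp meters.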

Controlling for Data Discrepancies Due to Other System Improvements

Another significant externality that may result in data discrepancies is the presence or implementation of other system improvements. For example, if a ramp metering system is deployed simultaneously with an incident management system, it may be impossible to isolate particular impacts attributable to each system. Likewise, construction activity in the freeway corridor or on major parallel surface streets can result in changed travel patterns and negatively affect the overall validity of the analysis.

To control these externalities, evaluators need to identify and understand the potential impact of any other planned system improvements. Prior to implementing any data collection effort, all agencies responsible for managing and maintaining the transportation network in the study region should be contacted to identify any new infrastructure or operational improvements, proposed changes to operational policies or procedures, planned construction or maintenance activities, or any other activities having a possible effect on travel patterns in the study area. To the degree possible, data collection activities should be scheduled around any significant system changes to avoid introducing bias to the data. The phasing of multiple system improvements may also be considered, to provide an opportunity to evaluate the impacts of each improvement separately.

| 9.2.6 Costs of Evaluation and Monitoring |


The costs of evaluation and monitoring are nontrivial and should be carefully considered in the planning of any ramp management application, to ensure that suitable resources are available to successfully conduct these activities now and in the future. The actual costs incurred can be extremely variable depending on the type of evaluation or monitoring activities and the timeframe of the analysis. Agencies conducting these types of efforts have reported costs ranging from under $10,000 to nearly $1 million. Example costs for various evaluation efforts are discussed in several of the case studies presented in Chapter 11.

The cost of data collection for evaluation and monitoring efforts using real-world data can be substantial, often accounting for more than one-half of the total evaluation costs. For studies using advanced traffic analysis tools to evaluate performance, the cost of model development and calibration often accounts for the largest proportion of costs. Other cost items to be considered include:

- Staff labor costs.

- Project management costs.

- Costs associated with developing and updating an evaluation plan.

- Data storage and archiving costs.

- Contracting costs for any outside consultants or researchers.

- Costs of survey activities.

- Costs of developing and distributing reports.

In addition to the type of evaluation or monitoring activities and the timeframe of the analysis, there are a number of additional factors that may affect the cost and resources required. These factors include, but are not limited to:

- Number and geographic distribution of ramp locations.

- Availability and reliability of automated real-time performance data.

- Availability of archived historical performance data and pre-existing data management structures.

- Availability of calibrated traffic analysis tools or models for the analysis region.

- Familiarity of staff in developing and implementing evaluation and monitoring plans.


| 9.3 Data Collection |

This section provides practical guidance on the collection of data required to support the evaluation of ramp management strategies. The collection of data should be related to the performance measures presented earlier in Section 9.2.3. Further information on data collection methods can be found in the Travel Time Data Collection Handbook or the Traffic Engineering Handbook. [8], [9]

Practical experience from the Twin Cities Ramp Metering Evaluation and other real-world analysis efforts is used where appropriate to illustrate these guidelines.

As discussed in Section 9.2, data collection methodologies should be considered early in the development of the evaluation strategy and in the identification of performance measures. The data collection methodologies should be carefully defined in the Evaluation Test Plans. These data collection plans should minimally include an identification of individuals responsible for conducting the effort, resource requirements, data management plans, and contingency plans, as appropriate.

The following sections highlight some of the data collection implications that should be considered when attempting to assess ramp management impacts related to merge/weave areas, ramp queuing, freeway operation, and arterial operation. Each discussion focuses on the appropriate performance measures, the analysis data needed, and data collection methods and tools.

The discussion does not attempt to be prescriptive. Instead, various options are presented that may be considered based on the particular needs of the evaluator. Additional discussion on the approach to analyzing data is presented in Section 9.4.

| 9.3.1 Data Collection for Evaluation of Merge/Weave Areas |


When analyzing the impacts in merge/weave areas, one must follow the analytical steps outlined in Section 9.2. The merge/weave area impacts are primarily associated with a localized or corridor study area.

Table 9‑2 provides a summary of data collection efforts associated with evaluating various performance measures within the merge/weave area. Not every performance measure is covered here, because some measures cannot be appropriately captured within the focused merge or weave area. Mobility and travel time reliability measures are omitted from the table because they are better captured when analyzing freeway and arterial operations, and they are discussed further in those sections.

Safety is one of the performance measures that can be evaluated in merge/weave areas. As shown in Table 9‑2, the recommended data collection method uses crash records and traffic volume counts. As discussed in Section 9.2.3, the analysis would involve calculating a crash rate using the number of crashes and the vehicle-miles traveled (VMT). The use of videotape is another method to monitor safety conditions by analyzing the number of conflicts (near-crash events).

Environmental performance measures can also be evaluated using travel time runs or “hot spot” detection. “Hot spot” detection uses sensors and equipment to determine where concentrated levels of carbon monoxide (CO) emissions exist. These methods help determine the change in fuel consumption or emission levels. Again, it is important to note that while emissions may be reduced on the freeway mainline, they may increase on the ramp and therefore, the analysis must take ramp conditions into account.

Table 9‑2: Merge/Weave Area Data Collection Methods

| Performance Measures | Analysis Data Needed | Data Collection Methods and Tools |
|---|---|---|
| Safety – Crash Rate | Number of crashes | Crash records |
| | VMT | Manual or automatic traffic volume counts |
| Safety – Number of Conflicts | Observation of conflicting movements | Field observation or videotape |
| Throughput – Traffic Volumes | Observed traffic volumes | Manual or automatic traffic volume counts |
| Facility Speeds | Spot speed measurements | Automated speed collection (e.g., loop detector, acoustic, radar, etc.) |
| Environmental – Fuel Consumption | Vehicle speeds and acceleration profiles | Travel time runs (GPS-equipped) |
| Environmental – Vehicle Emissions | Observed emissions | Hot spot detection |

| 9.3.2 Data Collection for Evaluation of Ramp Operations |


Table 9‑3 provides a summary of data collection efforts associated with evaluating ramp operations. This particular analysis often makes use of ramp queue observations that record when vehicles join the rear of the queue and when vehicles are released, or ramp queue counts that periodically record the number of vehicles in queues. From this data, a number of performance measures may be estimated, as shown in the table. These queue counts may be collected manually, or through the use of automated detection where appropriately equipped.

The analysis of ramp queuing impacts is primarily conducted at a localized or corridor level, though some evaluations may analyze queuing on a regional scale. Performance measures such as mobility and travel time reliability can be evaluated with respect to ramp conditions. Manual or automated ramp queue counts can provide information about ramp delay and queue length (including the average value, standard deviation, and maximum observed levels). Analysis of this information can show whether the ramp management strategy is reducing travel time variability and therefore making travel more predictable for motorists.
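The ramp delay statistics and the queue spillover measure listed in Table 9‑3 can be derived from the two kinds of queue data described above. The Python sketch below assumes hypothetical observation formats: per-vehicle (join time, release time) pairs in seconds, and periodic queue counts compared against a hypothetical storage length expressed in vehicles.

```python
from statistics import mean, stdev


def ramp_delay_stats(observations: list[tuple[float, float]]) -> dict[str, float]:
    """Delay per vehicle = release time minus the time it joined the rear of the queue (s)."""
    delays = [release - join for join, release in observations]
    return {"mean_s": mean(delays), "stdev_s": stdev(delays), "max_s": max(delays)}


def percent_time_spillover(queue_counts: list[int], storage_vehicles: int) -> float:
    """Share of periodic counts in which the queue exceeded the ramp's storage,
    i.e., likely backed into the adjacent arterial intersection."""
    spills = sum(1 for q in queue_counts if q > storage_vehicles)
    return 100 * spills / len(queue_counts)


# Hypothetical per-vehicle observations: (joined queue, released by meter), in seconds.
obs = [(0, 30), (5, 50), (12, 60), (20, 65), (31, 90)]
# Hypothetical queue counts taken every 30 seconds; storage of 13 vehicles assumed.
counts = [3, 8, 12, 15, 9, 16, 4, 2, 14, 11]

print(ramp_delay_stats(obs))
print(percent_time_spillover(counts, storage_vehicles=13))
```

Comparing the standard deviation of ramp delay before and after a change in metering strategy is one direct way to check the variability question raised above.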

| 9.3.3 Data Collection for Evaluation of Freeway Operations |


Table 9‑4 provides a summary of data collection efforts associated with the evaluation of performance measures related to freeway operations. Many of the data collection methods used for merge/weave areas and ramp operations also apply to freeway operations. Throughput, for example, can be evaluated using manual or automated traffic counts, travel time runs, or automated speed collection. Automated speed collection can in turn use a variety of vehicle detection technologies: Doppler microwave, active infrared, and passive infrared devices have a “point-and-shoot” type of setup, whereas passive magnetic, radar, passive acoustic, and pulse ultrasonic devices require some adjustment once mounted. Electronic toll tags read at multiple points can also provide travel time information, which can be converted to speed data. [10]
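Converting toll tag reads to speeds requires matching each tag seen at an upstream reader with its read at a downstream reader. The Python sketch below assumes hypothetical inputs: one read per tag per reader (a dictionary of tag ID to timestamp in seconds), a known reader spacing, and an arbitrary 30-minute cutoff for discarding trips that probably left and re-entered the corridor.

```python
from statistics import median


def segment_speeds_mph(upstream: dict[str, float], downstream: dict[str, float],
                       distance_mi: float, max_travel_time_s: float = 1800) -> list[float]:
    """Speeds (mph) for tags read at both readers, upstream first.
    max_travel_time_s is a hypothetical outlier cutoff, not a standard value."""
    speeds = []
    for tag, t_up in upstream.items():
        t_down = downstream.get(tag)
        if t_down is None or t_down <= t_up:
            continue  # not matched downstream, or timestamps out of order
        travel_time_s = t_down - t_up
        if travel_time_s > max_travel_time_s:
            continue  # likely an off-corridor stop; drop as an outlier
        speeds.append(distance_mi / (travel_time_s / 3600))
    return speeds


# Hypothetical reads (seconds) at two readers 2.5 miles apart.
up = {"A1": 0, "B2": 10, "C3": 20, "D4": 30}
down = {"A1": 180, "B2": 160, "D4": 2400, "E5": 50}

speeds = segment_speeds_mph(up, down, distance_mi=2.5)
print(speeds, median(speeds))
```

Summarizing with the median rather than the mean limits the influence of any outliers that survive the cutoff.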

In any case, these methods can collect traffic volume or spot speed data that can be analyzed to determine level-of-service, volume-to-capacity (V/C) ratios, or facility speeds. These values are directly tied to facility throughput.
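As an example of turning volume counts into a V/C ratio, the Python sketch below converts four consecutive 15-minute counts to a peak flow rate (four times the highest 15-minute count, following the Highway Capacity Manual peak hour factor convention) and divides by capacity. The per-lane capacity is a hypothetical placeholder, and the sketch ignores the heavy-vehicle and other adjustments that a full HCM analysis would apply.

```python
def peak_hour_factor(counts_15min: list[int]) -> float:
    """Hourly volume divided by four times the highest 15-minute count."""
    if len(counts_15min) != 4:
        raise ValueError("expected four consecutive 15-minute counts")
    return sum(counts_15min) / (4 * max(counts_15min))


def vc_ratio(counts_15min: list[int], capacity_per_lane: float, lanes: int) -> float:
    """Peak 15-minute flow rate (veh/h) divided by directional capacity (veh/h)."""
    flow_rate = sum(counts_15min) / peak_hour_factor(counts_15min)  # equals 4 x max count
    return flow_rate / (capacity_per_lane * lanes)


# Hypothetical counts for one direction of a three-lane freeway segment.
counts = [1500, 1620, 1580, 1400]
phf = peak_hour_factor(counts)
vc = vc_ratio(counts, capacity_per_lane=2400, lanes=3)  # capacity value is an assumption
print(f"PHF = {phf:.2f}, V/C = {vc:.2f}")
```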

| 9.3.4 Data Collection for Evaluation of Arterial Operations |


Table 9‑5 provides a summary of data collection efforts associated with the evaluation of performance measures for arterial operations. Many of the data collection activities are related to capturing the impact of drivers diverting from the freeway as a result of ramp metering or another ramp management strategy. Although most of the highlighted data collection activities are intended to be performed on the arterial facilities, some performance measures may be supported with data collected at the ramp facilities or on the freeway. Each of the data collection methods shown in this table has been discussed previously in Sections 9.3.1 through 9.3.3.

Table 9‑3: Ramp Condition Data Collection Methods

| Performance Measures | Analysis Data Needed | Data Collection Methods and Tools |
|---|---|---|
| Safety – Crash Rate | Number of crashes | Crash records |
| | VMT | Manual or automatic traffic volume counts |
| Mobility – Travel Time/Ramp Delay | Seconds of ramp delay | Manual or automated ramp queue counts |
| Reliability – Travel Time Variation | Standard deviation in seconds of ramp delay | Manual or automated ramp queue counts |
| Throughput – Volume | Ramp volumes | Manual or automated ramp volume counts |
| Queue Spillover | Percent of time ramp queue impacts adjacent arterial intersections | Manual or video observation of ramp queue lengths |
| Environmental – Fuel Consumption | Vehicle speeds and acceleration profiles | Travel time runs, or manual or automated ramp queue counts or ramp queue observations |
| Environmental – Vehicle Emissions | Observed emissions | Hot spot detection |
| | Vehicle speeds and acceleration profiles | Travel time runs, or manual or automated ramp queue counts or ramp queue observations |

Table 9‑4: Freeway Operations Data Collection Methods

| Performance Measures | Analysis Data Needed | Data Collection Methods and Tools |
|---|---|---|
| Safety – Crash Rate | Number of crashes | Crash records |
| | VMT | Manual or automatic traffic volume counts |
| Mobility – Travel Time | Observed travel times and speeds | Travel time runs, or speeds from multiple detection sites |
| Mobility – Traveler Delay | Observed travel times and free-flow travel times | Travel time runs, or speeds from multiple detection sites |
| Travel Time Reliability | Observed variability in travel times or speeds | Travel time runs, or speeds from multiple detection sites |
| Throughput – Volume | Observed traffic volumes | Manual or automatic traffic volume counts |
| Throughput – Facility Speeds | Spot speed measurements | Travel time runs or automated speed collection (e.g., loop detector, acoustic, radar, etc.) |
| Throughput – LOS or V/C Ratio | Observed traffic volumes | Manual or automatic traffic volume counts |
| | Facility capacity | Estimates from HCM, or manual or automatic traffic volume counts |
| Environmental – Fuel Consumption | Vehicle speeds, volumes and acceleration profiles | Travel time runs (GPS equipped) or manual or automatic traffic volume counts |
| Environmental – Vehicle Emissions | Vehicle speeds, volumes and acceleration profiles | Travel time runs (GPS equipped) or manual or automatic traffic volume counts |
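Several freeway measures in Table 9‑4 are simple arithmetic on the listed data. Traveler delay is observed travel time minus free-flow travel time. Travel time variability can be summarized by a standard deviation. A crash rate normalizes the number of crashes by VMT. The sketch below makes several assumptions: free-flow travel time is computed from segment length and an assumed free-flow speed; VMT is estimated as AADT × segment length × days in the study period; and the crash rate is expressed per million VMT, although some agencies use 100 million VMT instead. All inputs are hypothetical.

```python
from statistics import mean, stdev


def free_flow_time_s(length_mi, free_flow_mph):
    """Free-flow travel time in seconds for a segment."""
    return 3600.0 * length_mi / free_flow_mph


def delay_measures(run_times_s, length_mi, free_flow_mph, volume_veh):
    """Summarize travel time runs (at least two) for one freeway segment.

    Returns mean travel time (s), per-vehicle delay (s), total delay
    (vehicle-hours) for the given volume, and the sample standard deviation
    of travel time (s) as a simple reliability indicator.
    """
    avg_tt = mean(run_times_s)
    # Delay is the excess of observed over free-flow travel time, floored at 0
    per_veh_delay = max(0.0, avg_tt - free_flow_time_s(length_mi, free_flow_mph))
    veh_hours = per_veh_delay * volume_veh / 3600.0
    return avg_tt, per_veh_delay, veh_hours, stdev(run_times_s)


def crash_rate_per_mvmt(crashes, aadt, length_mi, days=365):
    """Crashes per million vehicle-miles traveled over the study period."""
    vmt = aadt * length_mi * days
    return crashes * 1_000_000 / vmt


# Hypothetical 5-mile segment, 60 mph free-flow speed, three travel time runs
print(delay_measures([420, 480, 360], 5.0, 60.0, volume_veh=3000))
print(crash_rate_per_mvmt(crashes=73, aadt=50_000, length_mi=2.0))
```

A real evaluation would use many more runs, or detector-based speeds, for each time period, and would compute the same measures before and after the ramp management strategy is deployed.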

Table 9‑5: Arterial Operations Data Collection Methods

| Performance Measures | Analysis Data Needed | Data Collection Methods and Tools |
|---|---|---|
| Safety – Crash Rate | Number of crashes | Crash records |
| | VMT | Manual or automatic traffic volume counts |
| Mobility – Travel Time | Observed travel times and speeds | Travel time runs, or speeds from multiple detection sites |
| Mobility – Traveler Delay | Observed travel times and free-flow travel times | Travel time runs, or speeds from multiple detection sites |
| Travel Time Reliability | Observed variability in travel times or speeds | Travel time runs, or speeds from multiple detection sites |
| Throughput – Volume | Observed traffic volumes | Manual or automatic traffic volume counts |
| Throughput – Facility Speeds | Spot speed measurements | Travel time runs or automated speed collection (e.g., loop detector, acoustic, radar, etc.) |
| Throughput – Arterial LOS or V/C Ratio | Observed traffic volumes | Manual or automatic traffic volume counts |
| | Facility capacity | Estimates from HCM, or manual or automatic traffic volume counts |
| Throughput – Intersection LOS | Observed traffic volumes | Manual or automatic traffic volume counts |
| | Signal timing settings | Signal timing settings from local agencies |
| Queue Spillover | Percent of time ramp queue impacts adjacent arterial intersections | Manual or video observation of ramp queue lengths |
| Environmental – Fuel Consumption | Vehicle speeds, volumes and acceleration profiles | Travel time runs (GPS equipped) or manual or automatic traffic volume counts |
| Environmental – Vehicle Emissions | Vehicle speeds, volumes and acceleration profiles | Travel time runs (GPS equipped) or manual or automatic traffic volume counts |
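Intersection LOS in Table 9‑5 is derived from average control delay. Control delay is estimated with HCM procedures, using observed volumes and signal timing settings, or is measured in a field study. Once it is known, assigning the LOS grade is a threshold lookup. The sketch below assumes the HCM signalized intersection control-delay thresholds of 10, 20, 35, 55, and 80 s/veh; confirm them against the HCM edition your agency uses. Some editions also use the v/c ratio to assign LOS F, which this sketch does not model. The volumes, capacities, and delays are hypothetical.

```python
import bisect

# Upper bounds of average control delay (s/veh) for LOS A through E at
# signalized intersections (HCM thresholds); anything above 80 s/veh is LOS F.
SIGNALIZED_LOS_BOUNDS_S = [10.0, 20.0, 35.0, 55.0, 80.0]
LOS_GRADES = "ABCDEF"


def signalized_intersection_los(control_delay_s):
    """Map average control delay (s/veh) to an LOS letter."""
    if control_delay_s < 0:
        raise ValueError("control delay cannot be negative")
    # bisect_left keeps a delay exactly on a boundary in the better grade
    return LOS_GRADES[bisect.bisect_left(SIGNALIZED_LOS_BOUNDS_S, control_delay_s)]


def vc_ratio(volume_vph, capacity_vph):
    """Volume-to-capacity ratio for an arterial link or lane group."""
    if capacity_vph <= 0:
        raise ValueError("capacity must be positive")
    return volume_vph / capacity_vph


# Hypothetical before/after control delays at an intersection near a metered ramp
for label, delay in [("before", 32.0), ("after", 41.5)]:
    print(label, signalized_intersection_los(delay))
print(round(vc_ratio(1350, 1800), 2))
```

A before/after comparison of this kind is one way to show whether traffic diverting from a metered ramp degrades nearby arterial intersections.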


| 9.4 Data Analysis |

This section provides practical guidance on the analyses needed to support performance evaluation of ramp management strategies. The data analysis procedures and tools discussed in this section build on the data collection strategies discussed in Section 9.3.

| 9.4.1 Analysis Techniques and Tools |


Most ramp management evaluation efforts use a combination of analysis techniques. The analysis often combines field measurement data with one or more traffic analysis tools or models. These tools and models may be used to supplement field measurements, or to substitute for them when field data are unavailable. Recent advances in data management technology have improved the accuracy, functionality, and usefulness of both modeling and measurement processes. Future advances will likely provide further opportunities to improve and integrate these tools.

This subsection discusses some of the general implications of using modeling tools rather than direct field measurement, summarizes the categories of available modeling and analysis tools, and provides guidance on which analysis techniques are most appropriate for different analysis scenarios.

Modeling vs. Measurement

The discussion in this section, comparing the relative strengths and limitations of using traffic models or field measurement in the analysis of ramp management impacts, was adapted from a draft version of the National State of Congestion Report developed for FHWA. [11]

A common rule of thumb in analyzing congestion is: “Measure where you can, model everything else.” This recognizes that measurement using operations data often offers the best combination of accuracy and detail. However, measurement is often not feasible because of limited data availability, coverage, quality, or standardization. In these situations, modeling may be the better option. Whether using one or both of these processes, it is important to understand that modeling and measurement each have relative strengths and weaknesses. In general:

- Modeling provides an estimate of what would likely happen as a result of a particular change in the system, assuming that individuals react as they have in the past.

- Measurement provides an accurate assessment of what has happened or what is happening (for real-time systems), but is less able to support conclusions about what will happen.

Table 9‑6 provides additional detail on the relative advantages and limitations of these two approaches to analyzing congestion.

Table 9‑6: Relative Advantages and Limitations of Modeling Versus Measurement

| | Advantages | Limitations |
|---|---|---|
| Modeling | • Provides predictive capabilities.<br>• Once developed, can provide rapid analysis of multiple scenarios.<br>• Can be developed to provide micro- or macro-level analysis.<br>• Technology advances in data management are enabling more advanced and accurate models. | • Only as good as the data used to develop the models.<br>• Only provides an estimate of the real world; results must be calibrated against observed data.<br>• Difficult to predict travelers’ reactions to unique conditions or innovative strategies.<br>• Initial model development can be costly. |
| Measurement | • Provides a more accurate assessment of actual conditions.<br>• Can be used to analyze traveler reactions to specific conditions or unique events.<br>• Technology advances in data collection and data management are providing improved measurements. | • Data availability and quality issues may limit the usefulness of the data.<br>• Extensive data collection programs or systems can be costly to implement. |

Because models are based on observed behaviors, they are most accurate for predictable conditions. Using models to analyze extreme conditions, innovative operations strategies, or situations in which traveler behavior would be unpredictable is less advisable. When traffic conditions are extremely unpredictable, modeling should be used only if measurement is cost prohibitive.

Figure 9‑1 shows the trade-offs between the relative cost of the analysis and the conditions being analyzed, demonstrating the general areas of strength for both models and measurement.

Figure 9-1: Modeling Versus Measurement – When Should They Be Used? [12]

Many agencies still view modeling and measurement as mutually exclusive processes with different end uses. However, a growing number of agencies are integrating the two processes to provide even more powerful tools for analyzing congestion.

Examples of the benefits that can be achieved through the integration of measurement and models include:

- Data sets obtained through measurement can be used in the development and calibration of models.

- Models can be tied to real-time data measurement to add the capability of predicting future conditions based on current real-world conditions.

- Models can be used to extrapolate localized measurement data to a regional scale.

- Data generated by models can also be used for sensitivity testing, as a reality check on measurement tools and data sets, to help identify potentially erroneous data or alert personnel to inoperative data collection equipment.
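Two of the integration benefits listed above, calibrating models with measured data and using model output as a reality check on detectors, can both begin with a station-by-station comparison of modeled and counted volumes. A widely used statistic for this comparison is GEH = sqrt(2(M − C)² / (M + C)), where M is the modeled hourly volume and C is the counted hourly volume. A GEH below 5 is a common calibration target. The sketch assumes hourly volumes keyed by a hypothetical station ID and a threshold of 5. A large GEH may point either to a model that needs calibration or to a detector reporting bad data, so each flagged station still needs engineering review.

```python
from math import sqrt


def geh(model_vph, count_vph):
    """GEH statistic comparing a modeled and a counted hourly volume."""
    total = model_vph + count_vph
    if total == 0:
        return 0.0  # both volumes zero: no discrepancy
    return sqrt(2.0 * (model_vph - count_vph) ** 2 / total)


def flag_stations(volumes, threshold=5.0):
    """Return sorted IDs of stations whose GEH meets or exceeds the threshold.

    volumes maps a station ID to a (modeled_vph, counted_vph) pair.
    """
    return sorted(sid for sid, (m, c) in volumes.items() if geh(m, c) >= threshold)


def share_within(volumes, threshold=5.0):
    """Fraction of stations whose GEH is below the threshold."""
    ok = sum(1 for m, c in volumes.values() if geh(m, c) < threshold)
    return ok / len(volumes)


# Hypothetical hourly volumes; S3 reports zero, as a failed detector might
stations = {"S1": (1100, 1000), "S2": (1500, 1000), "S3": (900, 0), "S4": (980, 1000)}
print(flag_stations(stations))
print(share_within(stations))
```

The same comparison serves both purposes. During model development, the flagged stations guide calibration. Once the model is trusted, a station that suddenly begins to fail suggests checking the detector.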

Available Traffic Analysis Tools

A number of tools and models are available to assist in the evaluation of ramp management applications. These tools range from simple spreadsheet-based tools to complex microsimulation models. Each tool has strengths and weaknesses, and each is better suited to some situations than to others.

Recognizing that little guidance has been available to help planners and engineers understand and select among the various tools, FHWA recently developed a detailed assessment of the available traffic analysis tools. The following excerpt from Traffic Analysis Toolbox Volume 1: Traffic Analysis Tools Primer [3] provides an overview of available analysis tools that may be applicable to ramp management evaluation. The primer also discusses the relative strengths and limitations of the various tools.

“To date, numerous traffic analysis methodologies and tools have been developed by public agencies, research organizations, and consultants. Traffic analysis tools can be grouped into the following categories:

- Sketch-Planning Tools: Sketch-planning methodologies and tools produce general order-of-magnitude estimates of travel demand and traffic operations in response to transportation improvements. They allow for the evaluation of specific projects or alternatives without conducting an in-depth engineering analysis. Such techniques are primarily used to prepare preliminary budgets and proposals, and are not considered to be a substitute for the detailed engineering analysis often needed later in the project implementation process. Sketch-planning approaches are typically the simplest and least costly of the traffic analysis techniques. Sketch-planning tools perform some or all of the functions of other analytical tool types, using simplified analysis techniques and highly aggregated data. However, sketch-planning techniques are usually limited in scope, analytical robustness, and presentation capabilities.

- Travel Demand Models: Travel demand models have specific analytical capabilities, such as the prediction of travel demand and the consideration of destination choice, mode choice, time-of-day travel choice, and route choice, and the representation of traffic flow in the highway network. These are mathematical models that forecast future travel demand based on current conditions, and future projections of household and employment characteristics. Travel demand models were originally developed to determine the benefits and impacts of major highway improvements in metropolitan areas. However, they were not designed to evaluate travel management strategies such as Transportation System Management (TSM), Intelligent Transportation Systems (ITS), or other operational strategies, including ramp management. Travel demand models only have limited capabilities to accurately estimate changes in operational characteristics (e.g., speed, delay, and queuing) resulting from implementation of TSM and other operational strategies. These inadequacies generally occur because of the poor representation of the dynamic nature of traffic in travel demand models.

- Analytical/Deterministic Tools (HCM-based): Most analytical/deterministic tools implement the procedures of the Highway Capacity Manual (HCM).2 These tools quickly predict capacity, density, speed, delay, and queuing on a variety of transportation facilities and are validated with field data, laboratory test beds, or small-scale experiments. Analytical/deterministic tools are good for analyzing the performance of isolated or small-scale transportation facilities; but are limited in their ability to analyze network or system effects.

- Traffic Signal Optimization Tools: Traffic signal optimization tools are primarily designed to develop optimal signal phasing and timing plans for isolated signalized intersections, arterial streets, or signal networks. This may include capacity calculations; cycle length; and split optimization including left turns; and coordination/offset plans. These can be important for the signal timing aspect of ramp terminal treatments. Some optimization tools can also be used for optimizing ramp metering rates for freeway ramp control.

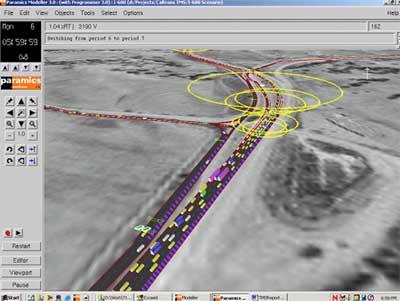

- Macroscopic Simulation Models: Macroscopic simulation models are based on the deterministic relationships of the flow, speed, and density of the traffic stream. The simulation in a macroscopic model takes place on a section-by-section basis rather than by tracking individual vehicles. Macroscopic models have considerably less demanding computer requirements than microscopic models. However, they cannot analyze transportation improvements in as much detail as microscopic models can.