Transportation Management Centers: Streaming Video Sharing and Distribution - Final Report

Chapter 6. Technical Considerations

Technical challenges associated with closed-circuit television (CCTV) streaming vary from transportation management center (TMC) to TMC based on the agency's information technology (IT) capabilities, network configuration and robustness, and system maturity. For most agencies, CCTV management is a core capability of their system, and expanding that capability to share those streams, while potentially costly and time consuming, is straightforward.

Networking

What sets video streaming apart from other data transfers at the TMC is that it requires transferring significantly more data. The amount of data depends on the video resolution, compression algorithm, bitrate, and other factors, and can range from a few megabytes (MB) to hundreds of MB per minute of video.
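Bitrates are quoted in megabits per second, while data volumes are usually counted in megabytes. A small conversion helps put stream sizes in perspective. The Python sketch below assumes illustrative bitrates of 1.5 Mbps (SD), 6 Mbps (HD), and 25 Mbps (4K). These figures are assumptions for illustration, not values from this report.

```python
# Sketch: convert a constant stream bitrate into data volume.
# The bitrates used below are illustrative assumptions, not measured values.

BITS_PER_BYTE = 8


def megabytes_per_minute(bitrate_mbps: float) -> float:
    """Data volume (MB) of one minute of video at a constant bitrate (Mbps)."""
    return bitrate_mbps * 60 / BITS_PER_BYTE


def kilobytes_per_frame(bitrate_mbps: float, fps: float) -> float:
    """Average compressed frame size (KB) at a given bitrate and frame rate."""
    return bitrate_mbps * 1000 / BITS_PER_BYTE / fps


for label, mbps in [("SD", 1.5), ("HD", 6.0), ("4K", 25.0)]:
    print(f"{label}: {megabytes_per_minute(mbps):.1f} MB/min, "
          f"{kilobytes_per_frame(mbps, 30):.1f} KB/frame at 30 fps")
```

At these assumed rates, a single compressed frame is only tens of kilobytes. The per-minute volume is what adds up across many cameras and partners.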

To make streaming video useful for TMC operations and for sharing partners and third parties, agencies must deploy and configure a flexible network that can carry this massive amount of streaming data while keeping the network secure and reliable and ensuring that video quality remains high enough to be useful.

Another related challenge TMCs face is that the network used to transfer streaming video from the field to the TMC and from TMC to sharing partners is usually the same network used to manage all other network traffic, such as advanced traffic management systems (ATMS) data transfers and general internet access. It is critically important to ensure that video stream sharing does not negatively impact the TMC's internal operations and communications.

Network Configuration and Documentation

The first networking consideration in the context of video stream sharing is determining how the existing network is configured and documented. The agency network must connect to field cameras to allow streaming video to transfer from the field to the TMC. Once the streams are in the TMC, they are distributed internally for operational use and shared separately with third parties. There are multiple ways to configure the network to achieve this arrangement. An exhaustive list of potential configurations is outside the scope of this report given the endless variations in each agency's network equipment, network maturity, etc. However, several typical approaches are outlined here.

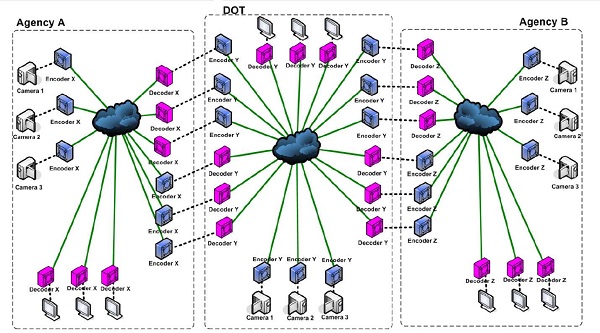

The many-to-many configuration addresses each sharing partner connection separately. For example, sharing video with Agency A requires the TMC to deploy a set of encoders at the TMC, decoders at Agency A, and a connection between these encoders and decoders. Similarly, if the TMC wants to share video streams with Agency B, it establishes another set of encoders, decoders, and connections.

© 2012 Maryland Department of Transportation, State Highway Administration


Figure 4. Diagram. Example of an older many-to-many stream-sharing configuration.

Source: CHART Submission for 2012 Digital State-Final, Maryland Department of Transportation, State Highway Administration.

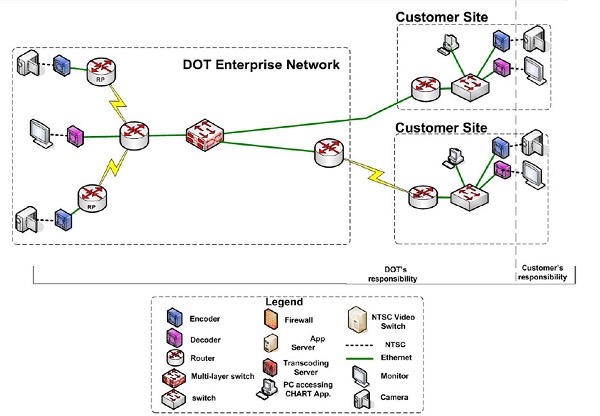

To support the many-to-many configuration, the network must be extended to reach across agencies. This can be accomplished in several ways, but one common way is to deploy agency network equipment at partner agency sites.

© 2012 Maryland Department of Transportation, State Highway Administration

Figure 5. Diagram. Example of many-to-many network configuration.

Source: CHART Submission for 2012 Digital State-Final, Maryland Department of Transportation, State Highway Administration.

The key challenges in this configuration are scalability and security. The configuration does not scale well because each new sharing partner requires new equipment installed at an off-site location, increasing both cost and maintenance overhead. Security is also weaker because each of the many potential entry points into the network must be maintained and patched regularly.

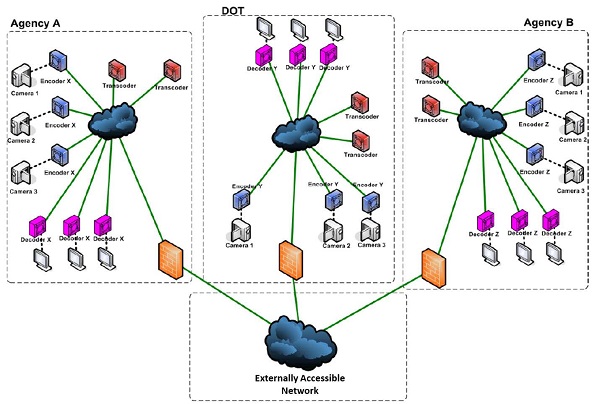

© 2012 Maryland Department of Transportation, State Highway Administration

Figure 6. Diagram. Example of one-to-many stream-sharing configuration.

Source: CHART Submission for 2012 Digital State-Final, Maryland Department of Transportation, State Highway Administration.

An alternative approach is to perform video encoding once inside the network and publish streams to a segmented network that is accessible to authorized third-party sharing partners. This approach reduces the cost of hardware installation and deployment while improving security by limiting network exposure to a single segmented part of the network.

Individual components of many-to-many or one-to-many models can be configured and deployed by internal IT and networking staff, or administered and managed by a consultant. Alternatively, some or all components of a stream sharing system can be offloaded to a hosting provider that takes responsibility for pulling in streams and building out the network capability to share those streams among the agency and its sharing partners without modifying the agency's existing network. This hosting model is becoming more popular because of lower maintenance overhead and a lower overall cost resulting from economies of scale.

Regardless of network configuration, it is critical for agencies to document their configuration to ensure an overall understanding of network complexity. Changes to individual components of a network often have subtle, cascading effects elsewhere in the network, with symptoms sometimes not appearing for a long time or until the network is compromised in some way. To avoid these negative impacts, agencies must document their network configuration and keep the documentation relevant and up to date. Finally, stream sharing negotiations and memoranda of understanding (MOUs) are often easier when clear documentation of the network configuration helps answer logistics and security questions.

Bandwidth Estimation

Bandwidth represents the amount of data that can be sent across the network per unit of time and is usually measured in megabits per second (Mbps). Note that megabits (Mb) are not the same as megabytes (MB), which are generally used to measure storage capacity (1 megabyte = 8 megabits, or 1 MB = 8 Mb). Network bandwidth is a critical measure for video stream sharing because it directly limits how much video can be shared. For example, without any special configuration, a network with 100 Mbps of bandwidth can handle roughly 65 standard definition streams at one time, although the exact number depends on stream resolution, quality, frame rate, and other factors that affect data payload size. Note that this is a theoretical limit. The actual number of streams may be lower because of other variables such as network latency, network protocol overhead, and other traffic on the network.
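The capacity estimate above is simple division. The Python sketch below makes it explicit and adds an optional reserve for overhead and other traffic. The 1.5 Mbps per standard definition stream and the 20 percent reserve are assumptions chosen for illustration. With nothing reserved, 100 Mbps divided by 1.5 Mbps gives 66 streams, which is in line with the rough figure of 65.

```python
import math


def megabytes_to_megabits(megabytes: float) -> float:
    """1 MB = 8 Mb (decimal units, as used for network rates)."""
    return megabytes * 8


def max_concurrent_streams(link_mbps: float, per_stream_mbps: float,
                           reserved_fraction: float = 0.0) -> int:
    """Theoretical ceiling on simultaneous streams over one link.

    reserved_fraction is an assumed planning allowance for protocol overhead
    and other TMC traffic (0.0 reserves nothing).
    """
    usable_mbps = link_mbps * (1.0 - reserved_fraction)
    return math.floor(usable_mbps / per_stream_mbps)


# Assumed: about 1.5 Mbps per standard definition stream.
print(max_concurrent_streams(100, 1.5))        # ceiling with nothing reserved
print(max_concurrent_streams(100, 1.5, 0.2))   # keep 20% for other traffic
```

Rounding down with `math.floor` is deliberate, because a partial stream cannot be delivered.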

Given the direct correlation between network bandwidth and stream sharing capability, it is critical for TMCs to accurately estimate bandwidth utilization and requirements before embarking on video stream sharing activities. Improper estimation can put the entire TMC network in jeopardy of becoming overloaded to the point where other critical operational capabilities are impacted.

If TMC operations and the video sharing network share the same bandwidth, one approach to estimation is to analyze bandwidth utilization during normal TMC operations to establish baseline usage figures. Then, using the number of available camera streams, it is possible to estimate both average and peak utilization, which may depend on the specific sharing solution and the number of sharing partners. The total necessary bandwidth is the sum of the baseline TMC bandwidth and the peak stream sharing bandwidth. The key is to evaluate situations that may cause larger-than-normal demand on the network, such as major or long-lasting weather events, when sharing partners and the public may be actively streaming feeds from many cameras in a region. If bandwidth is estimated only from average conditions, the TMC risks having its network brought down during major events, when camera streams are most valuable.
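The estimation approach described above can be sketched as follows: baseline TMC usage plus per-stream load times concurrent streams, evaluated for both an average scenario and a major-event scenario. The baseline, per-stream bitrate, and stream counts are hypothetical placeholders. An agency would substitute its own measured values.

```python
from dataclasses import dataclass


@dataclass
class SharingScenario:
    name: str
    concurrent_streams: int  # streams pulled by partners and the public at once


def total_bandwidth_mbps(baseline_mbps: float, per_stream_mbps: float,
                         scenario: SharingScenario) -> float:
    """Baseline TMC traffic plus stream-sharing load for one scenario."""
    return baseline_mbps + per_stream_mbps * scenario.concurrent_streams


BASELINE_MBPS = 40.0    # hypothetical: measured normal TMC operations usage
PER_STREAM_MBPS = 1.5   # hypothetical: normalized SD stream bitrate
scenarios = [
    SharingScenario("average weekday", 20),
    SharingScenario("major winter storm", 60),  # hypothetical peak demand
]

for s in scenarios:
    need = total_bandwidth_mbps(BASELINE_MBPS, PER_STREAM_MBPS, s)
    print(f"{s.name}: {need:.0f} Mbps")

# Size the network for the worst case, not the average.
design_mbps = max(total_bandwidth_mbps(BASELINE_MBPS, PER_STREAM_MBPS, s)
                  for s in scenarios)
print(f"Design bandwidth: {design_mbps:.0f} Mbps")
```

Taking the maximum over scenarios reflects the point above: a design based on the average weekday would fall short during the storm.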

Bandwidth is affected by multiple factors, including the upstream and downstream (upload and download) bandwidth provided by the Internet Service Provider (ISP), network switch ratings, firewall throughput, physical cable ratings, etc. This means that once bandwidth estimates are available, the TMC must ensure that every component of the network can support that bandwidth. If an ISP provides 50 Mbps/100 Mbps up/down bandwidth, but key network components support only 1 Mbps of traffic, the TMC will be unable to take advantage of all available bandwidth.
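Because traffic must pass through every component on its path, usable bandwidth is set by the lowest-rated component. The Python sketch below finds that bottleneck for an outbound sharing path. Shared streams leave the TMC, so the ISP upstream rating applies. The component names and ratings are hypothetical and loosely follow the 50 Mbps upstream and 1 Mbps component example in the paragraph.

```python
from typing import Dict, Tuple


def bottleneck(path: Dict[str, float]) -> Tuple[str, float]:
    """Return the lowest-rated component on a path and its rating in Mbps."""
    name = min(path, key=lambda component: path[component])
    return name, path[name]


# Hypothetical outbound path from the TMC to sharing partners (Mbps).
outbound_path = {
    "isp_upstream": 50.0,
    "core_switch": 1000.0,
    "firewall": 1.0,        # the undersized key component
    "edge_router": 1000.0,
}

component, mbps = bottleneck(outbound_path)
print(f"Usable outbound bandwidth: {mbps} Mbps (limited by {component})")
```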

Latency

Network latency is the delay in communications across the network. Latency can be introduced at multiple places in the network. For example, poorly configured network switches or overly aggressive firewall packet inspection can introduce significant latency, which increases the time it takes for data to reach its destination.

When it comes to video stream sharing, latency usually measures how far behind "live" the stream is: the delay between when the camera captures the video in the field and when sharing partners see it on their screens. Latency can vary from a few seconds to half a minute or even longer.

In addition to network configuration, video stream latency is affected by the chosen streaming protocol. For example, the HTTP Live Streaming (HLS) protocol focuses on high-quality video at the cost of high latency. This is because HLS prepares "chunks" of video and delivers them to the client for a smooth, high-quality video experience. The downside is that chunking the video takes time (because of encoding, transcoding, buffering, etc.), so the video may not appear for 30–45 seconds after the camera captures it. Because of this, HLS is not ideal for realtime operational use by sharing partners. On the other hand, Web Real-Time Communication (WebRTC) creates direct dynamic channels between peers without the need for any external encoders. This means that streams can be transferred with very low latency, albeit with lower quality. This trade-off may be acceptable for sharing partners who rely on video streams to react quickly to situations on the roadway, even if the image is not perfectly clear.
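The chunking delay can be roughly estimated from the segment length. The HLS specification (RFC 8216) advises clients not to begin playback of a live stream less than three target durations from the end of the playlist. Delay therefore grows with segment duration. The Python sketch below uses that rule of thumb. The 10-second and 2-second segment lengths and the 5 seconds of processing time are assumptions for illustration. The estimate is rough and ignores player-specific buffering.

```python
def estimate_segmented_latency_s(segment_duration_s: float,
                                 segments_behind_live: int = 3,
                                 processing_s: float = 0.0,
                                 network_s: float = 0.0) -> float:
    """Rough capture-to-screen delay for a segmented (HLS-style) stream.

    The player starts `segments_behind_live` segments back from the newest
    published segment, so delay grows with segment duration. processing_s
    covers encoding, transcoding, and packaging; network_s covers delivery.
    """
    return segment_duration_s * segments_behind_live + processing_s + network_s


# Assumed values: 10-second segments plus 5 seconds of encoding and packaging.
print(estimate_segmented_latency_s(10, processing_s=5.0))
# Assumed shorter 2-second segments cut the buffering component sharply.
print(estimate_segmented_latency_s(2, processing_s=5.0))
```

With the first set of assumptions, the estimate falls within the 30–45 second range described above. This is why shortening segments is a common first lever for reducing HLS latency.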

In a race to reduce latency while delivering the level of quality TMCs need, some transportation domain vendors are deploying proprietary protocols tailored for use in TMCs and for stream sharing with partners.

Security

Physical security and cybersecurity are critical considerations for TMCs as they look to share streaming video with their partners and the public. Security concerns permeate all other aspects of TMC operations and video stream sharing specifically, including field equipment, network infrastructure and configuration, streaming and stream-sharing equipment, video players, etc.

Beyond physical security considerations for field cameras, the specific physical security considerations for stream sharing concern equipment that may be deployed off-site or with hosted solutions. For example, a stream sharing architecture that depends on switches, routers, encoders, and decoders deployed off-site introduces risk in several areas. Off-site equipment and hardware could be compromised if the location does not have a strong physical security policy. An unauthorized person could access the physical equipment and either damage it or connect to it in order to gain unauthorized access to video streams, modify its configuration to degrade performance or send video elsewhere, or compromise the upstream TMC network and gain unauthorized access to it. With hosted solutions, physical security is generally addressed by the hosting provider's policies. Some risk still remains, however, that a person authorized to access physical equipment for one customer may also tamper (intentionally or unintentionally) with equipment for another customer.

Arguably, the more challenging security considerations involve cybersecurity. Any time devices are networked and connected, there is a risk of compromise at either end of the connection or through a man-in-the-middle (MITM) attack. Many of these risks can be managed and minimized for internal operations through network security measures that prevent unauthorized access and control traffic within the network. The situation is more difficult to manage when portions of the network and its capabilities are exposed in order to share streams with partners and the public.

For architectures requiring deployment of equipment at partner sites, the TMC must make external connections to each individual location. To extend the network to these locations, the TMC must either establish private leased lines, which can be very expensive and do not scale to a large number of partners, or establish virtual private networks (VPNs) that encrypt traffic so that others on the internet cannot access it. The challenge is that many VPN technologies carry traffic over Transmission Control Protocol (TCP), which requires received data to be acknowledged and delivers it strictly in order, retransmitting anything that is lost. This increases network traffic and introduces additional transmission delays. To address this, TMCs may have to consider a VPN based on User Datagram Protocol (UDP). Regardless of the type of VPN or secure connection used with sharing partners, this model does not scale well. An overly stretched network connecting to many different locations increases the potential for misconfiguration or for exposing a vulnerability through unpatched systems.

Another approach to this problem is to build an architecture that segments away the sharing portion of the network from the rest of the TMC network and secures that segment with an understanding that it is going to be accessed by many clients. Agencies can then implement specific cybersecurity strategies in that segment with appropriate fail-safe procedures that would prevent any compromise of the segment from impacting the TMC network and resources.
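One way to reason about such a segment is as a default-deny policy. TMC systems may push streams into the sharing segment, and authorized partners may pull from it. Nothing may initiate connections from the segment into the TMC network, and partners may not reach the TMC network directly. The Python sketch below expresses that policy with the standard `ipaddress` module. All address ranges and partner names are hypothetical. The sketch evaluates only new connection attempts and assumes a stateful firewall handles reply traffic. It illustrates the idea and is not a firewall configuration.

```python
import ipaddress

# Hypothetical address plan (private and documentation ranges for illustration).
TMC_INTERNAL = ipaddress.ip_network("10.10.0.0/16")
SHARING_SEGMENT = ipaddress.ip_network("10.20.0.0/24")
AUTHORIZED_PARTNERS = [
    ipaddress.ip_network("198.51.100.0/24"),  # hypothetical Agency A
    ipaddress.ip_network("203.0.113.0/24"),   # hypothetical Agency B
]


def _in_any(addr, networks) -> bool:
    return any(addr in net for net in networks)


def is_allowed(src: str, dst: str) -> bool:
    """Default-deny check for a new connection crossing the sharing segment."""
    s, d = ipaddress.ip_address(src), ipaddress.ip_address(dst)
    # TMC systems may push normalized streams into the sharing segment.
    if s in TMC_INTERNAL and d in SHARING_SEGMENT:
        return True
    # Authorized partners may pull streams from the sharing segment only.
    if _in_any(s, AUTHORIZED_PARTNERS) and d in SHARING_SEGMENT:
        return True
    # Everything else is denied, including segment -> TMC internal, so a
    # compromised segment cannot open connections to TMC resources.
    return False


print(is_allowed("198.51.100.7", "10.20.0.10"))  # partner pulling a stream
print(is_allowed("10.20.0.10", "10.10.5.1"))     # segment reaching inward
```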

Hosted solutions often come with robust cybersecurity, as their focus is on video stream sharing and they can use their domain and cybersecurity expertise to ensure hardware, equipment, networks, and software are more secure.

Regardless of whether an in-house or hosted approach is used, the two key points to remember are:

- No networked system is ever 100 percent secure.

- Regardless of the money, time, and effort invested in developing technical cybersecurity solutions, humans are most often the weak link that enables a compromise. Staff and operators must be educated about cybersecurity risks and safeguards.

Video Normalization

When it comes to video stream sharing, it is critical to normalize video so that it can be shared and managed more effectively. Video normalization refers to adjusting different parameters of the video stream to achieve maximum usability at the smallest transfer cost and overhead. Most of the parameters used in normalization are interrelated, and improving one may have a negative impact on others. The goal is to understand how partners use the shared video streams and to normalize streams to meet those needs. For example, each sharing partner may have slightly different needs:

- Transportation agencies will look for average quality video with low latency to ensure situational awareness in near real time to support coordinated operations.

- Public safety agencies may be more concerned with very low latency video, even if the quality is not great. This allows first responders to access video in the field in realtime and ensure their own safety and the safety of others in the field.

- News media may be most concerned with high-quality video, even if it is delayed by 30 seconds, because crystal-clear visuals from the field have a bigger impact on the audience than truly realtime information. Most of the time, these streams are delayed anyway so that the video can be cut off with a kill switch in case of incidents or other sensitive visuals that should not be broadcast.

- The public may be most concerned with having access to a large number of cameras on a public website, even if they are slightly delayed and lower quality, as they are looking for regional awareness or specific trip information.

Video stream normalization includes several key steps:

- Compression. Video compression is necessary to reduce the size of the video stream being transmitted. Compression can be achieved in a number of ways, such as by removing redundant information that repeats from frame to frame and by estimating motion changes. To achieve consistency across different camera technologies and stream types, TMCs can use specific compression algorithms for all their shared streams as part of video normalization. Example compression formats include MPEG-4 Part 2, H.264 (also known as MPEG-4 Part 10 or AVC), and H.265 (also known as HEVC).

- Resolution. Video stream resolution refers to the width and height, in pixels, of the video transmitted to partners. Once again, to provide consistency across camera types and formats, TMCs normalize video streams to a specific resolution. Some standard resolutions are 240px (426 x 240), 360px (640 x 360), 480px (854 x 480, Standard Definition), 720px (1280 x 720), 1080px (1920 x 1080, High Definition), and 2160px (3840 x 2160, also known as 4K), but resolution can be customized as needed.

- Frame Rate. Frame rate refers to the number of video image frames displayed each second and is measured in frames per second (fps). Frame rate mainly affects the smoothness of the video: a lower frame rate looks choppier, and a higher frame rate looks smoother. This is because at a lower frame rate, the user sees fewer frames each second, and the gaps between frames appear as choppiness. Frame rate is important when visualizing high-speed, detailed video, such as sporting events, but may not be critical for other uses. Film has traditionally used 24fps, but frame rates can vary from 1fps to 60fps, with most traffic cameras supporting up to 30fps.

- Bitrate. Bitrate represents the number of bits of data conveyed per unit of time in a video. A higher bitrate means that more information is available in each frame of the video, so the video appears to be of higher "quality." As with other variables, bitrate is related to the level of compression, resolution, and other factors. Bitrates range from 16 kilobits per second on the low end to over 1 gigabit per second for large uncompressed HD video on the high end. Physical camera devices in the field also impose bitrate limitations. For stream sharing, bitrate is rarely considered in a vacuum. Instead, it is adjusted in conjunction with other variables to achieve the best quality at the lowest network cost.
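The interplay of resolution, frame rate, and bitrate can be made concrete with a worked calculation. Raw bitrate is width x height x bits per pixel x frames per second. For 1080p at 30 fps, this is about 1.49 gigabits per second, consistent with the "over 1 gigabit per second" figure above. The Python sketch below assumes 24-bit color with no chroma subsampling. It defines a hypothetical normalization profile (H.264, 1280 x 720, 15 fps, 2 Mbps) to show how much compression such a target implies. The profile values are illustrative assumptions, not a recommendation.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class NormalizationProfile:
    """Hypothetical target parameters every shared stream is converted to."""
    codec: str
    width: int
    height: int
    fps: int
    bitrate_mbps: float


def uncompressed_bitrate_mbps(width: int, height: int, fps: float,
                              bits_per_pixel: int = 24) -> float:
    """Raw video bitrate, assuming 24-bit color and no chroma subsampling."""
    return width * height * bits_per_pixel * fps / 1_000_000


def compression_ratio(profile: NormalizationProfile) -> float:
    """How many times smaller the encoded stream is than the raw video."""
    raw = uncompressed_bitrate_mbps(profile.width, profile.height, profile.fps)
    return raw / profile.bitrate_mbps


# Raw 1080p at 30 fps is roughly 1,493 Mbps, i.e. over 1 gigabit per second.
print(f"{uncompressed_bitrate_mbps(1920, 1080, 30):.0f} Mbps")

shared_720p = NormalizationProfile("H.264", 1280, 720, 15, 2.0)
print(f"Compression ratio: {compression_ratio(shared_720p):.0f}:1")
```

Halving the frame rate or resolution in the profile halves the raw bitrate. This is one reason these parameters are tuned together rather than in isolation.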

When designing a video stream sharing solution, TMCs must consider their network limitations, partner agency needs, and all stream variables to create a normalized video to share with their partners and third parties. Normalization of video requires equipment, hardware, and codecs[4] to process incoming feeds before pushing them out to the sharing partners. Normalization introduces additional latency that needs to be properly communicated to and understood by all parties in a sharing agreement.

Kill-Switch Technology

The key TMC use case for CCTV is to monitor traffic, detect and verify incidents, and coordinate response. In some instances, the field view may expose scenes that should not be shared with other entities. For example, a particularly graphic incident scene may be upsetting to the public and unnecessary for news media. At other times, specific security activity may be in the camera's view, such as police enforcement activity or privileged movement of people or materials, and should therefore not be shared outside of the TMC.

When CCTV streams are pulled into the TMC, most of these use cases do not require any special treatment. However, when sharing streams outside of the TMC, operations staff must be able to stop sharing individual streams when the field situation presents a security issue or shows information that should not leave operations. To achieve this, TMCs implement a "kill-switch" capability that ensures that each stream can be shut off at a moment's notice.

While the concept is simple, implementation is not always so straightforward. Some of the key challenges include:

- Shutting off individual shared streams, or sets of streams, so that only the impacted streams are affected.

- Shutting off streams only to specific sharing partners. For example, a stream may be shut off for the public, media, and other TMC partners, but left available for public safety agencies or specific individuals that need the information to appropriately respond to the incident.

- Introducing artificial delay in shared streams to allow for sufficient time to activate the kill switch before that part of the stream is shared with others.
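The three challenges above can be combined in one small structure: a per-stream, per-partner block list plus a delay buffer. The Python sketch below is hypothetical. The class name, partner group names, and the choice to drop withheld frames rather than queue them are all assumptions. It shows one way the pieces fit together.

```python
import time
from collections import defaultdict, deque
from typing import Callable, Deque, Dict, Iterable, List, Optional, Set, Tuple


class DelayedKillSwitch:
    """Hypothetical per-stream, per-partner kill switch with an artificial delay."""

    def __init__(self, delay_s: float, partners: Iterable[str],
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.delay_s = delay_s
        self.partners: List[str] = list(partners)
        self.clock = clock
        # stream_id -> partner groups currently cut off from that stream
        self.blocked: Dict[str, Set[str]] = defaultdict(set)
        # stream_id -> (capture_time, frame) pairs waiting out the delay
        self.buffers: Dict[str, Deque[Tuple[float, bytes]]] = defaultdict(deque)

    def kill(self, stream_id: str, partners: Optional[Iterable[str]] = None) -> None:
        """Cut one stream for selected partner groups, or for everyone if None."""
        self.blocked[stream_id].update(self.partners if partners is None else partners)

    def restore(self, stream_id: str, partners: Optional[Iterable[str]] = None) -> None:
        """Resume sharing one stream with selected partner groups, or with everyone."""
        if partners is None:
            self.blocked[stream_id].clear()
        else:
            self.blocked[stream_id].difference_update(partners)

    def ingest(self, stream_id: str, frame: bytes) -> None:
        """Hold a newly captured frame until the delay has elapsed."""
        self.buffers[stream_id].append((self.clock(), frame))

    def release(self, stream_id: str) -> Dict[str, List[bytes]]:
        """Route frames older than the delay to every partner not blocked right now.

        Frames withheld from a blocked partner are dropped, not queued.
        """
        now = self.clock()
        buffer = self.buffers[stream_id]
        out: Dict[str, List[bytes]] = {p: [] for p in self.partners}
        while buffer and now - buffer[0][0] >= self.delay_s:
            _, frame = buffer.popleft()
            for partner in self.partners:
                if partner not in self.blocked[stream_id]:
                    out[partner].append(frame)
        return out
```

The block list is checked when frames are released, not when they are captured. An operator who activates the kill switch within the delay window therefore also withholds the frames that triggered the decision. For example, `ks.kill("cam-17", ["public", "media"])` leaves the stream flowing to a `"public_safety"` group.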

Sometimes TMCs have only a basic kill-switch capability that shuts off all stream sharing paths at once. While this may be better than exposing undesired information, an all-or-nothing kill switch is not ideal because it can hamper coordinated response to important incidents by leaving neighboring jurisdictions and operational partners blind to the situation.

Technology and Infrastructure Maintenance

CCTV technology, from cameras in the field to network infrastructure to sharing mechanisms, changes continuously to keep up with the demands of both industry and the public. Streaming video has become commonplace with the proliferation of video services such as Netflix, Hulu, YouTube, and many others. To respond to this demand, manufacturers and developers continuously improve camera capabilities, compression algorithms, network capacity, processing power, etc. From the TMC standpoint, one of the challenges with such fast-moving technology is keeping up with new devices, protocols, and needs. Agencies cannot afford to build an architecture that is not flexible enough to handle different formats and protocols. Instead, they are looking for ways to be technology and protocol agnostic. For example, with the end of life of Adobe Flash announced for 2020, many Flash-based media players and stream distribution solutions will be going away. While underlying protocols such as the Real-Time Messaging Protocol (RTMP) may remain in use, many others are being introduced to take advantage of newer web technologies and capabilities. This means that agencies must continue to adjust and provide video streams to their partners using the latest protocols to minimize network overhead and maximize shared stream quality.

In addition to software and protocols, vendors continuously update and market new cameras with new features and capabilities. While many cameras maintain backwards compatibility, the cost of backwards compatibility is high, and vendors tend to provide this compatibility for a limited amount of time. This technology evolution impacts stream normalization processes.

Another maintenance consideration involves changes in network infrastructure and equipment. Some agencies still rely on copper wire infrastructure, but more and more agencies are moving to fiber optic networks, which provide many benefits. With the expansion of modern network infrastructure, agencies are also adopting more modern networking equipment, including the newest switches, firewalls, routers, etc. These changes can affect how streams are shared and may require frequent configuration changes to keep up with network changes. At the same time, as some agencies start relying more on hosted and cloud-based solutions, the cost and burden of maintenance is starting to shrink for many of the TMCs taking advantage of this new model.