Effectiveness of Disseminating Traveler Information on Travel Time Reliability: Implementation Plan and Survey Results Report

CHAPTER 4. PARTICIPANT TASKS

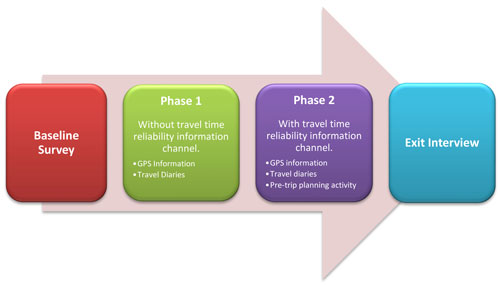

Figure 5 illustrates the tasks participants completed over the course of the study. The following sections describe each participant activity in detail and its relationship to the overall goals and objectives of the study. The overall study design, along with additional details on the system architecture and data flows, is provided in Appendix A.

Figure 5. Chart. Field study phases and data.

BASELINE SURVEY

To begin participation in the study, participants completed a web-based baseline survey that screened them to ensure they met the minimum criteria (i.e., regular travel on the study highway and smartphone ownership) and collected information to establish pre-study travel and information-use habits. The collected baseline information included:

- Usual commute routes, modes, and trip times, including the variability of those trip times.

- Alternate commute routes and modes.

- Frequency of non-commute travel to familiar and unfamiliar destinations in the region.

- Level of familiarity and comfort with travel time information, including travel time reliability (TTR) information in particular, as well as the channels they currently used to obtain traveler information (e.g., radio stations, websites, apps).

- Impacts of traveler information on travel behavior.

- Basic demographic information (e.g., gender, age, household size and income).

The baseline survey also collected contact email addresses to facilitate administration of the remaining tasks (email addresses and other personal identifying information were deleted at the conclusion of the study to protect privacy). The baseline surveys for West Houston, North Houston, North Columbus, and Triangle Transportation studies are provided in Appendix B, Appendix C, Appendix D, and Appendix E, respectively.

As part of the recruitment materials and baseline survey, participants were informed that the project would study the experiences of regular drivers and that their input would help regional agencies prioritize improvements to the transportation system. The baseline survey also stated that it would establish participants' baseline awareness of, comfort with, and use of traveler information resources, and how they used those resources to inform travel choices. Participants were not specifically told that the study concerned TTR information, what that information is, or how they might use it. As is standard practice in research involving human subjects, the specific purpose of the study was withheld because disclosing it could bias responses or confound the overall results, thereby reducing the usefulness of the study. Although TTR is a complex concept whose potential usefulness may not be obvious to travelers, providing participants with information about TTR at the beginning of the study would have been counterproductive.

PHASE 1

At the end of the baseline survey, qualifying participants were asked to install a mobile application on their smartphones to capture information about where and when they traveled during the study period (details about the smartphone application are provided in the next section). At this time, participants were also assigned to a Lexicon assembly (Assembly A or B, encompassing the groups of terms listed in Table 1) and to an information platform (website, App, or 511 telephone system) that would serve as their channel for accessing TTR information. Participants were not informed of these assignments during Phase 1; the assignments were made in advance so they could be used in Phase 2 (discussed in the next section). More information about the assignment process is provided later in the report.

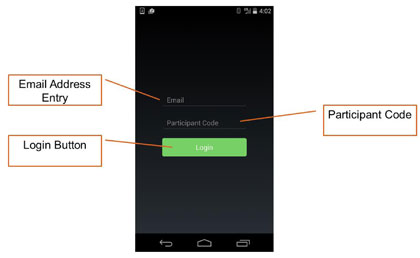

Once participants had downloaded the mobile application, they logged in (as illustrated in Figure 6) using their email address and the participant code sent to them with the download instructions. This unique code was cross-linked with the participant's information, including the study in which they participated (i.e., West Houston, North Houston, North Columbus, or Triangle), as well as their assigned Lexicon assembly and the TTR information platform to be used in Phase 2, which is discussed in the next section.

Figure 6. Screen shot. Mobile application login screen.

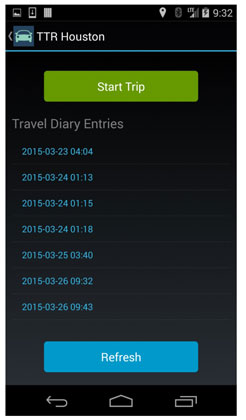

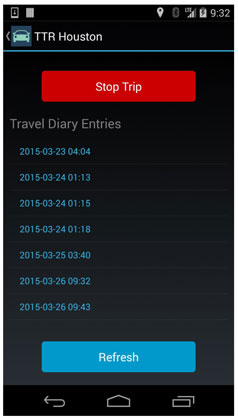

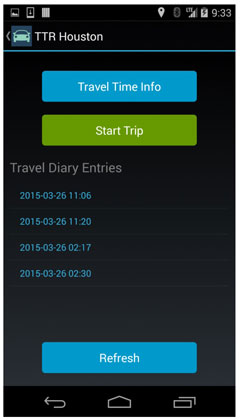

During the first phase of the field study, participants were asked to use the mobile application when traveling and to complete a daily travel diary entry for each trip they took. Importantly, participants were asked to open the application before starting a trip, but NOT to operate the application while driving. The travel diary captured information about their pre-trip travel decisions, including any information sources (website, television, or radio news reports) they used to plan the trip. Travel diary information was matched with the route(s) traveled by participants' vehicles as recorded by the smartphone application. The mobile application home screen used in Phase 1 is shown in Figure 7. Participants accessed the home screen for each trip, pressing the "Start Trip" button at the beginning of the trip and the "Stop Trip" button (shown in Figure 8) at the end. The application collected GPS-related data while in use.

Figure 7. Screen shot. Mobile application home screen, phase 1.

Figure 8. Screen shot. Mobile application in trip, phase 1.

The travel diaries were designed to be completed as easily and efficiently as possible, using a form that participants accessed via the smartphone application. To maximize the likelihood that participants would consistently complete the travel diaries, participants received a daily notification in the application to remind them to complete their travel diary entries. During later rounds of data collection, participants also received regular emails to help them remember to track their trips and complete travel diaries. The transcript of the questions that were included in the Phase 1 travel diary is provided in Appendix F. The overall objective of the Phase 1 travel diary was to identify the following:

- Trip mode and purpose.

- Resources used to plan trip (e.g., websites, TV, radio).

- Impact of any information obtained through those resources on mode choice, departure time, and/or route decisions made before the trip began.

PHASE 2

In the second phase of the field study, participants completing at least four trips during Phase 1 were provided access to one of three pre-assigned information channels: a dedicated website, a smartphone application module that was part of the study application, or a 511 phone number.³ These three channels provided travel time reliability information for the users' selected routes before they began their trips. As in Phase 1, participants were asked to use the smartphone application when making a trip and to complete a modified version of the daily travel diary that captured information regarding their pre-trip travel decisions, their use of the study's information channel (and any other information sources used), and the influence of pre-trip travel time reliability information on their travel decisions. Travel diary information was again matched with information about the routes traveled by participants' vehicles as recorded by the smartphone application.
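The Phase 2 entry rule, including the West Houston Round 1 exception given in footnote 3, amounts to a simple threshold filter. The Python sketch below is illustrative only: the `Participant` record and its field names are hypothetical and do not reflect the study's actual database schema.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Participant:
    """Hypothetical participant record (field names are illustrative)."""
    code: str
    site: str          # e.g., "West Houston", "North Columbus"
    round_no: int      # data collection round (1-4)
    phase1_trips: int  # trips recorded in Phase 1


def min_phase1_trips(p: Participant) -> int:
    # West Houston Round 1 lowered the threshold to three trips (footnote 3);
    # all other sites and rounds required four.
    if p.site == "West Houston" and p.round_no == 1:
        return 3
    return 4


def eligible_for_phase2(p: Participant) -> bool:
    return p.phase1_trips >= min_phase1_trips(p)


def phase2_invitations(cohort: List[Participant]) -> List[str]:
    """Return the participant codes that should be given Phase 2 access."""
    return [p.code for p in cohort if eligible_for_phase2(p)]


if __name__ == "__main__":
    cohort = [
        Participant("WH-001", "West Houston", 1, 3),
        Participant("NC-014", "North Columbus", 1, 3),
        Participant("TR-027", "Triangle", 1, 5),
    ]
    print(phase2_invitations(cohort))  # ['WH-001', 'TR-027']
```

Keeping the threshold in its own function makes a site- or round-specific exception explicit rather than burying it in the filter.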

Trip Planning Website Description

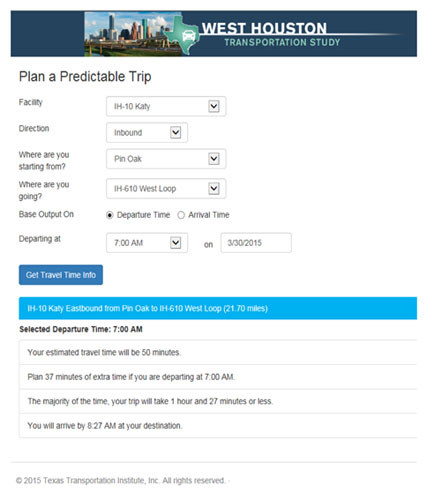

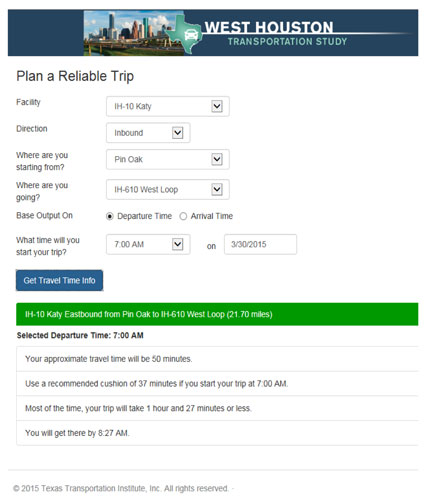

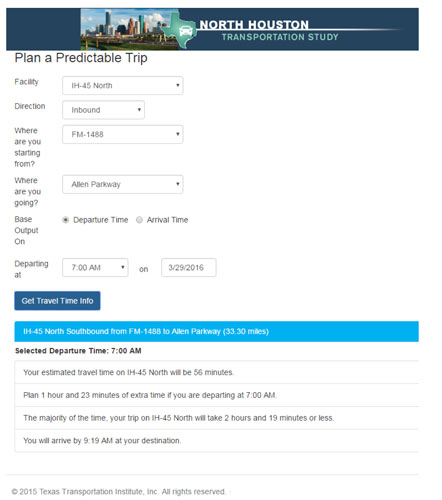

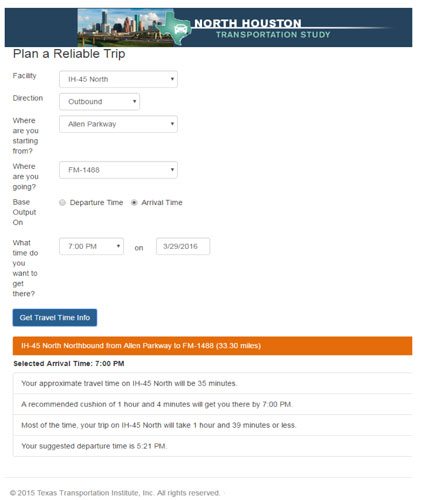

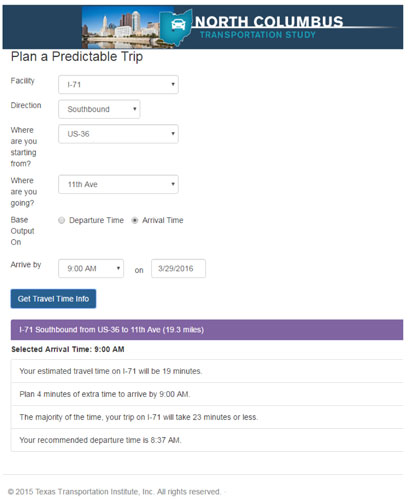

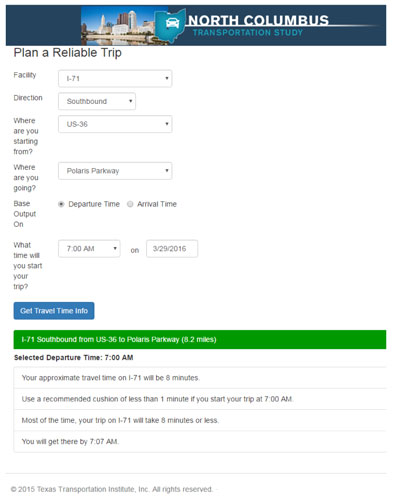

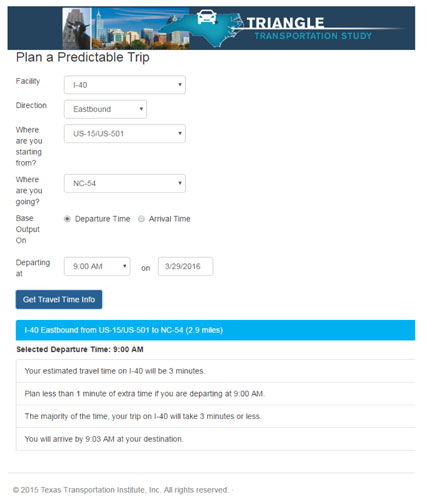

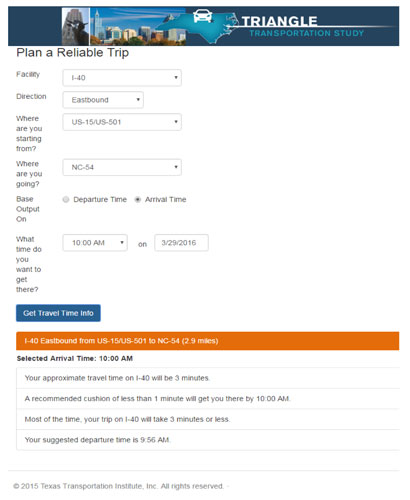

Working with the respective partner agencies, the study team implemented a website to deliver the travel time reliability information during this phase of the study. Access to the website was secured so that the general public could not reach the site. The conceptual website design and page flow, and the Lexicon terms presented on these pages, for the West Houston, North Houston, North Columbus, and Triangle study sites are provided in Appendices G, H, I, and J, respectively. The website for the West Houston Transportation Study is shown in Figure 9 and Figure 10. The websites for the North Houston, North Columbus, and Triangle studies were similar, but varied according to the corridors selected for the studies in those regions; they are shown in Figure 11 through Figure 16. For each site, both the Assembly A and the Assembly B versions are shown to illustrate exactly what participants saw depending on their location and assigned assembly.

Figure 9. Screen shot. West Houston transportation study trip planning website (Assembly A).

Figure 10. Screen shot. West Houston transportation study trip planning website (Assembly B).

Figure 11. Screen shot. North Houston transportation study trip planning website (Assembly A).

Figure 12. Screen shot. North Houston transportation study trip planning website (Assembly B).

Figure 13. Screen shot. North Columbus transportation study trip planning website (Assembly A).

Figure 14. Screen shot. North Columbus transportation study trip planning website (Assembly B).

Figure 15. Screen shot. Triangle transportation study trip planning website (Assembly A).

Figure 16. Screen shot. Triangle transportation study trip planning website (Assembly B).

Smartphone Mobile Application Description

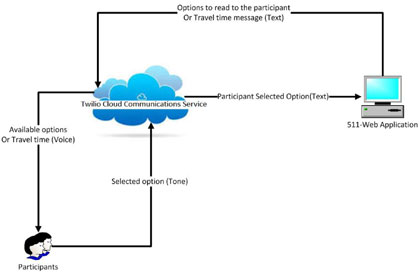

A trip planning mobile application for delivery of the travel time reliability information was implemented to support this phase of the study. The trip planning mobile application was a smartphone application module embedded within the Global Positioning System (GPS) data collection application that all participants downloaded at the start of the study. This module was activated only for participants who were assigned to the mobile application portion of TTR information delivery.

In Phase 2, participants assigned to access the TTR information via the mobile application had the trip planning module of their application activated. Their home screen then looked like the example provided in Figure 17. The "Travel Time Info" button took the participant to the mobile application's travel time planning screen (e.g., Figure 9). Figure 18 shows the home screen after the trip had begun (i.e., after the "Start Trip" button was pressed); the "Travel Time Info" button behaved the same way on this screen. For each location, the website developed to provide the TTR information was embedded in the smartphone application's trip planning module to ensure consistency across dissemination platforms.

Figure 17. Screen shot. Mobile application home screen, phase 2.

Figure 18. Screen shot. Mobile application in trip, phase 2.

Mobile Application Technical Details

The TTR mobile application was developed using Xamarin, a Microsoft product that supports cross-platform mobile development in C# and .NET. Xamarin allows code to be reused between the Android and iOS platforms, reducing the amount of platform-specific code needed. The mobile application had a simple interface that allowed the participant to start a trip; starting a trip registered it with the TTR backend system, after which the application periodically sent location points while the trip was active. Once the user stopped the trip by pressing a button, the mobile application stopped sending location points, registered the trip stop with the backend system, and then presented the participant with a trip report. The trip report was a travel diary survey hosted on Survey Monkey; a different diary was used in each of the two main phases of the project.
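The trip lifecycle described above (start, periodic uploads, stop, diary) can be sketched as a small client state machine. This is a Python illustration, not the study's Xamarin/C# code: the backend method names, the batch size used to make uploads "periodic," and the diary URL format are all hypothetical, and an in-memory class stands in for the Azure-hosted Web API.

```python
import itertools
from typing import Dict, List, Optional, Set, Tuple

LatLon = Tuple[float, float]


class InMemoryBackend:
    """Stand-in for the TTR Web API; method names are hypothetical."""

    def __init__(self) -> None:
        self._next_id = itertools.count(1)
        self.points: Dict[int, List[LatLon]] = {}
        self.stopped: Set[int] = set()

    def register_trip_start(self, user: str) -> int:
        trip_id = next(self._next_id)
        self.points[trip_id] = []
        return trip_id

    def upload_points(self, trip_id: int, batch: List[LatLon]) -> None:
        self.points[trip_id].extend(batch)

    def register_trip_stop(self, trip_id: int) -> None:
        self.stopped.add(trip_id)


class TripRecorder:
    """Client-side trip lifecycle: start -> periodic uploads -> stop -> diary."""

    def __init__(self, backend: InMemoryBackend, user: str,
                 diary_url: str, batch_size: int = 5) -> None:
        self.backend = backend
        self.user = user
        self.diary_url = diary_url
        self.batch_size = batch_size
        self.trip_id: Optional[int] = None
        self._buffer: List[LatLon] = []

    def start_trip(self) -> None:
        if self.trip_id is not None:
            raise RuntimeError("a trip is already active")
        self.trip_id = self.backend.register_trip_start(self.user)

    def on_location(self, point: LatLon) -> None:
        if self.trip_id is None:
            return  # location fixes outside an active trip are ignored
        self._buffer.append(point)
        if len(self._buffer) >= self.batch_size:
            self._flush()

    def _flush(self) -> None:
        if self._buffer and self.trip_id is not None:
            self.backend.upload_points(self.trip_id, self._buffer)
            self._buffer = []

    def stop_trip(self) -> str:
        """Send remaining points, register the stop, and return the diary link."""
        if self.trip_id is None:
            raise RuntimeError("no active trip")
        self._flush()
        self.backend.register_trip_stop(self.trip_id)
        trip_id, self.trip_id = self.trip_id, None
        return f"{self.diary_url}?trip={trip_id}"
```

Buffering points and uploading them in batches is one way to send location data only occasionally; a time-based interval would serve equally well.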

The mobile application integrated with a backend system running in the Microsoft Azure environment. The backend system exposed the Web Application Programming Interface (API) methods used to send data to and retrieve data from the databases. Microsoft SQL Server databases held the user accounts, the trips, and the probe points assigned to those trips. The trip location point data were analyzed to verify that each trip actually occurred within the area of interest, and trips that the verification algorithm found to be within the area of interest were flagged in the database. The data collected by the TTR backend system and Survey Monkey were used for analysis.
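The report does not describe the area-of-interest algorithm. One plausible approach, sketched below under stated assumptions, represents the area of interest as a polygon and flags a trip when at least a given fraction of its probe points fall inside it, using a standard ray-casting point-in-polygon test. The 50 percent threshold is a hypothetical parameter, and treating longitude/latitude as planar coordinates is an approximation that is reasonable only for small, corridor-scale areas.

```python
from typing import Sequence, Tuple

Point = Tuple[float, float]  # (longitude, latitude), treated as planar x/y


def point_in_polygon(pt: Point, polygon: Sequence[Point]) -> bool:
    """Ray-casting test: count edge crossings of a ray cast in the +x direction."""
    x, y = pt
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        # Only edges that straddle the horizontal line through pt can be crossed;
        # this also guarantees y1 != y2, so the division below is safe.
        if (y1 > y) != (y2 > y):
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


def trip_in_area(points: Sequence[Point], area: Sequence[Point],
                 min_fraction: float = 0.5) -> bool:
    """Flag a trip when at least min_fraction of its probe points fall in the area."""
    if not points:
        return False
    hits = sum(point_in_polygon(p, area) for p in points)
    return hits / len(points) >= min_fraction
```

A fraction threshold, rather than requiring every point to fall inside, tolerates trips that begin or end off the corridor and occasional noisy GPS fixes.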

Trip Planning 511 System Description

A system emulating agency 511 delivery of the travel time reliability information was implemented to support this phase of the study. At the time of the study, neither Ohio nor Texas operated a statewide 511 system, and the study was not expected to implement a system of that magnitude in either state. Instead, a cloud-based keypad-entry system that operated in the same manner as a true 511 system was implemented using open-source tools and text-to-speech and speech-to-text translators. The study team selected Twilio as the platform for deploying the trip planning 511 system.

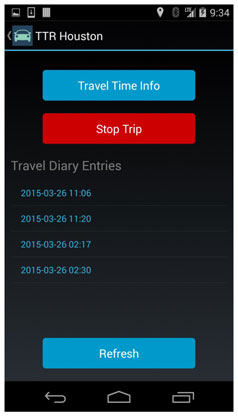

The study's 511 system used the same data feed that supplied information to both the website and the mobile application. A dedicated phone number was provided for each demonstration location. A flow diagram of the 511 system is provided in Figure 19. The system functioned in the same manner at each study site and presented the TTR information for trip planning exactly as it appeared on the website and smartphone application platforms.

Figure 19. Chart. Travel time reliability study 511 system flow diagram.

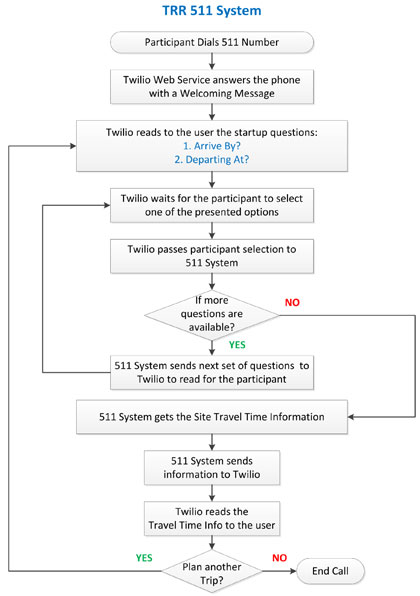

The 511 system was developed using the Twilio API. Twilio is a cloud communications platform that enables developers to build applications that interact with users through voice, video calls, and messaging. The Twilio service also leases Voice over IP (VoIP) phone lines that can be used to communicate with participants. The study team used the Twilio API to develop a web-based 511 Interactive Voice Response (IVR) application that interacted with study participants through voice and keypad tones. Whenever a participant called one of the VoIP lines, the Twilio service read the available options to the participant and forwarded the participant's selected option to the 511 application. The 511 application then sent Twilio a new set of options to read to the participant and received the next selection from Twilio, repeating this exchange until the participant had supplied all the information required to provide a travel time for the planned trip. Finally, the 511 application sent Twilio a text message to read to the participant containing the travel time information for the trip. Figure 20 illustrates the communication flow between the participants and Twilio.

Figure 20. Chart. Travel time reliability 511 communication flow.
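The menu exchange described above maps naturally onto Twilio's TwiML markup: a `<Gather>` verb (with `input`, `numDigits`, `action`, and `method` attributes) reads a `<Say>` prompt and posts the pressed key back to the application as the `Digits` parameter, and a final `<Say>` reads the answer. The sketch below builds those responses with Python's standard library rather than Twilio's helper library. The menus, URL paths, and `lookup` function are hypothetical, and the web-framework glue that would receive Twilio's requests is omitted.

```python
import xml.etree.ElementTree as ET
from typing import Callable, Dict, Optional

# Hypothetical menus: (prompt, {keypad digit: value}). The study's actual
# prompts, routes, and departure options are not reproduced here.
MENUS: Dict[str, tuple] = {
    "route": ("For eastbound, press 1. For westbound, press 2.",
              {"1": "eastbound", "2": "westbound"}),
    "depart": ("To leave now, press 1. To leave in 30 minutes, press 2.",
               {"1": "now", "2": "in 30 minutes"}),
}
ORDER = ["route", "depart"]


def gather(prompt: str, step: str) -> str:
    """TwiML that reads a prompt and collects one keypad digit."""
    resp = ET.Element("Response")
    g = ET.SubElement(resp, "Gather", input="dtmf", numDigits="1",
                      action=f"/511/{step}", method="POST")
    ET.SubElement(g, "Say").text = prompt
    return ET.tostring(resp, encoding="unicode")


def say(message: str) -> str:
    """TwiML that reads a final message and ends the interaction."""
    resp = ET.Element("Response")
    ET.SubElement(resp, "Say").text = message
    return ET.tostring(resp, encoding="unicode")


def next_twiml(answers: Dict[str, str], digits: Optional[str],
               lookup: Callable[[str, str], str]) -> str:
    """Advance one IVR step. `answers` holds this call's choices so far;
    `digits` is the key just pressed (None when the call first connects)."""
    if digits is not None and len(answers) < len(ORDER):
        step = ORDER[len(answers)]
        prompt, options = MENUS[step]
        if digits not in options:
            return gather("Sorry, that is not a valid choice. " + prompt, step)
        answers[step] = options[digits]
    if len(answers) < len(ORDER):
        step = ORDER[len(answers)]
        return gather(MENUS[step][0], step)
    return say(lookup(answers["route"], answers["depart"]))
```

In a deployed handler, the `answers` dictionary would be stored per call (for example, keyed by the `CallSid` that Twilio includes in each request), so concurrent callers do not share state.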

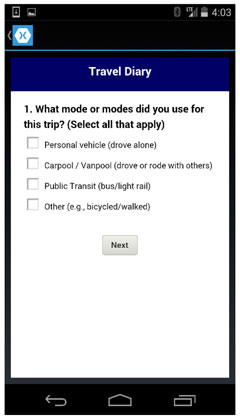

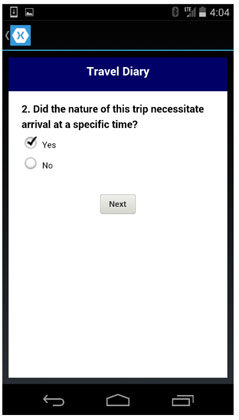

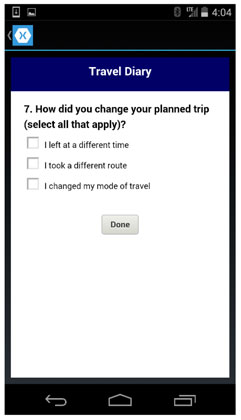

Phase 2 Travel Diary Fields

In Phase 2, participants were asked to complete travel diary entries as in Phase 1 via the smartphone mobile application. Screen shots of the travel diary module of the smartphone application are provided in Figure 21, Figure 22 and Figure 23 (selected questions only). In addition to the questions asked in the Phase 1 diary (Appendix F), the Phase 2 diary (see Appendix K) assessed the following:

- Usefulness of the TTR information provided by the study's website, mobile application, or 511 system for mode, departure time, and/or route decisions.

- Impact, if any, of the TTR information on mode choice, departure time, and/or route decisions made before the trip began.

Figure 21. Screen shot. Mobile application travel diary screen (question 1), phases 1 and 2.

Figure 22. Screen shot. Mobile application travel diary screen (question 2), phases 1 and 2.

Figure 23. Screen shot. Mobile application travel diary screen (question 7), phases 1 and 2.

EXIT SURVEY

Following the second phase of the field study, all participants who had completed at least four recorded trips in each phase⁴ were invited to complete a web-based exit survey. The primary purpose of the exit survey was to determine whether and how respondents used the TTR information they were provided. It did so by collecting self-reported behaviors, based on respondents' recollections of how often they used the TTR information and how often they changed trip plans in response to it, separate from the trip behavior observed passively by the smartphone application during Phase 2. The exit survey also collected respondents' perceptions of and satisfaction with that information. Questions addressed respondents' perceptions or recollections of:

- How often they used the TTR information for different kinds of trips.

- What kind of impacts the TTR information had on behavior (if any) (e.g., changes in departure time, route, mode choice).

- How satisfied they were with various aspects of the information (e.g., clarity, ease of access, trustworthiness, overall usefulness).

- What kind of impacts the TTR information had on trip experience (if any) (e.g., reduced stress, congestion avoidance, shorter trip, overall trip satisfaction).

- Factors that might make the information more useful for different kinds of trips or in an unfamiliar city (or what might make participants likely to use the information in the future).

A number of questions about information use and satisfaction were modeled after baseline questions (particularly where comparability could be useful between baseline perceptions of traveler information and post-study perceptions of TTR information). Other questions from the baseline (e.g., typical travel patterns and demographics) did not need to be repeated; because the exit survey was conducted approximately 5 weeks after the baseline, it was assumed that participants' circumstances and typical behaviors had not changed. The exit survey questions for West Houston, North Houston, North Columbus, and Triangle are included in Appendices L, M, N, and O, respectively.

STUDY ADMINISTRATION METHODS

The following section provides an overview of additional tasks conducted to facilitate and encourage participation in the study. These tasks directly and indirectly supported the primary participant tasks and included recruitment efforts, participant assignment to treatment groups, participant communication management, and study incentive distribution.

Participant Recruitment

Potential participants were identified and recruited in a variety of ways throughout the study. The initial study plan included only one "round" of recruitment and data collection in each study area, using an address-based sample to recruit residents who lived near the study corridors. However, this first round of data collection produced response and retention rates that were lower than expected at several stages of the process. Therefore, additional "rounds" of data collection were conducted, which recruited additional participants through outreach and advertising (convenience sampling), sent more direct and frequent communications to keep participants engaged throughout the study, and offered a variety of incentives to increase recruitment and retention. These activities are discussed below and summarized in Table 3. To reach the needed number of participants, one study round was conducted in Triangle, while three rounds were required in North Columbus and Houston. Note that the rounds are numbered sequentially across all three sites, rather than within each site.

During the first round of data collection, participants were identified using an address-based sample approach. Residents at a sample of addresses in the zip codes adjacent to the study corridors were invited to the study by mail. This approach was used to minimize self-selection bias and other potential invitation biases while targeting residents who might be more likely to use the study corridor frequently because of their proximity to the highway. The number of residents invited in each city was based on preliminary estimates of required sample sizes and of response and retention rates for each participant activity. The target sample size was 900 participants completing every step in the study; the sample budget allowed a "cushion" of 100 extra participants, for a total of 1,000. These targets were distributed across the three study areas. Table 2 summarizes the initial response and retention rate assumptions for each step of the study and the resulting number of invitations to be mailed at each site.

| Study Stage | Response Rate Assumption* | West Houston (Texas) | North Columbus (Ohio) | Triangle (North Carolina) |
|---|---|---|---|---|
| Proposed invitations to mail | -- | 35,800 | 23,900 | 19,900 |
| Baseline response (pre-screening) | 7% | 2,500 | 1,667 | 1,389 |
| Baseline completion (screened & qualified) | 60% | 1,500 | 1,000 | 833 |
| Diary retention (Phase 1 & 2) | 40% | 600 | 400 | 333 |
| Target sample size (Exit Response) | 75% | 450 | 300 | 250 |

*Response rate assumptions reflect the percentage of people at each stage who were expected to complete the next stage. Assumptions were based on experience from previous similar studies and on data about smartphone ownership in the U.S.

As the study progressed, the actual response and retention rates were evaluated, and adjustments were made to the study plans (including increasing the number of invitations in the Triangle area and using different outreach methods to recruit more participants in later rounds of data collection). More details about the address-based sample design for each city are provided in the specific sections on participant selection for the West Houston (Round 1), North Columbus (Round 1), and Triangle study sites.
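The Table 2 figures are consistent with working backward from each site's exit-survey target through the assumed stage rates, with the number of invitations rounded up to the nearest hundred. That rounding rule is inferred from the numbers and is not stated in the report. The Python sketch below reproduces the table under that assumption and confirms that the three site targets sum to the 1,000-participant total (900 plus the 100-participant cushion).

```python
import math
from typing import List

# Stage-to-stage rates from Table 2: invitation -> baseline response,
# response -> qualified completion, completion -> diary retention,
# retention -> exit response.
RATES = [0.07, 0.60, 0.40, 0.75]
EXIT_TARGETS = {"West Houston": 450, "North Columbus": 300, "Triangle": 250}


def stage_counts(target: int, rates: List[float]) -> List[int]:
    """Work backward from the exit target; returns counts from baseline response to exit."""
    counts = [float(target)]
    for r in reversed(rates[1:]):
        counts.append(counts[-1] / r)
    return [round(c) for c in reversed(counts)]


def invitations_needed(target: int, rates: List[float], round_to: int = 100) -> int:
    """Divide through every stage rate, then round up (assumed rounding rule)."""
    required = float(target)
    for r in rates:
        required /= r
    return math.ceil(required / round_to) * round_to


if __name__ == "__main__":
    for site, target in EXIT_TARGETS.items():
        print(site, invitations_needed(target, RATES), stage_counts(target, RATES))
    print("total exit target:", sum(EXIT_TARGETS.values()))  # 1000 = 900 + 100 cushion
```

For West Houston, for example, 450 / (0.07 × 0.60 × 0.40 × 0.75) ≈ 35,714, which rounds up to the 35,800 invitations shown in the table.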

Each of the Round 1 potential participants received an invitation postcard (containing a unique study password) delivered to their home address when the baseline survey started, and a reminder postcard a few days later. Examples of these postcards and all other recruitment materials (described in later sections) used for the West Houston, North Houston, North Columbus, and Triangle Transportation studies are provided in Appendices P, Q, R, and S, respectively. During this round, all participants began each task at the same time (after all participants in their cohort completed the previous task).

For Round 2 in the West Houston study area, participants were re-invited from the panel of participants who had initially completed the baseline survey in Round 1, but had not completed the rest of the study. For Rounds 3 and 4 of data collection, potential participants were recruited through outreach in their communities, such as advertisements on websites or social media, in newsletters or email lists, or on fliers posted at large institutions (such as colleges near the corridor). Details of these recruitment efforts are discussed in the specific sections on participant selection for West Houston (Round 2), North Houston (Round 3) and North Columbus (Round 3 and Round 4). Potential participants who responded to one of these advertisements called a recruiter for pre-screening, and then were given a link to complete the baseline survey. After providing their email address at the end of the baseline survey, participants were then assigned a unique study password to use throughout the remainder of the study. During these recruitment efforts, participants began each task of the study on a weekly basis, as outreach and pre-screening continued to enroll more participants.

Treatment Group Assignment

Participants who completed the baseline survey and were qualified to continue in Phase 1 were randomly assigned to one of six treatment groups. These treatment groups determined how the participant would access TTR information during Phase 2 (web, App, or 511), and which of the two TTR Lexicon assemblies the participant would receive (A or B). Each treatment group contained approximately the same distribution of participants by gender and age categories to minimize unintentional demographic biases. These assignments were made prior to Phase 1, because it was assumed that the demographic distribution of active participants would not change significantly during Phase 1; pre-assignment also helped facilitate a seamless transition from Phase 1 to Phase 2. This assignment process was identical for all rounds of data collection.
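The report states that assignment was random and balanced by gender and age but does not specify the procedure. A common way to achieve that balance is stratified (blocked) randomization, sketched below in Python: participants are grouped by gender and age band, shuffled within each stratum, and dealt round-robin across the six platform-by-assembly groups. The category labels and the seed are hypothetical.

```python
import random
from collections import defaultdict
from itertools import product
from typing import Dict, Iterable, List, Tuple

PLATFORMS = ["web", "app", "511"]
ASSEMBLIES = ["A", "B"]
GROUPS: List[Tuple[str, str]] = list(product(PLATFORMS, ASSEMBLIES))  # 6 groups


def assign_groups(participants: Iterable[Tuple[str, str, str]],
                  seed: int = 0) -> Dict[str, Tuple[str, str]]:
    """participants: (code, gender, age_band). Returns code -> (platform, assembly)."""
    rng = random.Random(seed)
    strata: Dict[Tuple[str, str], List[str]] = defaultdict(list)
    for code, gender, age_band in participants:
        strata[(gender, age_band)].append(code)

    assignment: Dict[str, Tuple[str, str]] = {}
    k = rng.randrange(len(GROUPS))  # random starting group
    for key in sorted(strata):      # fixed stratum order for reproducibility
        codes = strata[key]
        rng.shuffle(codes)          # random order within each gender/age stratum
        for code in codes:
            # Dealing round-robin keeps every stratum (and the overall total)
            # spread across the six groups within one participant of even.
            assignment[code] = GROUPS[k % len(GROUPS)]
            k += 1
    return assignment
```

Continuing the round-robin counter across strata, instead of restarting it for each stratum, keeps the overall group sizes balanced as well as the within-stratum mix.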

Participant Communication Management

Throughout the study, the study administrators regularly communicated with participants through a variety of channels. As previously described, initial recruitment involved communication by mailed postcards or fliers and newsletters. During Round 1, once participants completed the baseline survey and provided their email address, all communication to the participants was sent via email or through the smartphone application downloaded for the study. During later rounds, participants could call or email study recruiters before completing the baseline survey to ask questions or to sign up, but they were still required to provide an email address in the baseline survey to receive follow-up information and reminders.

"Help" email accounts for each study site were established to manage in-bound communication from participants, such as questions about the study and requests for help with each of the tasks if needed. One set of email accounts was set up to manage questions and comments about the baseline and exit surveys during the first round of data collection. Another set of email accounts was used to manage questions and comments about the smartphone application and TTR information resources, and in later rounds of data collection to facilitate study recruitment.

Out-bound emails were sent to participants regularly throughout the study, including invitations to each phase and to the exit survey, regular reminders to log trips and complete travel diaries, and distribution of study incentives. During the first round of data collection, participants received an invitation email at the start of each phase and at the start of the exit survey, and a reminder email a few days later if they did not respond. During later rounds, participants received more frequent reminders to use the smartphone application during Phase 1 and Phase 2 if the study team observed that they were not recording trips.
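The two reminder patterns described above can be expressed as a simple daily rule. In the Python sketch below, the three-day delay standing in for "a few days" and the two-day idle threshold for later rounds are hypothetical parameters, and "activity" is simplified to the date of the participant's most recent response or recorded trip.

```python
from datetime import date, timedelta
from typing import Optional


def reminder_due(today: date, phase_start: date, last_activity: Optional[date],
                 later_round: bool, first_delay_days: int = 3,
                 idle_days: int = 2) -> bool:
    """Decide whether to email a reminder today (parameters are hypothetical)."""
    if not later_round:
        # Round 1 pattern: one reminder a few days after the invitation,
        # sent only if the participant has not responded at all.
        return (last_activity is None
                and today == phase_start + timedelta(days=first_delay_days))
    # Later-round pattern: nudge whenever no trip has been recorded recently.
    reference = last_activity if last_activity is not None else phase_start
    return (today - reference).days >= idle_days
```

Run once a day per participant, the later-round branch keeps sending reminders while a participant stays inactive, matching the more frequent follow-up used after Round 1.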

Study Incentives

To encourage response and to thank participants for their input, a nominal incentive was offered to participants who completed the entire study (including the baseline survey, trip reporting and trip diaries in Phase 1 and Phase 2, and the exit survey). During the first round of data collection, a $25 gift card to Amazon.com was initially offered. This is the amount that was distributed to all participants who completed Round 1 of the West Houston study. However, based on feedback and the low response in Round 1 of the West Houston study, this incentive was increased to a $50 gift card per participant for the North Columbus and Triangle Round 1 studies and the West Houston Round 2 study. This increase was communicated to participants via email and in the online frequently asked questions (FAQs). For the second round of data collection in Houston, participants also were entered into a drawing for a "grand prize" of an additional $500 from Amazon.com. Incentives were distributed by email within one week after the exit survey closed for each study area.

For Round 3 of data collection, participants in North Houston were offered an incentive of $100 cash (or $75 if they completed everything except the exit survey), while participants in North Columbus were entered into a drawing for one of several iPad tablets. For the final round of data collection in North Columbus (Round 4), participants were offered $100 cash.

Table 3 summarizes the administration efforts in each round of data collection.

| Round | Item | West Houston (Texas) | North Houston (Texas) | North Columbus (Ohio) | Triangle (North Carolina) |
|---|---|---|---|---|---|
| Round 1 | Dates | April-June 2015 | - | April-June 2015 | May-July 2015 |
| Round 1 | Recruitment | Mailed postcard | - | Mailed postcard | Mailed postcard |
| Round 1 | Incentives | $25 Amazon card | - | $50 Amazon card | $50 Amazon card |
| Round 2 | Dates | July-August 2015 | - | - | - |
| Round 2 | Recruitment | Re-invited from Round 1 | - | - | - |
| Round 2 | Incentives | $50 Amazon card, $100 grand prize drawing | - | - | - |
| Round 3 | Dates | - | October 2015-January 2016 | October-December 2015 | - |
| Round 3 | Recruitment | - | Outreach | Outreach | - |
| Round 3 | Incentives | - | $75-100 cash | iPad prize drawing | - |
| Round 4 | Dates | - | - | February-March 2016 | - |
| Round 4 | Recruitment | - | - | Outreach and ads | - |
| Round 4 | Incentives | - | - | $100 cash | - |

3 For the first round of the West Houston study, participants who completed at least three trips in Phase 1 were also invited to Phase 2, due to the smaller number of participants who had completed four trips.

4 For the first round of the West Houston study, participants who completed at least three trips in Phase 1 were also invited to Phase 2, due to the smaller number of participants who had completed four trips.