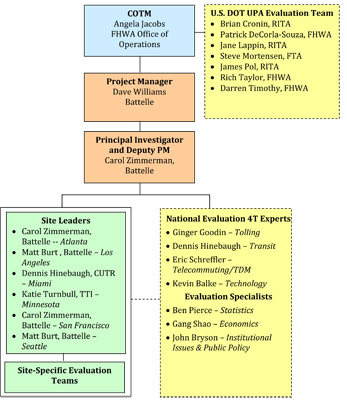

3.0 NATIONAL EVALUATION OVERVIEW

This chapter summarizes how the national evaluation of the UPA sites is being organized and carried out, and it identifies the steps in the San Francisco UPA evaluation process.

3.1 National Evaluation Organizational Structure

The UPA/CRD national evaluation is sponsored by the U.S. DOT. The Intelligent Transportation Systems Joint Program Office (ITS JPO) of the Research and Innovative Technology Administration (RITA) is responsible for the overall conduct of the national evaluation, and representatives from the modal agencies are actively involved. Members of the Battelle evaluation team include:

As highlighted in Figure 3-1, the Battelle team is organized around the individual UPA/CRD sites. A site leader is assigned to each site, along with specific Battelle team members. The site teams can also draw on the resources of 4T experts and evaluation specialists.

The purpose of the national evaluation is to assess the impacts of the UPA/CRD projects comprehensively and systematically across all sites. The national evaluation will generate information and produce technology transfer materials to support deployment of the strategies in other metropolitan areas. It will also generate findings for use in future federal policy and program development related to mobility, congestion, and facility pricing. The focus of the national evaluation is on assessing the congestion reduction realized from the 4T strategies and the associated impacts and contributions of each strategy. The non-technical success factors, including outreach, political and community support, institutional arrangements, and technology, will also be documented. Finally, the deployed projects will be examined through an overall cost-benefit analysis.

Members of the Battelle team are working with representatives from the local partner agencies and the U.S. DOT on all aspects of the national evaluation. This team approach includes the participation of local representatives throughout the process and the use of site visits, workshops, conference calls, and e-mails to ensure ongoing communication and coordination. The local agencies are responsible for data collection, including conducting surveys and interviews. The Battelle team is responsible for directing the local partners on the data needed, data formats, and collection methods, and for analyzing the resulting data and reporting the results.

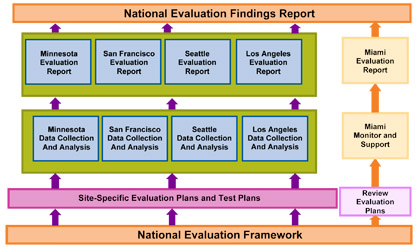

Figure 3-1. Battelle Team Organizational Structure

3.2 National Evaluation Process and Framework

The Battelle team developed a National Evaluation Framework (NEF) to provide a foundation for evaluation of the UPA/CRD sites. The NEF is based on the 4T congestion reduction strategies and the questions that the U.S. DOT seeks to answer through the evaluation. The NEF is essential because it defines the questions, analyses, measures of effectiveness, and associated data collection for the entire UPA/CRD evaluation. As illustrated in Figure 3-2, the framework is a key driver of the site-specific evaluation plans and test plans and will serve as a touchstone throughout the project to ensure that national evaluation objectives are being supported through the site-specific activities.
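To illustrate the NEF's role as a touchstone, the minimal Python sketch below models the chain from objective question to analysis, hypothesis (or question), measure of effectiveness (MOE), and data source, and then checks whether a site plan supports every NEF analysis with at least one planned MOE. The class names, the coverage rule, the question numbers, and the sample analyses and data sources are all hypothetical illustrations; they are not the NEF's actual contents or a tool used by the evaluation team.

```python
from __future__ import annotations

from dataclasses import dataclass, field


# Hypothetical model of the NEF hierarchy; all names are illustrative only.
@dataclass
class MeasureOfEffectiveness:
    name: str
    data_sources: list[str] = field(default_factory=list)


@dataclass
class Hypothesis:
    # A testable hypothesis, or a question where no hypothesis applies
    # (e.g., for non-technical success factors).
    statement: str
    moes: list[MeasureOfEffectiveness] = field(default_factory=list)


@dataclass
class Analysis:
    name: str
    objective_question: int  # which of the four objective questions it serves
    hypotheses: list[Hypothesis] = field(default_factory=list)


def uncovered_analyses(nef: list[Analysis], site_plan: dict[str, set[str]]) -> list[str]:
    """Return names of NEF analyses for which the site plans no NEF-defined MOE.

    site_plan maps an analysis name to the set of MOE names the site will collect.
    """
    gaps = []
    for analysis in nef:
        nef_moes = {moe.name for h in analysis.hypotheses for moe in h.moes}
        # An analysis is covered if at least one of its NEF MOEs is planned.
        if not nef_moes & site_plan.get(analysis.name, set()):
            gaps.append(analysis.name)
    return gaps


# Hypothetical example: two analyses, only one supported by the site plan.
nef = [
    Analysis("Congestion", 1, [
        Hypothesis("The strategies reduce travel times",
                   [MeasureOfEffectiveness("travel time", ["field sensors"])]),
    ]),
    Analysis("Equity", 2, [
        Hypothesis("Impacts are distributed equitably across traveler groups",
                   [MeasureOfEffectiveness("costs by income group", ["traveler survey"])]),
    ]),
]
site_plan = {"Congestion": {"travel time"}}
print(uncovered_analyses(nef, site_plan))  # ['Equity']
```

Requiring only one planned MOE per analysis is a deliberately loose rule for the sketch; a stricter check could require coverage of every hypothesis.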

Figure 3-2. The National Evaluation Framework in Relation to Other Evaluation Activities

The evaluation of each UPA/CRD site will involve several steps. With the exception of Miami, where the national evaluation team is serving in a limited role of review and support to the local partners, the national evaluation team will work closely with the local partners to perform the following activities and provide the following products:

The NEF also guides the local sites in designing and deploying their projects, for example by identifying the need to build in data collection mechanisms where such infrastructure does not already exist. To measure the impact of the congestion strategies, it is essential to collect both “before” and “after” data for many of the measures of effectiveness identified in the NEF. It is also important to establish as many common measures as possible that can be used at all of the sites, so that findings can be compared across sites. For example, a core set of standardized questions and response categories will be prepared for traveler surveys. Questions may need to be tailored or added to reflect each site's specific congestion strategies and local context, such as road names or transit lines, but comparability among sites will remain a goal of the evaluation.

A traditional “before and after” study is the recommended approach for quantifying the extent to which the strategies affect congestion in the UPA/CRD sites. In the “before” (baseline) period, data for the measures of effectiveness will be collected before the deployments become operational. In the “after” (post-deployment) period, the same data will be collected to examine the effects of the strategies. The analysis will track how the performance measures change over time (trend analysis) and examine the degree to which they changed between the “before” and “after” periods. Whenever possible, field-measured data will be used to generate the measures of effectiveness.

3.3 U.S. DOT Four Questions and Mapping to 12 Analyses

Table 3-1 shows the four “Objective Questions” that the U.S. DOT has directed the national evaluation team to address.⁶ The analyses define what must be studied to answer the four objective questions. Table 3-2 identifies the 12 evaluation analyses described in the National Evaluation Framework and shows how they relate to the four objective questions.
These 12 analyses form the basis of the evaluation plans at the UPA/CRD sites, including San Francisco.

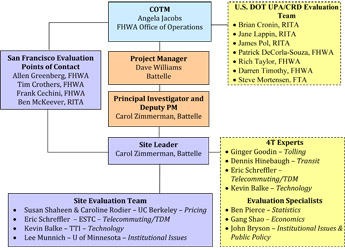

The analyses associated with Objective Question #2 are of two types. The first four analyses focus on the performance of the deployed strategies associated with each of the 4Ts. These analyses will examine the specific impacts of each deployed project/strategy and, to the extent possible, associate the performance of specific strategies with any changes in congestion. The second type of analysis associated with Objective Question #2 focuses on specific types of impacts, such as “equity” and “environmental” impacts. Each of the 12 evaluation analyses was further elaborated into one or more hypotheses for testing. Where an analysis is not guided by a hypothesis per se, such as the analysis of the non-technical success factors, specific questions are stated instead. Next, measures of effectiveness (MOEs) were identified for each hypothesis, followed by the data required for each MOE.

3.4 San Francisco UPA National Evaluation Process

Figure 3-3 presents the San Francisco UPA national evaluation team. The team includes the Contract Officer Technical Manager (COTM), who serves as the U.S. DOT National Evaluation leader; the U.S. DOT evaluation team; the FHWA point of contact for the site; and the Battelle team. The national evaluation team works with representatives from the partnership agencies, identified in Section 2, to develop the UPA evaluation for San Francisco.

Figure 3-3. San Francisco UPA National Evaluation Team

Figure 3-4 presents the process for developing and conducting the national evaluation of the San Francisco UPA projects. The major steps are briefly discussed after the figure.

Figure 3-4. San Francisco UPA National Evaluation Process

Kick-Off Conference Call. The kick-off conference call, held on January 26, 2009, introduced the San Francisco partners, the U.S. DOT representatives, and the Battelle team members. The San Francisco UPA projects and deployment schedule were discussed, and the national evaluation approach and activities were presented. A PowerPoint presentation and various handouts were distributed before the call.

Site Visit and Workshop. Members of the U.S. DOT evaluation team and the Battelle team met with the San Francisco partners in the Bay Area on February 17 and 18. On the first day, the partners briefed the evaluation team on the UPA projects, and SFMTA provided a tour of selected SFpark zones. A day-long evaluation workshop was held on the second day, at which members of the U.S. DOT, Battelle, and local agency teams discussed potential evaluation strategies, including analyses, hypotheses, data needs, and schedule. A PowerPoint presentation containing the preliminary evaluation strategy, analyses, data needs, and other information was distributed before the workshop, and a summary of the workshop discussion was prepared and distributed to participants afterward.

San Francisco UPA National Evaluation Strategy. The San Francisco UPA national evaluation strategy was revised based on the workshop discussion and the completed National Evaluation Framework. The strategy included the hypotheses/questions, measures of effectiveness, and data needs for the analysis areas, as well as a preliminary pre- and post-deployment data collection schedule, possible issues associated with the evaluation, and approaches for addressing exogenous factors. The strategy was presented in a PowerPoint presentation distributed to representatives of the U.S. DOT team and the San Francisco partners, and a conference call was held on April 24 to review and discuss it. All parties agreed on the San Francisco UPA evaluation strategy, and formal approval was subsequently received from the U.S. DOT to proceed with development of the San Francisco UPA national evaluation plan.

San Francisco UPA National Evaluation Plan. This document constitutes the San Francisco UPA national evaluation plan. It provides background on the U.S. DOT UPA, describes the San Francisco UPA projects, and presents the San Francisco UPA evaluation plan and preliminary test plans. The report was distributed in late August 2009 and reviewed with the U.S. DOT and San Francisco UPA partners during a conference call on October 17; the final plan reflects all comments and discussions about the evaluation plan. The document will guide the overall conduct of the San Francisco UPA national evaluation.

San Francisco UPA National Evaluation Test Plans. Based on approval from the U.S. DOT, the Battelle San Francisco UPA evaluation team will develop separate, more detailed test plans for each type of data needed for the evaluation (e.g., traffic and parking). The preliminary test plans contained in the evaluation plan provide the basis for the more fully developed test plans. Between December 2009 and February 2010, the individual test plans will be developed and reviewed with representatives from the U.S. DOT and the local partnership agencies.

Pre-Deployment Data Collection. Once the individual San Francisco UPA test plans are approved, pre-deployment data collection will begin. The SFpark deployment schedule necessitates an abbreviated pre-deployment data collection period.
However, where archived data are available and helpful in establishing long-term trends and in assessing the influence of exogenous factors (such as gas prices), they will be used. As discussed in Section 2, the individual projects will come online at various points between April and December 2010. Thus, the pre-deployment timeframe will begin in January 2010 and end as early as April or as late as December 2010, depending on the project.

Post-Deployment Data Collection. Post-deployment data collection for the San Francisco UPA projects will begin when the SFpark zones to be evaluated become operational in 2010. As other UPA projects come online in 2010, such as the dissemination of parking pricing information on 511 phone in April and on the 511 website and TransLink® in garages in December, post-deployment data collection for those projects will begin. The final post-deployment data collection will take place after the last project is deployed in December 2010. Thus, the post-deployment data collection period stretches from April 2010 through the spring of 2011.

Analysis and Evaluation Reports. Analysis of baseline data will begin once all of the data have been collected in the spring of 2011. Analysis of early post-deployment data (e.g., the first several months) will begin in mid-2010, shortly after post-deployment data collection starts. A technical memorandum on early evaluation results, based on four or five months of post-deployment data, will be completed in the fall of 2010. The final evaluation report will be completed by December 2011.⁵
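The “before and after” comparison and trend analysis described in Section 3.2 can be sketched in a few lines of Python: the percent change in an MOE's mean between the pre- and post-deployment periods, plus an ordinary least-squares slope showing how the MOE was trending. This is a minimal illustration under stated assumptions, not the evaluation team's analysis method; the function names and the monthly occupancy values are hypothetical, and a real analysis would also account for exogenous factors such as gas prices.

```python
from __future__ import annotations

from statistics import mean


def percent_change(before: list[float], after: list[float]) -> float:
    """Percent change in an MOE's mean from the "before" to the "after" period."""
    b, a = mean(before), mean(after)
    if b == 0:
        raise ValueError("baseline mean is zero; percent change is undefined")
    return 100.0 * (a - b) / b


def linear_trend(values: list[float]) -> float:
    """Ordinary least-squares slope of an MOE against period index 0, 1, 2, ..."""
    n = len(values)
    if n < 2:
        raise ValueError("need at least two observations to estimate a trend")
    x_bar, y_bar = (n - 1) / 2, mean(values)
    num = sum((x - x_bar) * (y - y_bar) for x, y in enumerate(values))
    den = sum((x - x_bar) ** 2 for x in range(n))
    return num / den


# Hypothetical monthly average parking occupancy (%) for one evaluated zone.
before = [92, 94, 93, 95]
after = [84, 85, 83, 84]

print(f"{percent_change(before, after):.2f}%")  # -10.16%
print(linear_trend(before))  # 0.8
```

Comparing the post-deployment values against a projection of the pre-deployment trend, rather than against the raw baseline mean alone, is one common way to keep a pre-existing trend from being credited to (or hiding) a strategy's effect.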

5 While one year each of pre- and post-deployment data is desirable, the operational dates of specific projects within the overall evaluation schedule may result in more or less than a year of data being collected in either the pre- or post-deployment period.

6 "Urban Partnership Agreement Demonstration Evaluation - Statement of Work," United States Department of Transportation, Federal Highway Administration; November 29, 2007.